Apache Flink is an open-source stream processing framework developed by the Apache Software Foundation. It is a distributed processing engine for both bounded and unbounded data streams. Flink can be deployed on most common distributed computing environments and performs computations in memory. Unlike Hadoop MapReduce, where a program is split into two phases (map and reduce) that run one after the other, Flink runs the operations of a program in parallel, which makes it considerably faster for many workloads. Flink also integrates with a range of cluster management frameworks and storage systems, such as Apache Kafka, Tez, and YARN.

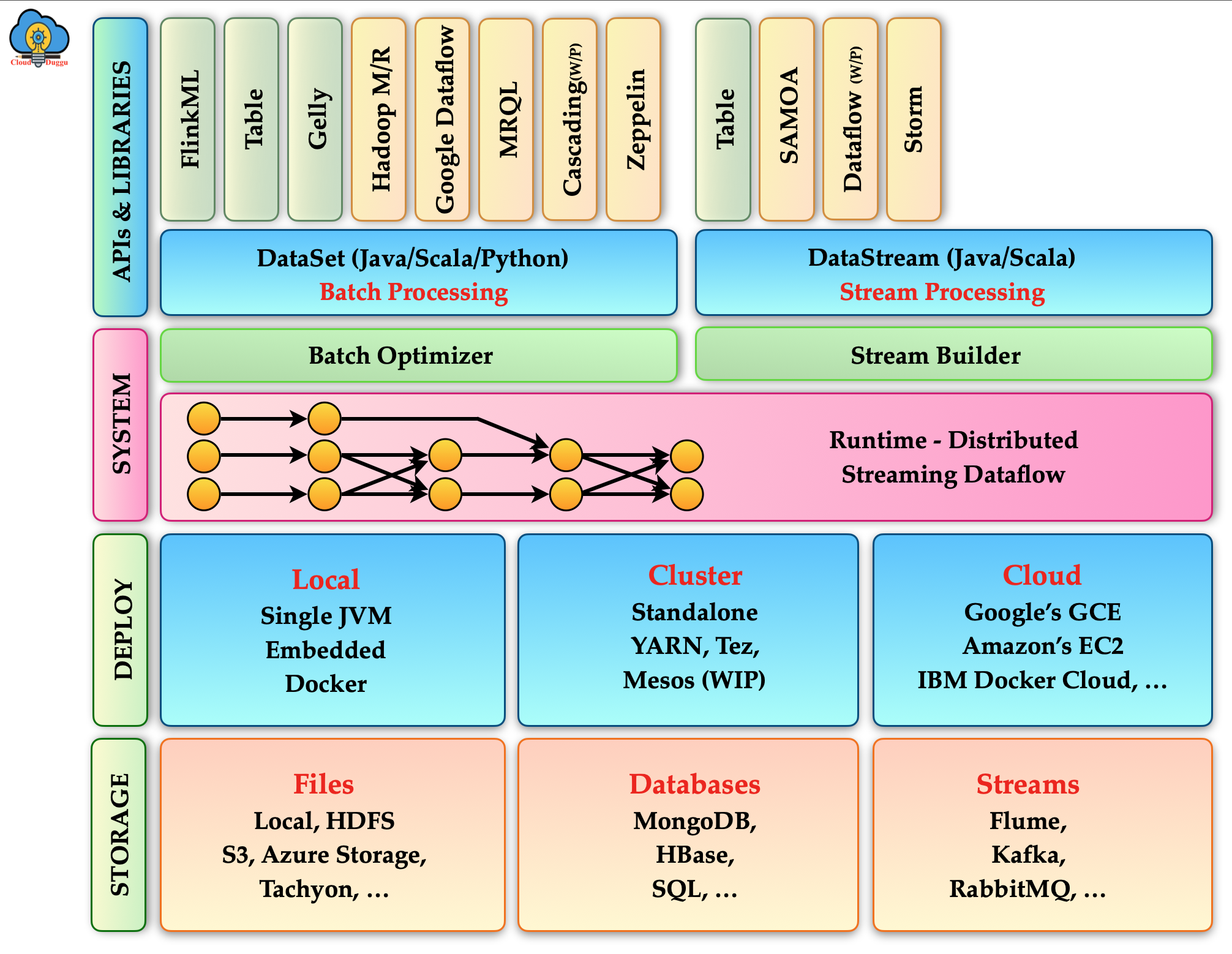
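
To make the difference between bounded and unbounded data concrete, here is a minimal plain-Python sketch. It is not Flink API code; the names `bounded_source`, `unbounded_source`, and `running_count` are hypothetical and chosen only for illustration. The same operator is applied to a finite data set and to an endless generator. With the unbounded source, results have to be emitted incrementally because the input never ends.

```python
import itertools
from typing import Iterable, Iterator, Tuple


def bounded_source() -> Iterator[str]:
    """A bounded stream: a finite data set with a known end."""
    yield from ["error", "info", "error", "warn"]


def unbounded_source() -> Iterator[str]:
    """An unbounded stream: events keep arriving with no defined end."""
    return itertools.cycle(["info", "error"])


def running_count(events: Iterable[str], key: str) -> Iterator[Tuple[str, int]]:
    """Emit an updated count each time an event equal to `key` arrives."""
    count = 0
    for event in events:
        if event == key:
            count += 1
            yield (key, count)


# Bounded input: the operator terminates on its own, and the last emitted
# value is the final result.
bounded_result = list(running_count(bounded_source(), "error"))

# Unbounded input: the operator never terminates on its own, so this sketch
# only takes the first three incremental results.
unbounded_result = list(
    itertools.islice(running_count(unbounded_source(), "error"), 3)
)
```

The design point is that the operator itself does not need to know whether its input is bounded; only the caller decides how long to keep consuming results.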

Apache Flink Component Layers

The following figure shows the component stack of Apache Flink. At the lowest level is the storage layer: Flink supports different types of storage, such as file systems, databases, and streams. Above it is the deploy layer, which shows that Flink can be deployed in local mode, in cluster mode, or in the cloud. Next comes the system layer, which contains the batch optimizer and the stream builder used for processing. At the top are the APIs and libraries, the most important layer of Apache Flink, because it provides the DataSet and DataStream APIs with which programs are written.

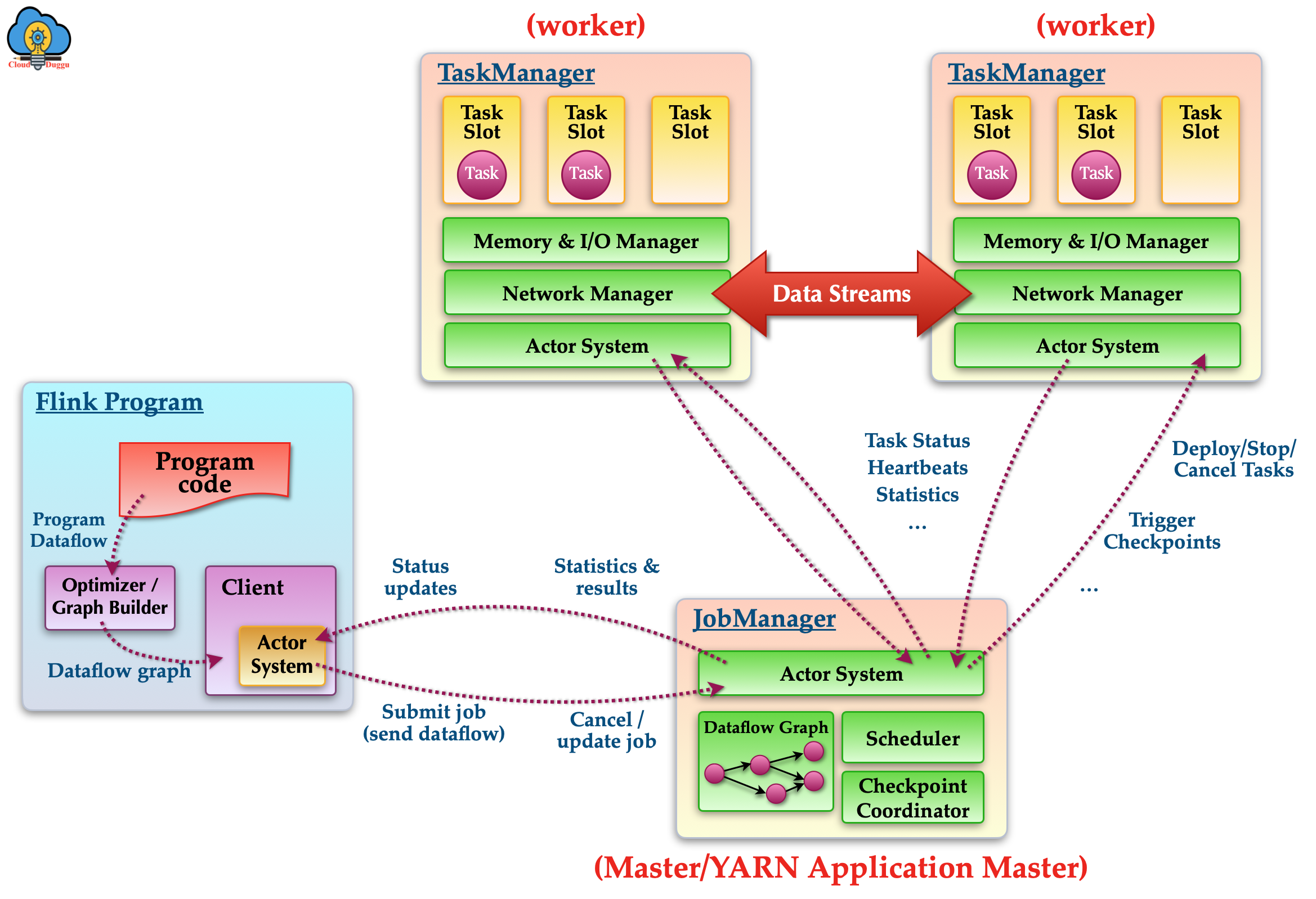

Apache Flink Architecture Overview

Apache Flink is a distributed stream processing system that works in a master-slave fashion. The Master acts as the manager node, whereas the Slaves act as worker nodes. The Master receives requests from the client and assigns them to the Slave nodes for processing. The Slave nodes work on the assigned tasks and report updates back to the Master node.

Apache Flink runs the following two daemons, one on the Master node and one on the Slave nodes.

1. Master Node (Job Manager)

The Job Manager daemon runs on the Master node of the cluster and acts as the coordinator of the Flink system. It receives the program code from the client system and assigns the resulting tasks to the Slave nodes for processing.

2. Slave Nodes (Task Manager)

The Task Manager daemon runs on each Slave node of the cluster and performs the actual operations in the Flink system. It receives commands from the Job Manager and carries out the required actions.

The following figure shows the architectural overview of Apache Flink and how its components coordinate. The client system sends the program to the Flink cluster for execution. The request is received by the Master node, where the Job Manager daemon assigns the tasks to the Task Manager daemons running on the Slave nodes. The Slave nodes perform the actual operations and send the results to the Master node.

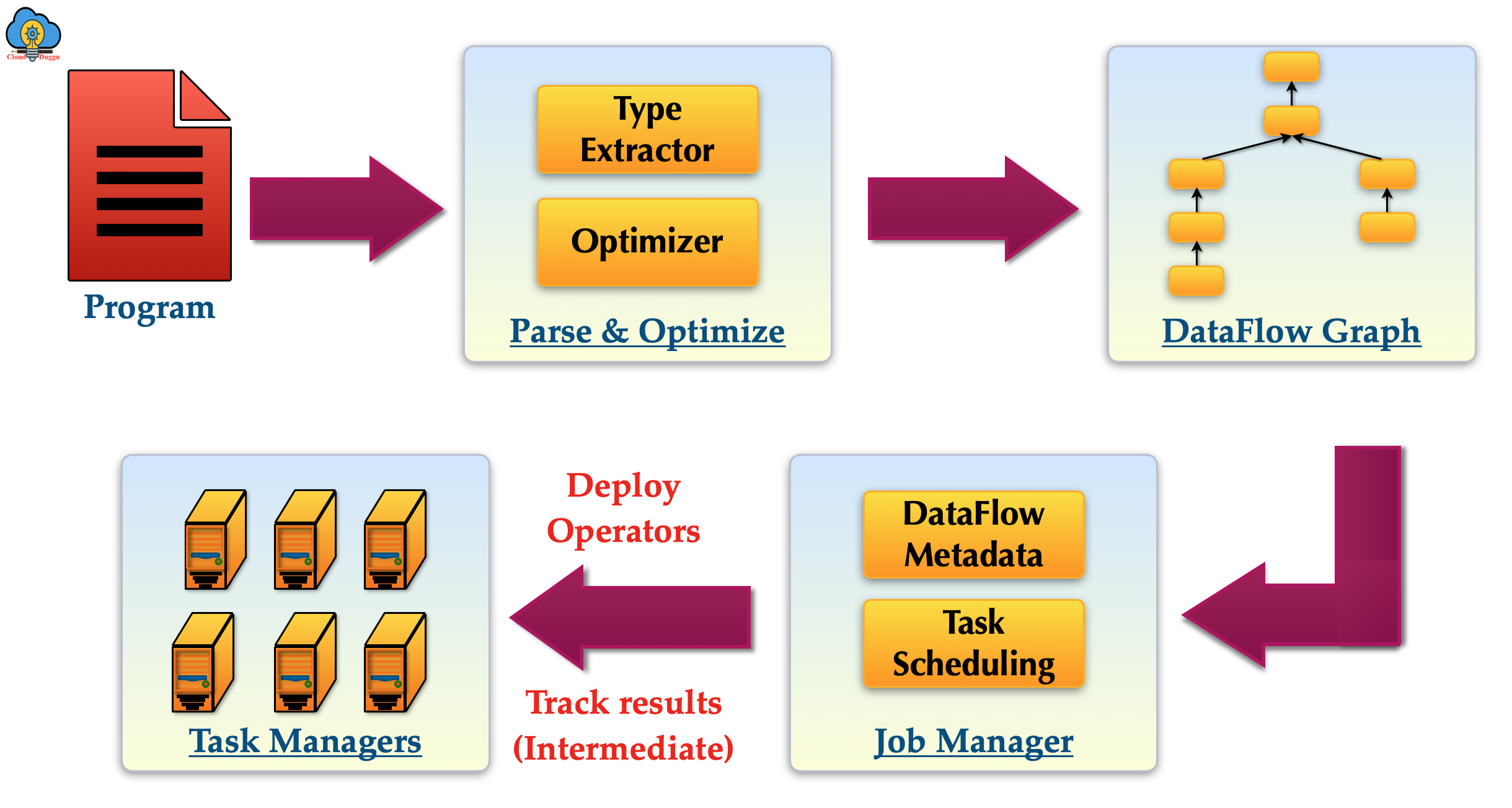
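
The coordination pattern described above can be sketched as a toy model in plain Python. This is an illustration only and does not use or reproduce Flink's real classes: the `JobManager` and `TaskManager` classes, the `submit` and `execute` methods, and the round-robin assignment are all assumptions of this sketch. The workers also run sequentially here, whereas a real cluster runs them in parallel on separate nodes.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class TaskManager:
    """Worker: executes the tasks it is given and returns the result."""
    name: str

    def execute(self, task: List[int]) -> int:
        # The "actual operation" in this sketch is summing a chunk of numbers.
        return sum(task)


@dataclass
class JobManager:
    """Coordinator: splits a job into tasks and assigns them to workers."""
    workers: List[TaskManager]
    results: Dict[str, List[int]] = field(default_factory=dict)

    def submit(self, data: List[int], chunk_size: int) -> int:
        # Split the submitted job into independent tasks.
        tasks = [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]
        for index, task in enumerate(tasks):
            # Round-robin assignment (an assumption of this sketch).
            worker = self.workers[index % len(self.workers)]
            # The worker's partial result is recorded by the coordinator.
            self.results.setdefault(worker.name, []).append(worker.execute(task))
        # Combine the partial results into the final answer.
        return sum(sum(partials) for partials in self.results.values())


job_manager = JobManager(workers=[TaskManager("tm-1"), TaskManager("tm-2")])
total = job_manager.submit(data=list(range(1, 11)), chunk_size=3)
```

Here the client role is played by the final two lines: they hand the whole job to the coordinator and receive only the combined result, without ever addressing a worker directly.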

Apache Flink Execution Flow

Apache Flink executes an application program in the following steps.

1. Program

This is the program written by the application developer, which the client system submits for execution.

2. Parse and Optimize

In this phase, the code is parsed to check for syntax errors, the Type Extractor is run, and the program is optimized.

3. DataFlow Graph

In this phase, the application job is converted into a data flow graph for further execution.

4. Job Manager

In this phase, the Job Manager daemon of Apache Flink schedules the tasks and sends them to the Task Managers for execution. The Job Manager also monitors the intermediate results.

5. Task Manager

In this phase, the Task Managers execute the tasks assigned by the Job Manager.

The following figure shows the Apache Flink Execution Flow.

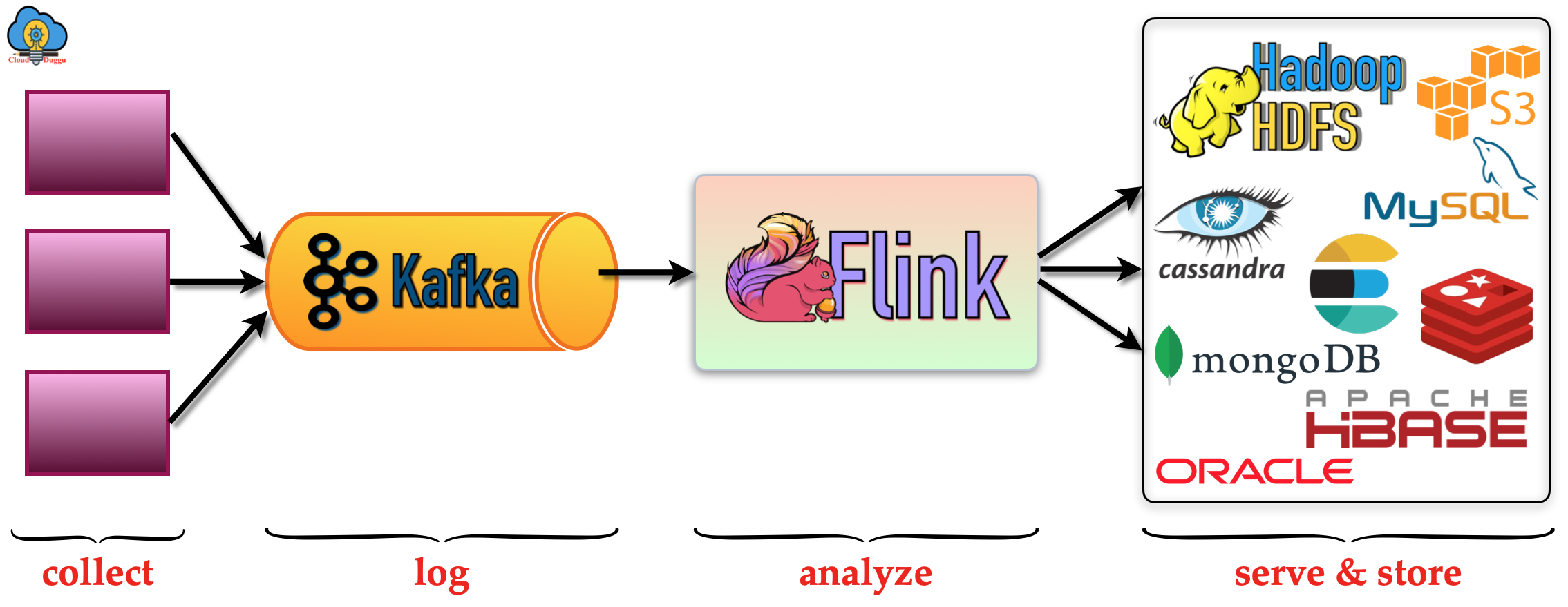
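
As a rough illustration of this flow, the sketch below turns a small program into a data flow graph, derives an execution order from it, and then runs the operators in that order. It is plain Python rather than Flink code, it skips the optimization phase entirely, and the operator names (`source`, `double`, `keep_even`, `merge`) are hypothetical. It relies on `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later).

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List

# Program: named operators, plus the upstream operators each one reads from.
operators: Dict[str, Callable[..., List[int]]] = {
    "source": lambda: [1, 2, 3, 4],
    "double": lambda xs: [x * 2 for x in xs],
    "keep_even": lambda xs: [x for x in xs if x % 2 == 0],
    "merge": lambda a, b: sorted(a + b),
}
inputs: Dict[str, List[str]] = {
    "source": [],
    "double": ["source"],
    "keep_even": ["source"],
    "merge": ["double", "keep_even"],
}

# Data flow graph: `inputs` maps each node to its predecessors, which is the
# form TopologicalSorter expects. Iterating static_order() raises CycleError
# if the graph contains a cycle.
schedule = list(TopologicalSorter(inputs).static_order())

# Scheduling and execution: the loop stands in for the Job Manager walking
# the schedule, and each operator call stands in for a task run by a
# Task Manager.
outputs: Dict[str, List[int]] = {}
for name in schedule:
    args = [outputs[upstream] for upstream in inputs[name]]
    outputs[name] = operators[name](*args)
```

Because `double` and `keep_even` depend only on `source`, a real scheduler would be free to run them at the same time; this sketch simply runs them one after the other in a valid order.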

Apache Flink Pipeline

The following figure gives an end-to-end overview of a log-processing pipeline: logs are collected from different sources, Apache Flink analyzes them, and the results are then sent on for further processing and storage.
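
A pipeline of this shape (collect, analyze, store) can be sketched in a few lines of plain Python. This is only a stand-in for the idea, not Flink code: the sample log lines, the `collect` and `analyze` functions, and the in-memory `storage` list are all hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, Iterator, List

# Hypothetical log sources; in practice these could be files, queues, and so on.
web_logs = ["INFO request served", "ERROR timeout", "INFO request served"]
app_logs = ["WARN slow query", "ERROR null reference"]


def collect(*sources: Iterable[str]) -> Iterator[str]:
    """Combine log lines from several sources into one stream."""
    for source in sources:
        yield from source


def analyze(lines: Iterable[str]) -> Dict[str, int]:
    """Count log lines per severity level (the first token on each line)."""
    return dict(Counter(line.split(maxsplit=1)[0] for line in lines))


# Stand-in for a downstream store that receives the analysis results.
storage: List[Dict[str, int]] = []
storage.append(analyze(collect(web_logs, app_logs)))
```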