This tutorial gives an overview of Hadoop YARN, covering the YARN architecture, its daemons, the Resource Manager, and the Node Manager.

Apache Hadoop YARN Introduction

Apache Hadoop YARN is the resource management component of a Hadoop cluster. Its responsibility is to manage resource allocation and scheduling, and it handles resource management and job management using separate daemons. YARN is not limited to Hadoop MapReduce: it also supports additional processing engines for stream processing, interactive processing, in-memory processing, and so on.

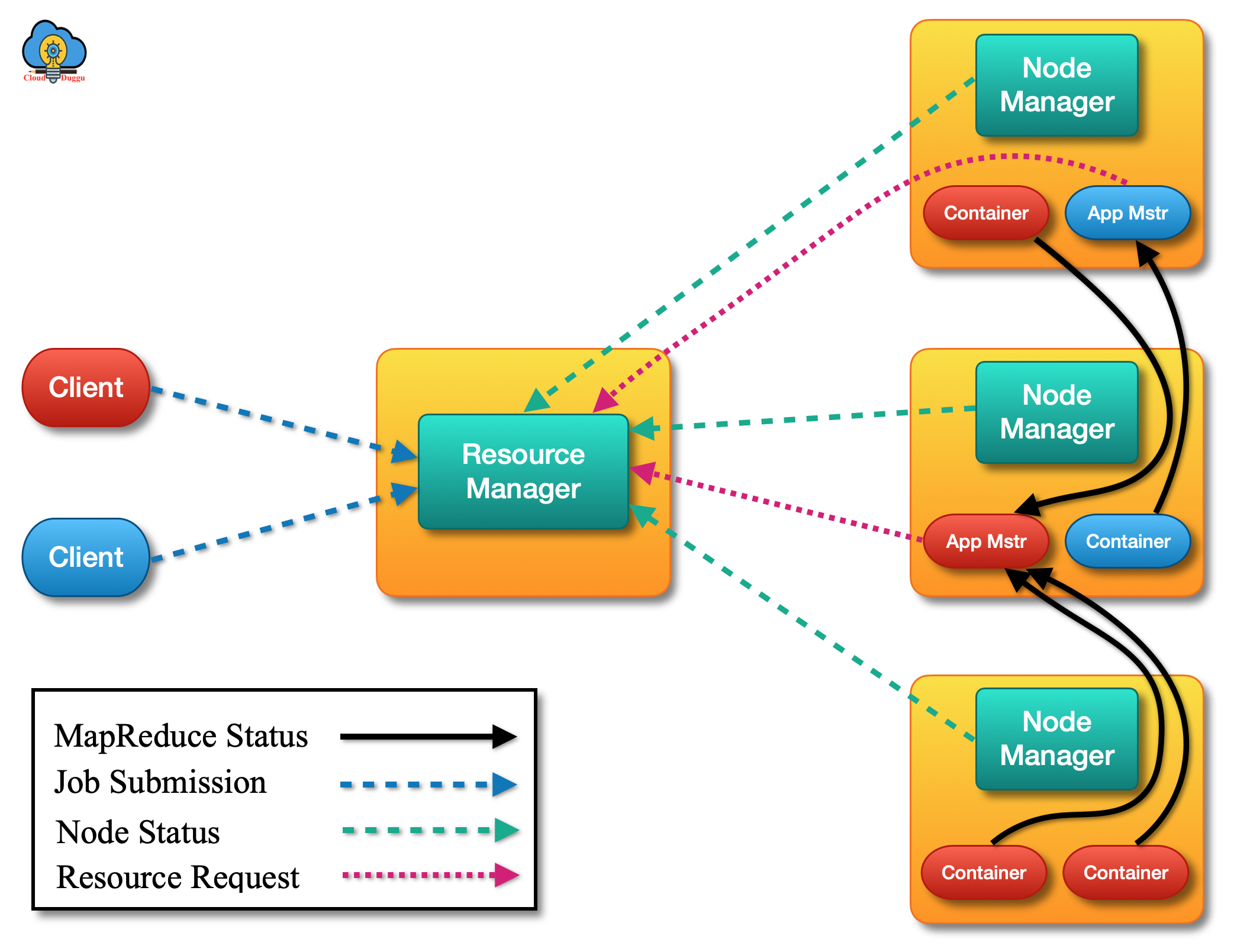

YARN Architecture

The YARN architecture is built around two daemons, the Resource Manager and the Node Manager. The Resource Manager runs on the master node and a Node Manager runs on each slave node.

Now let us see the resource manager and node manager in detail.

Resource Manager

The Resource Manager is the master daemon of YARN and runs on the master node of the Hadoop cluster. Its main responsibility is to divide the available system resources among applications, which makes it the cluster's global resource scheduler.

The following are the two main components of the Resource Manager.

- Scheduler

- Applications Manager

1. Scheduler

The Scheduler allocates system resources to application jobs based on their requirements for memory, CPU, disk, network, and so on. It does not monitor application jobs or restart failed ones; it provides pure scheduling functionality.
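To make the idea of pure scheduling concrete, here is a minimal Python sketch. It is not YARN's actual scheduler: the names `Resource`, `Node`, and `allocate` are hypothetical, only memory and CPU are modeled, and a simple first-fit rule stands in for a real scheduling policy. Note that the function only places requests; it does no monitoring and no restarting.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Resource:
    """A resource amount: memory in MB and virtual cores (illustrative only)."""
    memory_mb: int
    vcores: int

    def fits_in(self, other: "Resource") -> bool:
        return self.memory_mb <= other.memory_mb and self.vcores <= other.vcores


@dataclass
class Node:
    """A hypothetical cluster node with its currently free capacity."""
    name: str
    free: Resource


def allocate(nodes: List[Node], requests: Dict[str, Resource]) -> Dict[str, Optional[str]]:
    """Place each application's request on the first node with enough free capacity.

    Returns a mapping of application id to node name, or None when no node fits.
    """
    placement: Dict[str, Optional[str]] = {}
    for app_id, req in requests.items():
        placement[app_id] = None
        for node in nodes:
            if req.fits_in(node.free):
                # Reserve the capacity on the chosen node.
                node.free = Resource(node.free.memory_mb - req.memory_mb,
                                     node.free.vcores - req.vcores)
                placement[app_id] = node.name
                break
    return placement


nodes = [Node("node-1", Resource(4096, 4)), Node("node-2", Resource(8192, 8))]
requests = {
    "app-1": Resource(3072, 2),
    "app-2": Resource(2048, 2),
    "app-3": Resource(16384, 1),  # larger than any node, so it stays unplaced
}
placement = allocate(nodes, requests)
print(placement)
```

In this toy run, `app-1` lands on `node-1`, `app-2` no longer fits there and goes to `node-2`, and `app-3` is left unplaced because no node has enough memory.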

2. Applications Manager

The Applications Manager accepts job submission requests from users and allocates the container that runs the application-specific Application Master. It is also responsible for restarting the Application Master if it fails.

Node Manager

The Node Manager launches and manages the containers on its node. It runs on the slave machines and runs services that check the health of the node. The checks are done at the disk level and through user-specified tests. If a health check fails, the Node Manager marks the node as unhealthy and informs the Resource Manager, which then stops assigning containers to that node. The Node Manager also keeps sending heartbeats to the Resource Manager to indicate that the node is alive.
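The interaction described above can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not the real protocol: `Heartbeat`, `ToyResourceManager`, and `node_health` are hypothetical names, and a node's health is reduced to two boolean checks.

```python
from dataclasses import dataclass, field
from typing import Dict, List


def node_health(disk_ok: bool, user_check_ok: bool) -> bool:
    """A node counts as healthy only if the disk check and the user-defined check both pass."""
    return disk_ok and user_check_ok


@dataclass
class Heartbeat:
    """Hypothetical heartbeat message sent by a Node Manager."""
    node: str
    healthy: bool


@dataclass
class ToyResourceManager:
    """Tracks the latest health status reported by each node."""
    healthy_by_node: Dict[str, bool] = field(default_factory=dict)

    def on_heartbeat(self, hb: Heartbeat) -> None:
        self.healthy_by_node[hb.node] = hb.healthy

    def schedulable_nodes(self) -> List[str]:
        # Only nodes whose last heartbeat reported healthy receive containers.
        return sorted(n for n, ok in self.healthy_by_node.items() if ok)


rm = ToyResourceManager()
rm.on_heartbeat(Heartbeat("node-1", node_health(disk_ok=True, user_check_ok=True)))
rm.on_heartbeat(Heartbeat("node-2", node_health(disk_ok=False, user_check_ok=True)))
print(rm.schedulable_nodes())
```

Here `node-2` fails its disk check, so only `node-1` remains eligible for new containers. A later healthy heartbeat from `node-2` would make it schedulable again.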

Resource Manager Restart

The Resource Manager arbitrates resources among all the applications in the system. Because it is the master daemon of YARN and runs on the master node, it is potentially a single point of failure in an Apache YARN cluster. The restart feature described in this section keeps the Resource Manager functioning across restarts and makes its down-time invisible to end users.

The Resource Manager has the following two types of restart.

1. Non-work-preserving RM restart

In this type of restart, the Resource Manager stores application state and credential information in a pluggable state store. After a restart it reloads this information and restarts the previously running applications without user intervention.

2. Work-preserving RM restart

In this type of restart, the Resource Manager reconstructs its running state on restart by combining the container statuses reported by NodeManagers with the container requests from ApplicationMasters. The main difference from a non-work-preserving restart is that previously running applications are not killed after the Resource Manager restarts, so applications are not impacted by a Resource Manager outage.
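Both restart types are switched on through `yarn-site.xml`. The following Python sketch generates such a fragment. The three property names and the ZooKeeper-based state store class are taken from the Hadoop ResourceManager Restart guide as best recalled here, so verify them against the documentation for your Hadoop version. The helper `build_yarn_site` is hypothetical, and the connection settings that the state store itself needs are deliberately left out.

```python
import xml.etree.ElementTree as ET
from xml.dom import minidom

# Assumed property names; check them against your Hadoop version's documentation.
RESTART_PROPERTIES = {
    # Enables RM restart (state is saved to and recovered from the state store).
    "yarn.resourcemanager.recovery.enabled": "true",
    # The pluggable state store implementation (ZooKeeper-based in this sketch).
    "yarn.resourcemanager.store.class":
        "org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore",
    # Additionally enables work-preserving recovery.
    "yarn.resourcemanager.work-preserving-recovery.enabled": "true",
}


def build_yarn_site(properties: dict) -> ET.Element:
    """Build a <configuration> element with one <property> per entry."""
    configuration = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(configuration, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return configuration


yarn_site = build_yarn_site(RESTART_PROPERTIES)
# Pretty-print the fragment for readability.
pretty = minidom.parseString(ET.tostring(yarn_site, encoding="unicode")).toprettyxml(indent="  ")
print(pretty)
```

Setting the last property to `false` while keeping recovery enabled would correspond to the non-work-preserving behavior described above.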

YARN Web Application Proxy

The purpose of the Web Application Proxy is to reduce the risk of web-based attacks. It is part of YARN and, by default, runs within the Resource Manager. In the YARN architecture, the Application Master runs as a non-trusted user, provides its own web user interface, and sends the link to the Resource Manager. The Resource Manager runs as a trusted user, so users tend to trust the links it shows, yet a link supplied by an Application Master may point to something malicious. The Web Application Proxy mitigates this risk by warning users who do not own the application that they are connecting to an untrusted site.
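The decision the proxy makes can be illustrated with a small Python sketch. It is a toy model only: `TrackedApp` and `proxy_response` are hypothetical names, the host name is a placeholder, and the real proxy does more than compare user names.

```python
from dataclasses import dataclass


@dataclass
class TrackedApp:
    """Hypothetical record of an application and the link its Application Master registered."""
    owner: str
    tracking_url: str


def proxy_response(app: TrackedApp, requesting_user: str) -> str:
    """Describe what this toy proxy does for a request to the application's web UI."""
    if requesting_user == app.owner:
        # The owner launched the application, so the request is passed through.
        return f"forward to {app.tracking_url}"
    # Anyone else is warned before reaching a site served by untrusted code.
    return (f"warn: application is owned by {app.owner}; "
            f"{requesting_user} is about to visit an untrusted site")


app = TrackedApp(owner="alice", tracking_url="http://am-host.example:12345/")
print(proxy_response(app, "alice"))
print(proxy_response(app, "bob"))
```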

YARN Docker Containers

Docker provides an easy-to-use interface to Linux containers, with easy-to-construct image files for those containers. It lets users bundle an application together with its chosen execution environment to be executed on a target machine. A Docker container gives the application an environment in which to run its code that is isolated from the execution environment of the NodeManager and of other applications.

YARN Timeline Server

The YARN Timeline Server handles the storage and retrieval of applications' current and historic information.

It has the following two responsibilities.

1. Persisting Application Specific Information

Which application-specific information is persisted depends entirely on the application or framework. For example, MapReduce can include information about its map and reduce tasks. Developers can also publish information about their own application to the Timeline Server using TimelineClient.

2. Persisting Generic Information about Completed Applications

Generic information includes application-level data such as:

- The queue name.

- The user information set in the ApplicationSubmissionContext.

- The list of application attempts.

- Information about each application attempt.

- The list of containers run under each application attempt.

- Information about each container.
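The items above form a hierarchy: an application has attempts, and each attempt has containers. The following Python sketch models that shape. It is an illustration only: the class names, field names, and identifiers are hypothetical and do not reflect the Timeline Server's actual schema or ID formats.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ContainerInfo:
    """Information about a single container (illustrative fields only)."""
    container_id: str
    node: str


@dataclass
class AttemptInfo:
    """One application attempt and the containers run under it."""
    attempt_id: str
    containers: List[ContainerInfo] = field(default_factory=list)


@dataclass
class ApplicationInfo:
    """Application-level data: queue, user, and the attempts made."""
    app_id: str
    queue: str
    user: str
    attempts: List[AttemptInfo] = field(default_factory=list)


def containers_by_attempt(app: ApplicationInfo) -> Dict[str, List[str]]:
    """List the container ids run under each application attempt."""
    return {a.attempt_id: [c.container_id for c in a.containers] for a in app.attempts}


app = ApplicationInfo(
    app_id="app-1", queue="default", user="alice",
    attempts=[
        AttemptInfo("attempt-1", [ContainerInfo("c-1", "node-1")]),
        AttemptInfo("attempt-2", [ContainerInfo("c-2", "node-1"),
                                  ContainerInfo("c-3", "node-2")]),
    ],
)
print(containers_by_attempt(app))
```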