This tutorial gives an overview of the Hadoop HDFS component: its nodes (the Namenode and the data nodes), the HDFS architecture, and features such as distributed storage, block replication, and high availability. It also includes an HDFS command reference that covers copying files from the local disk to HDFS and from HDFS to the local disk, along with other useful commands.

Apache Hadoop HDFS Introduction

HDFS stands for Hadoop Distributed File System. It is fault-tolerant and designed to be deployed on low-cost commodity hardware, where it stores data reliably even when hardware fails. HDFS is better suited to storing a smaller number of large files than a huge number of small files. It is well suited for distributed storage and distributed processing.

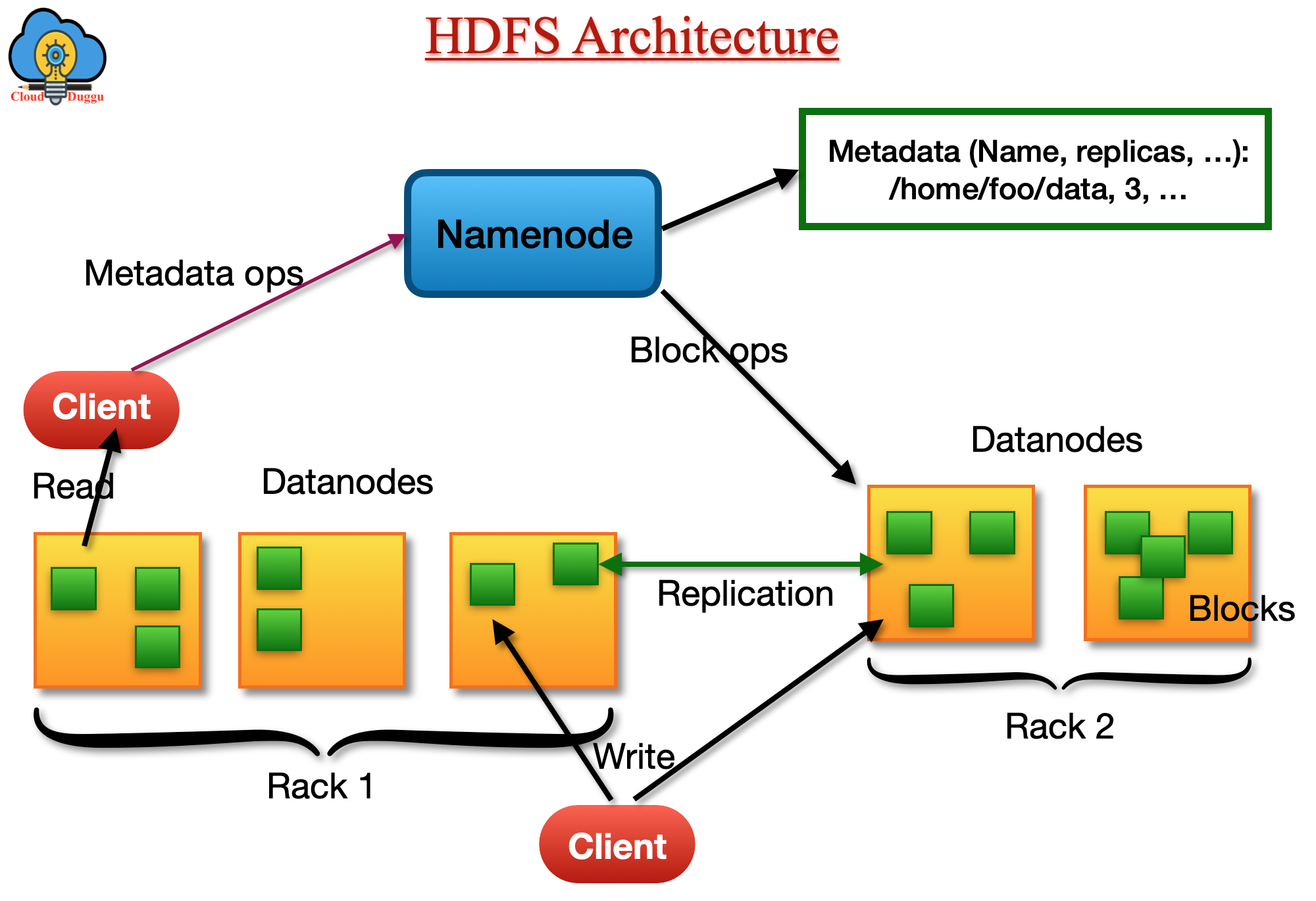

HDFS Architecture

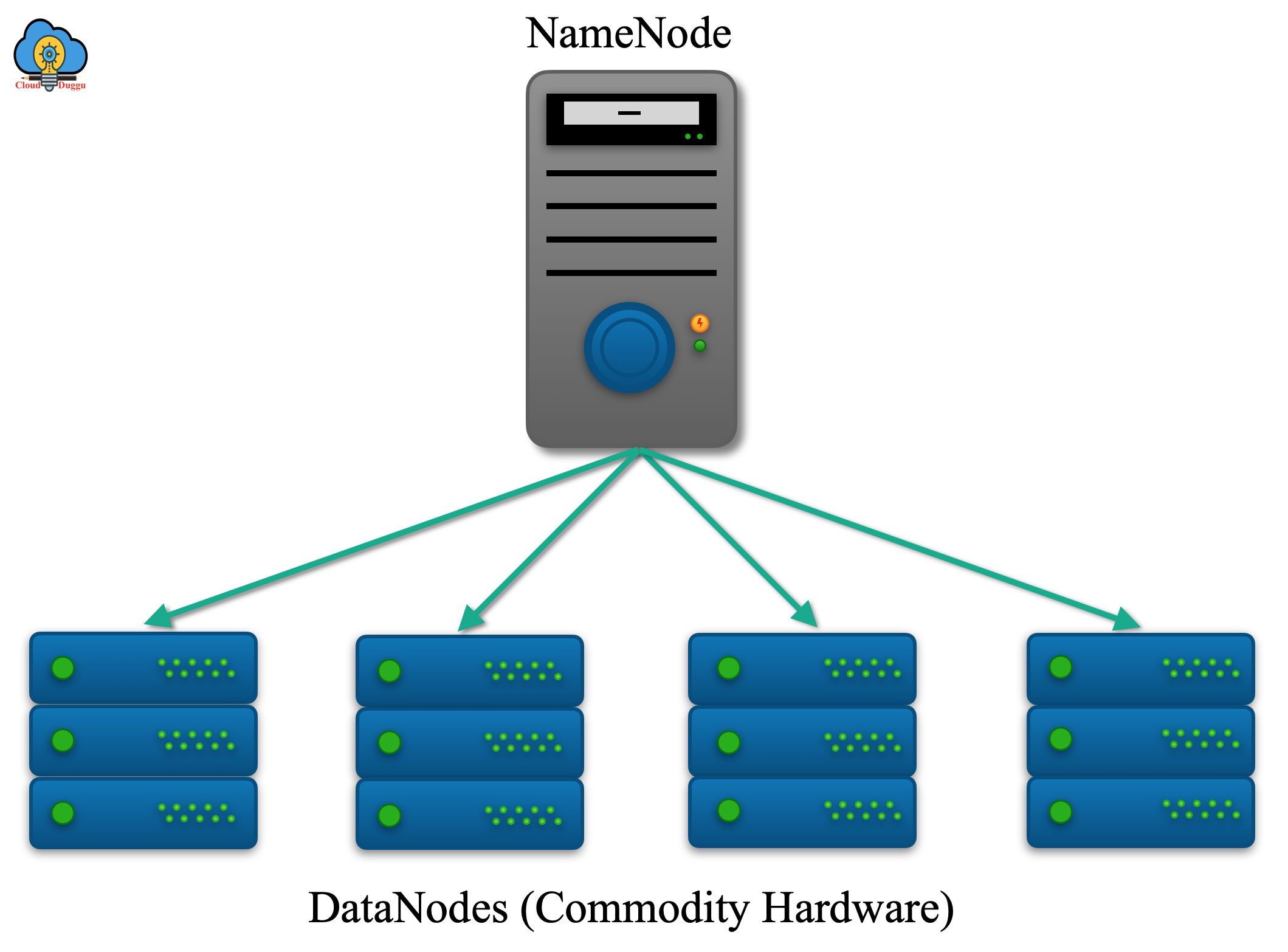

The architecture diagram above shows the HDFS Namenode and data nodes and their roles. The Namenode stores metadata, and the data nodes store the actual data. A client first contacts the Namenode in the cluster to perform a task. Each data node keeps sending a heartbeat to the Namenode to indicate that it is alive.

HDFS has a master/slave architecture: the Namenode daemon runs on the master node, and a Datanode daemon runs on each slave node.

HDFS Namenode

The HDFS Namenode daemon runs on the master node and performs the following tasks.

- The Namenode process runs on the master node.

- The Namenode stores file metadata such as block locations and permissions.

- The Namenode receives heartbeats from the data nodes; a heartbeat indicates that the data node that sent it is alive.

- The Namenode records every metadata change, such as file creation, deletion, and renaming, in the edit log.

HDFS Datanode

The HDFS Datanode daemon runs on the slave nodes and performs the following tasks.

- A Datanode always runs on a slave machine.

- A Datanode stores the actual data and performs block operations such as creating and deleting blocks.

- A Datanode serves the read and write requests it receives from clients.

- A Datanode sends a heartbeat to its Namenode every 3 seconds by default.
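The heartbeat mechanism can be pictured with a small Python sketch. This is a toy model, not Hadoop code: the class name `HeartbeatMonitor` and the `DEAD_TIMEOUT_S` value are assumptions made for illustration. It only shows the idea that the Namenode tracks the last heartbeat time of each Datanode and treats long silence as a failure.

```python
from dataclasses import dataclass, field

# Assumption for this sketch: how long a Datanode may stay silent
# before the monitor counts it as dead.
DEAD_TIMEOUT_S = 630.0


@dataclass
class HeartbeatMonitor:
    """Toy stand-in for the Namenode's view of Datanode liveness."""
    last_seen: dict = field(default_factory=dict)

    def heartbeat(self, datanode_id: str, now: float) -> None:
        # Record the time of the most recent heartbeat from this Datanode.
        self.last_seen[datanode_id] = now

    def live_nodes(self, now: float) -> list:
        return sorted(n for n, t in self.last_seen.items() if now - t <= DEAD_TIMEOUT_S)

    def dead_nodes(self, now: float) -> list:
        return sorted(n for n, t in self.last_seen.items() if now - t > DEAD_TIMEOUT_S)


monitor = HeartbeatMonitor()
monitor.heartbeat("dn1", now=0.0)
monitor.heartbeat("dn2", now=0.0)
# dn1 keeps reporting, dn2 goes silent.
monitor.heartbeat("dn1", now=700.0)
print(monitor.live_nodes(now=700.0))  # ['dn1']
print(monitor.dead_nodes(now=700.0))  # ['dn2']
```

In a real cluster the Datanode would send a heartbeat every few seconds; the sketch skips the intermediate heartbeats because only the most recent one matters for the liveness decision.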

HDFS Secondary NameNode

The Namenode stores modifications to the file system as a log appended to a native file system file called edits. When the Namenode starts, it reads the HDFS state from the fsimage file and then applies the changes recorded in the edits log. Because the Namenode merges the fsimage and edits files only during startup, the edits file can grow very large over time on a busy cluster, and the next restart of the Namenode then takes longer.

The Secondary NameNode merges the fsimage and edits log files periodically and keeps the size of the edits log within a limit. It usually runs on a different machine than the primary NameNode because its memory requirements are of the same order as those of the primary NameNode.

The following two configuration parameters control the start of the checkpoint process on the Secondary NameNode.

- dfs.namenode.checkpoint.period: set to 1 hour by default; specifies the maximum delay between two consecutive checkpoints.

- dfs.namenode.checkpoint.txns: set to 1 million by default; defines the number of uncheckpointed transactions on the NameNode that forces an urgent checkpoint, even if the checkpoint period has not been reached.
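How the two parameters combine can be sketched as a single condition: a checkpoint starts when either limit is reached. The function name `should_checkpoint` is hypothetical and the sketch only mirrors the rule stated above, using the default values given in the list.

```python
CHECKPOINT_PERIOD_S = 3600      # dfs.namenode.checkpoint.period default (1 hour)
CHECKPOINT_TXNS = 1_000_000     # dfs.namenode.checkpoint.txns default (1 million)


def should_checkpoint(seconds_since_last: float, uncheckpointed_txns: int,
                      period_s: int = CHECKPOINT_PERIOD_S,
                      txns_limit: int = CHECKPOINT_TXNS) -> bool:
    """Return True when either trigger described above has fired."""
    return seconds_since_last >= period_s or uncheckpointed_txns >= txns_limit


print(should_checkpoint(600, 50_000))       # False: neither limit reached
print(should_checkpoint(3600, 50_000))      # True: period elapsed
print(should_checkpoint(600, 1_200_000))    # True: transaction limit reached
```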

HDFS Checkpoint Node

The NameNode persists its namespace using two files: fsimage, which is the latest checkpoint of the namespace, and edits, a journal (log) of changes to the namespace since that checkpoint. When the NameNode starts, it merges the fsimage and the edits journal to provide an up-to-date view of the file system metadata. The NameNode then overwrites fsimage with the new HDFS state and begins a new edits journal.
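The merge of fsimage and edits can be illustrated with a short Python sketch. The data formats here are invented for the example (a dictionary for the image, tuples for the journal records) and do not reflect the real on-disk formats; the point is only that replaying the journal on top of the last checkpoint yields the current namespace.

```python
def apply_edits(fsimage: dict, edits: list) -> dict:
    """Replay a list of edit records on top of a namespace snapshot.

    fsimage maps a path to its metadata; each edit is a tuple whose first
    element is the operation name. Both formats are invented for this sketch.
    """
    namespace = dict(fsimage)  # work on a copy, leave the old image untouched
    for op, *args in edits:
        if op == "create":
            path, meta = args
            namespace[path] = meta
        elif op == "delete":
            (path,) = args
            namespace.pop(path, None)
        elif op == "rename":
            src, dst = args
            namespace[dst] = namespace.pop(src)
        else:
            raise ValueError(f"unknown edit operation: {op}")
    return namespace


fsimage = {"/data/a.log": {"replication": 3}}
edits = [
    ("create", "/data/b.log", {"replication": 3}),
    ("rename", "/data/a.log", "/data/a.old"),
    ("delete", "/data/b.log"),
]
new_fsimage = apply_edits(fsimage, edits)
edits = []  # a fresh, empty edits journal starts after the checkpoint
print(new_fsimage)  # {'/data/a.old': {'replication': 3}}
```

The longer the journal, the longer the replay takes, which is why keeping the edits file small through regular checkpoints shortens NameNode startup.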

The Checkpoint node periodically creates checkpoints of the namespace. It downloads fsimage and edits from the active NameNode, merges them locally, and uploads the new image back to the active NameNode. It usually runs on a different machine than the NameNode since its memory requirements are of the same order as the NameNode's. It is started with bin/hdfs namenode -checkpoint on that node.

The start of the checkpoint process on the Checkpoint node is controlled by two configuration parameters.

- dfs.namenode.checkpoint.period: set to 1 hour by default; specifies the maximum delay between two consecutive checkpoints.

- dfs.namenode.checkpoint.txns: set to 1 million by default; defines the number of uncheckpointed transactions on the NameNode that forces an urgent checkpoint, even if the checkpoint period has not been reached.

HDFS Backup Node

The Backup node provides the same checkpointing functionality as the Checkpoint node, but it does not need to download the fsimage and edits files from the active NameNode to create a checkpoint, because it already keeps an up-to-date copy of the namespace state in memory. For the same reason, its RAM requirements are the same as the NameNode's. It is configured in the same manner as the Checkpoint node and is started with bin/hdfs namenode -backup.

The location of the Backup node is configured using the dfs.namenode.backup.address and dfs.namenode.backup.http-address configuration variables.

HDFS Goals and Assumptions

Hardware Failure

Hardware failure is the norm rather than the exception. An HDFS instance may consist of hundreds or thousands of server machines, each storing part of the file system's data. With such a large number of components, each having a non-trivial probability of failure, some component of HDFS is always non-functional. Quick detection of faults and automatic recovery from them is therefore a core goal of HDFS.

Streaming Data Access

Applications that run on HDFS need streaming access to their data sets. HDFS is designed more for batch processing than for interactive use, so the emphasis is on high throughput of data access rather than low latency.

Large Data Sets

Applications that run on HDFS have large data sets; a typical file is gigabytes to terabytes in size, so HDFS is tuned to support large files. It should provide high aggregate data bandwidth and scale to hundreds of nodes in a single cluster.

Simple Coherency Model

HDFS follows a write-once-read-many access model for files. Once a file is created, written, and closed, it need not be changed except for appends and truncates: a client can append data to the end of a file but cannot update the data already written.

Moving Computation is Cheaper than Moving Data

A computation is more efficient when it runs near the data it operates on, especially when the data set is large. In that case it is cheaper to move the computation to the data than to move the data to the machine where the application is running. HDFS provides interfaces that let applications move themselves closer to where the data is located.

Portability Across Heterogeneous Hardware and Software Platforms

HDFS is designed to be easily portable from one platform to another. This portability encourages the widespread adoption of HDFS as a platform of choice for a large set of applications.

HDFS Features

Let us understand the important features of the Hadoop Distributed File System.

Data Replication

HDFS is designed to store very large data sets across a cluster of nodes. It stores each file as a sequence of blocks and replicates each block on multiple nodes for fault tolerance. Users can configure the replication factor and the block size as required.

HDFS Block

A block is the smallest contiguous unit of storage allocated to a file. HDFS is built to store very large files, and applications with very large data sets typically write their data to HDFS once and read it many times. The default block size in HDFS is 128 MB.
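As a worked example of block arithmetic, the following Python sketch computes how a file would be divided with the 128 MB block size mentioned above. The function name `split_into_blocks` is hypothetical; the sketch only does the division and does not talk to HDFS.

```python
BLOCK_SIZE = 128 * 1024 * 1024  # 128 MB block size, in bytes


def split_into_blocks(file_size: int, block_size: int = BLOCK_SIZE) -> list:
    """Return the size in bytes of each block a file of file_size would occupy."""
    if file_size < 0:
        raise ValueError("file_size must be non-negative")
    full_blocks, remainder = divmod(file_size, block_size)
    sizes = [block_size] * full_blocks
    if remainder:
        sizes.append(remainder)  # the last block only holds the leftover bytes
    return sizes


blocks = split_into_blocks(300 * 1024 * 1024)      # a 300 MB file
print(len(blocks))                                  # 3
print([size // (1024 * 1024) for size in blocks])   # [128, 128, 44]
```

A 300 MB file therefore becomes two full blocks and one 44 MB block, and each of those blocks is replicated independently.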

Distributed Storage

HDFS divides data into blocks and stores them on different DataNodes in the cluster. As a result, when a client submits a MapReduce job, the code runs on different machines in parallel.

High Availability

HDFS keeps data highly available by replicating it on multiple nodes of the cluster. The default replication factor is 3, and it can be changed as required in the HDFS configuration file.

Fault Tolerance

HDFS stores data on commodity hardware, which is more likely to fail, so it replicates data on multiple nodes of the cluster to achieve fault tolerance.

High Data Reliability

Because of replication, the blocks of a file are stored on different data nodes. If a node fails, the data remains available on other nodes, and if a whole rack fails, the data is still available on another rack.
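The effect of replication on reliability can be made concrete with some rough arithmetic. The sketch below assumes that nodes fail independently and with the same probability, which is a simplification (real failures are often correlated, for example within a rack), and the function name and the 1% figure are made up for illustration.

```python
def prob_block_unavailable(node_failure_prob: float, replication: int) -> float:
    """Chance that every replica of a block is down at once, assuming independent failures."""
    if not 0.0 <= node_failure_prob <= 1.0:
        raise ValueError("node_failure_prob must be between 0 and 1")
    if replication < 1:
        raise ValueError("replication must be at least 1")
    return node_failure_prob ** replication


# Hypothetical 1% chance that any given node is down at a given moment.
for replication in (1, 2, 3):
    print(replication, prob_block_unavailable(0.01, replication))
```

Under these assumptions, each extra replica reduces the chance of a block being unreachable by a factor of one hundred.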

HDFS Scalability

An HDFS cluster can be scaled in two ways. Vertical scaling adds more disks to existing nodes; it requires downtime because the configuration file must be edited to register the new disks. Horizontal scaling adds more nodes to the cluster; nodes can be added on the fly, so no downtime is required.

HDFS Data Read and Write Operations

Let us understand the HDFS data read and write operations. Once data is stored in HDFS it cannot be edited in place, but data can be appended to the end of a file. When a client contacts the Namenode, the Namenode checks the client's permissions and shares the details of the data nodes on which to perform the read or write operation.

Let us first look at the write operation and then at the read operation.

HDFS Data Write Operation

A client submits a write request to the Namenode, and the Namenode returns the details of the data nodes to use. The client then starts writing data to the data nodes, which form a write pipeline.

Let us understand it with the below steps.

- The client sends a create request through the DistributedFileSystem API.

- The Namenode receives a remote procedure call from DistributedFileSystem to create a new file in the file system's namespace. The Namenode checks whether the file already exists; if it does, the Namenode returns an error to the client.

- DistributedFileSystem returns an FSDataOutputStream for the client to write data to. The underlying DFSOutputStream splits the client's data into packets and places them on the data queue. The DataStreamer consumes the data queue and asks the Namenode to allocate new blocks by picking a list of data nodes to store the replicas.

- With a replication factor of 3, there are 3 data nodes in the pipeline. The DataStreamer sends each packet to the first data node, which stores the packet and forwards it to the second node; the second node stores the packet and forwards it to the third node.

- DFSOutputStream also maintains an internal queue of packets that are waiting to be acknowledged by the data nodes, called the ack queue. A packet is removed from the ack queue only after all data nodes in the pipeline (3 with a replication factor of 3) have acknowledged it.

- When the client has finished writing, it calls close() on the stream. This flushes the remaining packets to the data node pipeline and waits for their acknowledgments before telling the Namenode that the file is complete. The Namenode already knows which blocks make up the file, so it only has to wait for the blocks to be replicated before reporting success.
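The steps above can be sketched as a small in-memory simulation. This is not the Hadoop client: the `DataNode` class, the `write_file` function, and the 4-byte packet size are all invented for the example. It shows only the flow of packets from the data queue through a three-node pipeline and their removal from the ack queue once every node has stored them.

```python
from collections import deque


class DataNode:
    def __init__(self, name: str):
        self.name = name
        self.stored = []  # packets written to this node

    def receive(self, packet: bytes, downstream: list) -> bool:
        """Store the packet, forward it down the pipeline, and return the ack."""
        self.stored.append(packet)
        if downstream:
            return downstream[0].receive(packet, downstream[1:])
        return True  # the last node in the pipeline starts the ack chain


def write_file(data: bytes, pipeline: list, packet_size: int = 4) -> int:
    """Send data through the pipeline; return the number of acknowledged packets."""
    if not pipeline:
        raise ValueError("pipeline needs at least one data node")
    data_queue = deque(data[i:i + packet_size] for i in range(0, len(data), packet_size))
    ack_queue = deque()
    acked = 0
    while data_queue:
        packet = data_queue.popleft()
        ack_queue.append(packet)        # waits here until all nodes confirm
        if pipeline[0].receive(packet, pipeline[1:]):
            ack_queue.popleft()         # acknowledged by every node in the pipeline
            acked += 1
    assert not ack_queue  # a close() would only report completion once this is empty
    return acked


nodes = [DataNode("dn1"), DataNode("dn2"), DataNode("dn3")]  # replication factor 3
acked = write_file(b"hello hdfs", nodes)
print(acked)                                                    # 3
print(all(b"".join(n.stored) == b"hello hdfs" for n in nodes))  # True
```

The simulation is synchronous for brevity; in the real client, packets are streamed and acknowledged asynchronously, which is why a separate ack queue is needed.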

HDFS Data Read Operation

Let us understand it with the below steps.

- The client requests a file by calling open() on DistributedFileSystem, which calls the Namenode through a remote procedure call to find the locations of the file's blocks. For each block, the Namenode returns the addresses of the data nodes that hold a copy.

- DistributedFileSystem returns an FSDataInputStream to the client. When the client calls read() on the stream, the underlying DFSInputStream, which has stored the data node addresses, connects to the closest data node that holds the first block of the file.

- Data then streams from the data node to the client. When the end of a block is reached, DFSInputStream closes the connection to that data node and finds the best data node for the next block. If DFSInputStream finds a corrupted block, it tries to read a replica of that block from another data node and reports the corrupted block to the Namenode.

- When the client has finished reading, it calls close() on the stream.
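The replica selection in the read path can be sketched in a few lines of Python. The function `read_block`, the distance numbers, and the block and node names are hypothetical; the sketch shows only the idea of trying the closest replica first and falling back to the next one when a copy turns out to be corrupted.

```python
def read_block(block_id: str, replicas: list, corrupt: set):
    """Try replicas in order of distance; return (node, bad_replicas_to_report)."""
    reported = []
    for distance, node in sorted(replicas):
        if (node, block_id) in corrupt:
            reported.append((node, block_id))  # would be reported to the Namenode
            continue
        return node, reported
    raise IOError(f"no healthy replica found for {block_id}")


# Block locations as the Namenode might return them: (distance, data node).
# Distances are made-up numbers; smaller means closer to the client.
locations = {
    "blk_1": [(4, "dn3"), (0, "dn1"), (2, "dn2")],
    "blk_2": [(2, "dn2"), (0, "dn1"), (4, "dn4")],
}
corrupt = {("dn1", "blk_2")}  # pretend dn1's copy of blk_2 fails its checksum

for block_id in ("blk_1", "blk_2"):
    node, reported = read_block(block_id, locations[block_id], corrupt)
    print(block_id, node, reported)
# blk_1 dn1 []
# blk_2 dn2 [('dn1', 'blk_2')]
```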

HDFS Top 50 Commands

In this section, we will see HDFS commands for file listing, read/write operations, uploading and downloading files, file management, ownership management, filesystem management, and administration.

HDFS File Listing Commands

Let us understand file listing commands.

File Listing Commands

| Commands | Description |
|---|---|
| $/hadoop/bin/hdfs dfs -ls / | This command will list all the files/directories for the given hdfs destination path. |
| $/hadoop/bin/hdfs dfs -ls -d /hadoop | This command will list directories as plain files. |
| $/hadoop/bin/hdfs dfs -ls -h /data | This command will format file sizes in a human-readable fashion. |
| $/hadoop/bin/hdfs dfs -ls -R /hadoop | This command will recursively list all files in hadoop directory and all subdirectories in the hadoop directory. |
| $/hadoop/bin/hdfs dfs -ls /hadoop/word* | This command will list all the files matching the pattern. In this case, it will list all the files inside the hadoop directory which starts with 'word'. |

Read and Write Files Commands

| Commands | Description |
|---|---|
| $/hadoop/bin/hdfs dfs -text /hadoop/date.log | This command takes a source file and outputs the file in text format on the terminal. The supported formats are zip and TextRecordInputStream. |
| $/hadoop/bin/hdfs dfs -cat /hadoop/data | This command will display the content of the HDFS file data on stdout. |
| $/hadoop/bin/hdfs dfs -appendToFile /home/cloudduggu/ubuntu/data1 /hadoop/data2 | This command will append the content of the local file data1 to the HDFS file data2. |

Upload/Download Files Commands

| Commands | Description |
|---|---|
| $/hadoop/bin/hdfs dfs -put /home/cloudduggu/ubuntu/data /hadoop | This command will copy the file from the local file system to HDFS. |
| $/hadoop/bin/hdfs dfs -put -f /home/cloudduggu/ubuntu/data /hadoop | This command will copy the file from the local file system to HDFS. If the file already exists at the destination path, the -f option overwrites it. |
| $/hadoop/bin/hdfs dfs -put -l /home/cloudduggu/ubuntu/sample /hadoop | This command will copy the file from the local file system to HDFS. The -l option forces a replication factor of 1. |
| $/hadoop/bin/hdfs dfs -put -p /home/cloudduggu/ubuntu/sample /hadoop | This command will copy the file from the local file system to HDFS. The -p option preserves access and modification times, ownership, and mode. |
| $/hadoop/bin/hdfs dfs -get /newfile /home/cloudduggu/ubuntu/ | This command will copy the file from HDFS to the local file system. |
| $/hadoop/bin/hdfs dfs -get -p /newfile /home/cloudduggu/ubuntu/ | This command will copy the file from HDFS to the local file system. The -p option preserves access and modification times, ownership, and mode. |
| $/hadoop/bin/hdfs dfs -get /hadoop/*.log /home/cloudduggu/ubuntu/ | This command will copy all the files matching the pattern from HDFS to the local file system. |
| $/hadoop/bin/hdfs dfs -copyFromLocal /home/cloudduggu/ubuntu/sample /hadoop | This command works similarly to the put command, except that the source is restricted to a local file reference. |
| $/hadoop/bin/hdfs dfs -copyToLocal /newfile /home/cloudduggu/ubuntu/ | This command works similarly to the get command, except that the destination is restricted to a local file reference. |
| $/hadoop/bin/hdfs dfs -moveFromLocal /home/cloudduggu/ubuntu/sample /hadoop | This command works similarly to the put command, except that the source is deleted after it's copied. |
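When these commands are driven from a script, it can help to build the argument list in one place. The Python helper below is a hypothetical sketch: `put_command` is not part of Hadoop, and `HDFS_BIN` assumes the binary lives at /hadoop/bin/hdfs as the commands in the table suggest. It only assembles the command and prints it, so it can be tried without a cluster.

```python
import shlex

# Assumed location of the hdfs binary, taken from the table above; adjust for your install.
HDFS_BIN = "/hadoop/bin/hdfs"


def put_command(local_path: str, hdfs_path: str, overwrite: bool = False,
                preserve: bool = False) -> list:
    """Build the argument list for 'hdfs dfs -put' without running it."""
    args = [HDFS_BIN, "dfs", "-put"]
    if overwrite:
        args.append("-f")   # overwrite the destination if it already exists
    if preserve:
        args.append("-p")   # keep times, ownership, and mode
    args += [local_path, hdfs_path]
    return args


cmd = put_command("/home/cloudduggu/ubuntu/data", "/hadoop", overwrite=True)
print(shlex.join(cmd))
# /hadoop/bin/hdfs dfs -put -f /home/cloudduggu/ubuntu/data /hadoop
```

On a machine with Hadoop installed, passing `cmd` to `subprocess.run(cmd, check=True)` would execute the upload; passing a list rather than a single string avoids shell quoting problems with unusual file names.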

File Management Commands

| Commands | Description |
|---|---|
| $/hadoop/bin/hdfs dfs -cp /hadoop/logbkp /hadoopbkp | This command will copy a file from source to destination on HDFS. In this case, it copies logbkp from the hadoop directory to the hadoopbkp directory. |
| $/hadoop/bin/hdfs dfs -cp -p /hadoop/logbkp /hadoopbkp | This command will copy a file from source to destination on HDFS. The -p option preserves access and modification times, ownership, and mode. |
| $/hadoop/bin/hdfs dfs -cp -f /hadoop/logbkp /hadoopbkp | This command will copy a file from source to destination on HDFS. The -f option overwrites the destination if it already exists. |
| $/hadoop/bin/hdfs dfs -mv /hadoop/logbkp /hadoopbkp | This command will move files that match the specified file pattern from the source to the destination. |
| $/hadoop/bin/hdfs dfs -rm /hadoop/logbkp | This command will delete the file (sends it to the trash). |
| $/hadoop/bin/hdfs dfs -rm -r /hadoop | This command will delete the directory and any content under it recursively. |
| $/hadoop/bin/hdfs dfs -rm -R /hadoop | This command will delete the directory and any content under it recursively. |
| $/hadoop/bin/hdfs dfs -rmr /hadoop | This command will delete the directory and any content under it recursively. It is deprecated in favor of -rm -r. |
| $/hadoop/bin/hdfs dfs -rm -skipTrash /hadoop | The -skipTrash option will bypass trash, if enabled, and delete the specified file(s) immediately. |
| $/hadoop/bin/hdfs dfs -rm -f /hadoop | If the file does not exist, do not display a diagnostic message or modify the exit status to reflect an error. |
| $/hadoop/bin/hdfs dfs -rmdir /hadoopbkp | This command will delete an empty directory. |
| $/hadoop/bin/hdfs dfs -mkdir /hadoopbkp1 | This command will create a directory in the specified HDFS location. |
| $/hadoop/bin/hdfs dfs -mkdir -p /hadoopbkp2 | This command will create a directory in the specified HDFS location, creating parent directories as needed. With the -p option the command does not fail if the directory already exists. |
| $/hadoop/bin/hdfs dfs -touchz /hadoopbkp3 | This command will create a file of zero length at the specified path. |

Ownership and Validation Commands

| Commands | Description |
|---|---|
| $/hadoop/bin/hdfs dfs -checksum /hadoop/logfile | This command will dump checksum information for files that match the specified file pattern. |
| $/hadoop/bin/hdfs dfs -chmod 755 /hadoop/logfile | This command will change the permissions of the file. |
| $/hadoop/bin/hdfs dfs -chmod -R 755 /hadoop | This command will change the permissions of the files recursively. |
| $/hadoop/bin/hdfs dfs -chown ubuntu:ubuntu /hadoop | This command will change the owner of the file. |
| $/hadoop/bin/hdfs dfs -chown -R ubuntu:ubuntu /hadoop | This command will change the owner of the files recursively. |
| $/hadoop/bin/hdfs dfs -chgrp ubuntu /hadoop | This command will change the group association of the file. |
| $/hadoop/bin/hdfs dfs -chgrp -R ubuntu /hadoop | This command will change the group association of the files recursively. |

Filesystem Commands

| Commands | Description |
|---|---|
| $/hadoop/bin/hdfs dfs -df /hadoop | This command will show the capacity, free and used space of the filesystem. |
| $/hadoop/bin/hdfs dfs -df -h /hadoop | This command will show the capacity, free and used space of the filesystem. The -h option formats the sizes in a human-readable fashion. |
| $/hadoop/bin/hdfs dfs -du /hadoop/logfile | This command will show the amount of space, in bytes, used by the files that match the specified file pattern. |
| $/hadoop/bin/hdfs dfs -du -s /hadoop/logfile | Rather than showing the size of each individual file that matches the pattern, this command shows the total (summary) size. |
| $/hadoop/bin/hdfs dfs -du -h /hadoop/logfile | This command will show the space used by the files that match the specified file pattern, with sizes formatted in a human-readable fashion. |

Administration Commands

| Commands | Description |
|---|---|
| $/hadoop/bin/hdfs balancer -threshold 30 | This command runs the cluster balancing utility. The threshold is a percentage of disk capacity, and the value given here overrides the default threshold. |
| hadoop version | This command will show the version of Hadoop. |
| $/hadoop/bin/hdfs fsck / | This command checks the health of the Hadoop file system. |
| $/hadoop/bin/hdfs dfsadmin -safemode leave | This command turns off the safe mode of the NameNode. |
| $/hadoop/bin/hdfs dfsadmin -refreshNodes | This command makes the Namenode re-read its hosts and exclude files and update the set of data nodes that are allowed to connect. |
| $/hadoop/bin/hdfs namenode -format | This command formats the NameNode. It initializes a new, empty namespace, so it should not be run on a NameNode that holds metadata you need. |

HDFS Data Replication

Apache Hadoop HDFS is designed to store very large files reliably across the machines of a large cluster. It stores each file as a sequence of blocks, and the blocks are replicated for fault tolerance. The NameNode makes all decisions about block replication. It periodically receives a Heartbeat and a Blockreport from each DataNode in the cluster. Receipt of a Heartbeat implies that the DataNode is functioning properly.

In the common case, when the replication factor is three, the HDFS placement policy puts the first replica on the local machine if the writer is on a data node; otherwise it puts it on a random data node in the same rack as the writer. The second replica is placed on a node in a different (remote) rack, and the third replica on a different node in that same remote rack. If the replication factor is greater than 3, the placement of the 4th and following replicas is determined randomly while keeping the number of replicas per rack below the upper limit, which is basically (replicas - 1) / racks + 2. Because the NameNode does not allow a DataNode to hold multiple replicas of the same block, the maximum number of replicas that can be created is the total number of DataNodes.
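The placement rule for three replicas and the per-rack upper limit can be sketched in Python. The function names and the two-rack topology are made up for the example, the division in the limit is read here as integer division, and the sketch assumes the writer runs on a data node and that at least one remote rack with two nodes exists. It is a simplified illustration, not the NameNode's implementation.

```python
import random


def max_replicas_per_rack(replicas: int, racks: int) -> int:
    """Upper limit quoted above: (replicas - 1) / racks + 2, using integer division."""
    return (replicas - 1) // racks + 2


def place_three_replicas(topology: dict, writer: str, rng: random.Random) -> list:
    """Pick three data nodes following the policy described above.

    topology maps a rack name to its list of data nodes; writer must be one of them.
    """
    writer_rack = next(rack for rack, nodes in topology.items() if writer in nodes)
    first = writer  # replica 1: the writer's own node
    remote_rack = rng.choice([r for r in topology if r != writer_rack])
    # Replicas 2 and 3: two distinct nodes in the same remote rack.
    second, third = rng.sample(topology[remote_rack], 2)
    return [first, second, third]


topology = {
    "rack1": ["dn1", "dn2", "dn3"],
    "rack2": ["dn4", "dn5", "dn6"],
}
placement = place_three_replicas(topology, writer="dn1", rng=random.Random(0))
print(placement)
print(max_replicas_per_rack(replicas=3, racks=2))  # 3
print(max_replicas_per_rack(replicas=5, racks=2))  # 4
```

Placing two of the three replicas in one remote rack keeps a copy safe from a rack failure while limiting the write traffic that has to cross racks.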

HDFS Disk Balancer

The HDFS Disk Balancer is a tool that distributes data evenly across all disks of a data node. Data can become unevenly spread between disks because of a large number of writes and deletes or because of a disk replacement. To resolve this, the Disk Balancer creates a plan that describes how much data should move between which disks. The Disk Balancer does not interfere with other processes because it throttles how much data is copied every second.

Disk Balancer can be enabled by setting the parameter dfs.disk.balancer.enabled to true in hdfs-site.xml.
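The idea of a balancing plan can be illustrated with a simplified Python sketch. This is not the Disk Balancer's actual algorithm: the function `plan_moves` and the disk figures are invented, and the sketch simply moves data from disks above the average utilization to disks below it until every disk has the same used-to-capacity ratio.

```python
def plan_moves(disks: dict) -> list:
    """Return (source, destination, amount) moves that even out disk usage.

    disks maps a disk name to (used, capacity) in the same unit, for example GB.
    """
    total_used = sum(used for used, _ in disks.values())
    total_capacity = sum(cap for _, cap in disks.values())
    ideal_ratio = total_used / total_capacity
    # Positive surplus: the disk holds more than its fair share.
    surplus = {name: used - ideal_ratio * cap for name, (used, cap) in disks.items()}
    donors = sorted((n for n in surplus if surplus[n] > 0), key=lambda n: -surplus[n])
    takers = sorted((n for n in surplus if surplus[n] < 0), key=lambda n: surplus[n])
    moves = []
    for src in donors:
        for dst in takers:
            amount = min(surplus[src], -surplus[dst])
            if amount > 1e-9:
                moves.append((src, dst, round(amount, 2)))
                surplus[src] -= amount
                surplus[dst] += amount
    return moves


# A data node where disk3 was recently replaced and is still empty.
disks = {"disk1": (600, 1000), "disk2": (600, 1000), "disk3": (0, 1000)}
for src, dst, amount in plan_moves(disks):
    print(f"move {amount} GB from {src} to {dst}")
```

A real plan would also respect a bandwidth limit while the moves are executed, which is what keeps the balancer from disturbing other workloads on the node.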