Apache Pig Latin provides the below list of group and joins operators.

Let us see each operator in detail.

1. GROUP Operator

The GROUP operator performs the grouping of the same group key tuples. After processing the generated output is a relation that has a tuple per group.

Syntax:

grunt> alias = GROUP alias { ALL | BY exp} [, alias ALL | BY exp …] [USING 'collected' | 'merge'] [PARTITION BY partit] [PARALLEL n];

To perform GROUP operation, first, we will load a file name “employee.txt” in HDFS “/pigexample/” location. The content of the file is mentioned below.

1001,James,Butt,New Orleans,Orleans

1002,Josephine,Darakjy,Brighton,Livingston

1003,Art,Venere,Bridgeport,Gloucester

1004,Lenna,Paprocki,Anchorage,Anchorage

1005,Donette,Foller,Hamilton,Butler

1006,Simona,Morasca,Ashland,Ashland

1007,Mitsue,Tollner,Chicago,Cook

1008,Leota,Dilliard,San Jose,Santa

1009,Sage,Wieser,Sioux Falls,Minnehaha

Command:

grunt> employees = LOAD '/pigexample/employee.txt' USING PigStorage(',') as (empid:int,firstname:chararray,lastname:chararray,city:chararray,county:chararray );

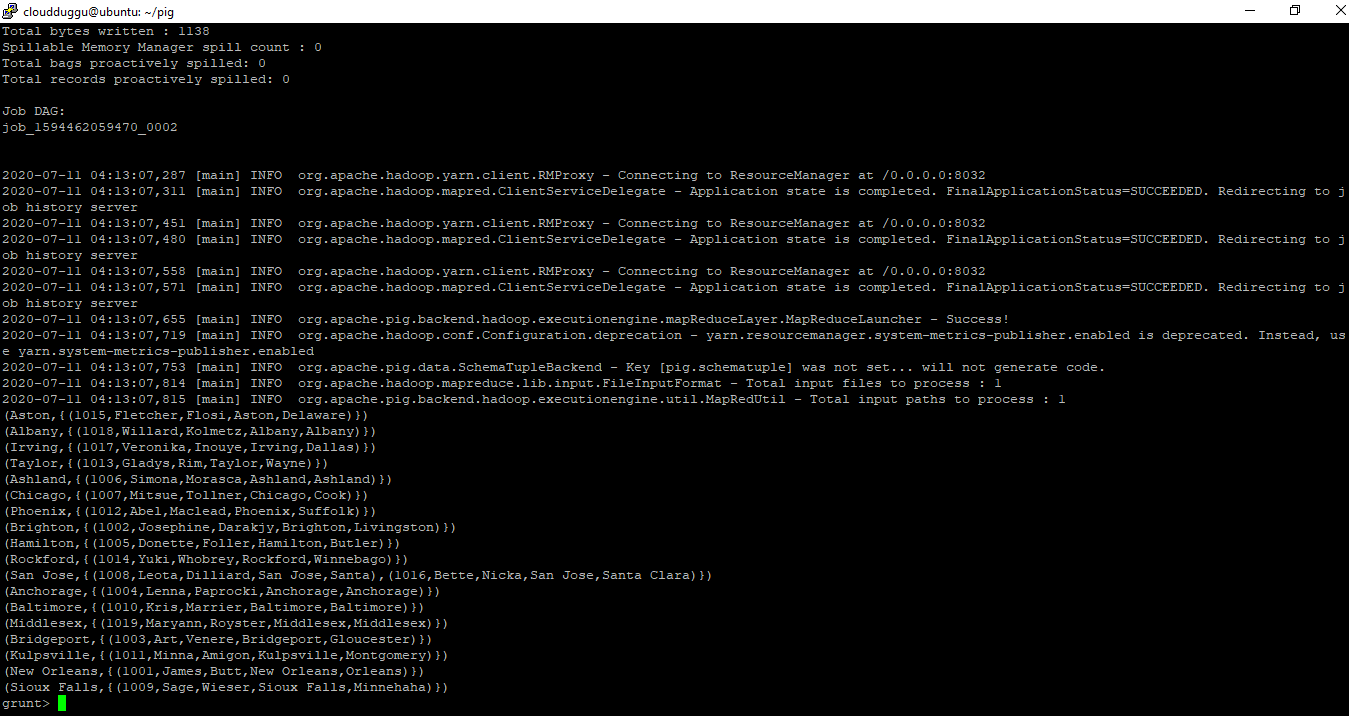

a. GROUP by Single column

Will use the GROUP operator to perform grouping on schema “employees” by city.

After that, we will use the DUMP operator to print the records on the terminal.

Command:

grunt> groupdata = GROUP employees by city;

grunt> DUMP groupdata;

Output:

We can see from the output that two columns are generated.

- The first column is a city that we used to group the data.

- The second column is showing the city-wise data.

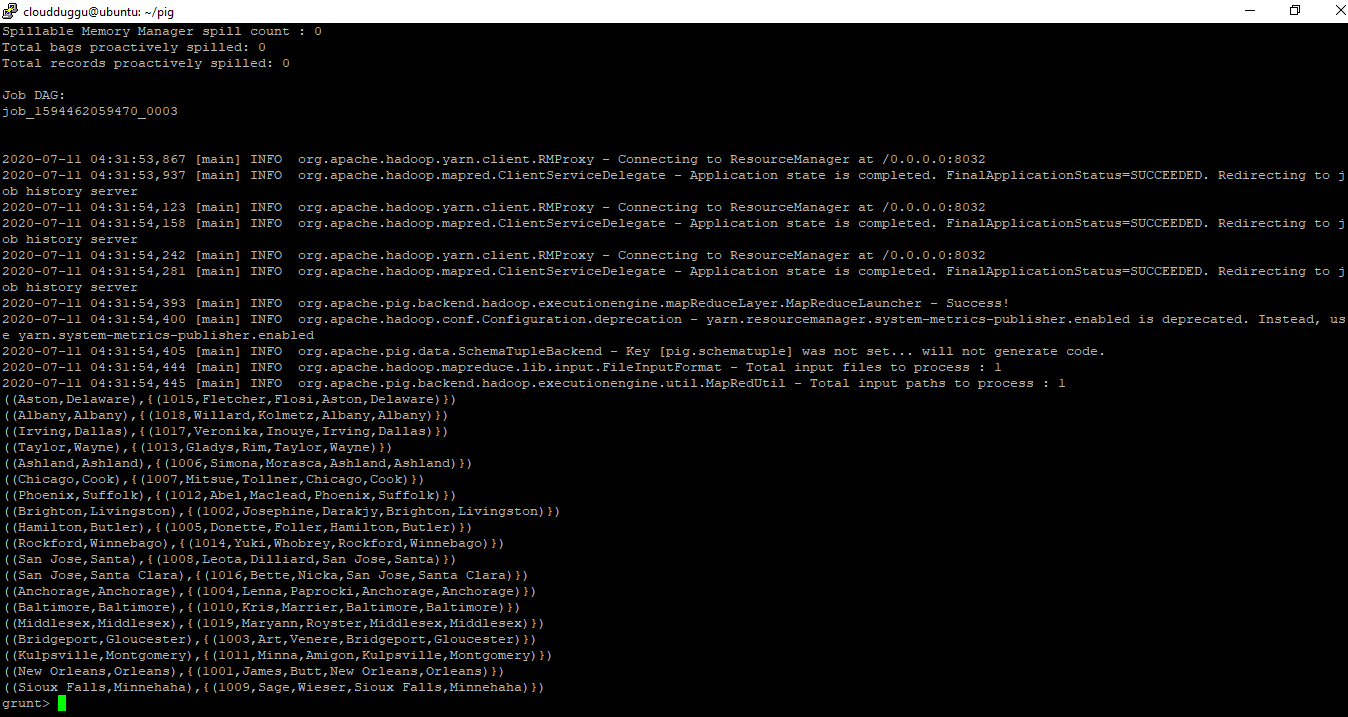

b. GROUP by Multi-Column

We will use the GROUP operator to perform grouping on relation “employees” by city and county.

After that, we will use the DUMP operator to print the records on the terminal.

Command:

grunt> groupdata = GROUP employees by (city,county);

grunt> DUMP groupdata;

Output:

2. COGROUP Operator

2. COGROUP Operator

COGROUP operators are similar to the GROUP operator in which the GRPOUP operators works on one relation on the other hand the COGROUP operators work on the two and more relations.

Syntax:

grunt> alias = COGROUP alias { ALL | BY exp} [, alias ALL | BY exp …] [USING 'collected' | 'merge'] [PARTITION BY partit] [PARALLEL n];

To perform COGROUP operation we have taken two datasets “employee.txt” and “department.txt”. We will put both files in HDFS location “/pigexample/”.

Content of “employee.txt”:

1001,James,Butt,New Orleans,Orleans

1002,Josephine,Darakjy,Brighton,Livingston

1003,Art,Venere,Bridgeport,Gloucester

1004,Lenna,Paprocki,Anchorage,Anchorage

1005,Donette,Foller,Hamilton,Butler

1006,Simona,Morasca,Ashland,Ashland

1007,Mitsue,Tollner,Chicago,Cook

1008,Leota,Dilliard,San Jose,Santa

1009,Sage,Wieser,Sioux Falls,Minnehaha

Content of “department.txt”:

1001,James,Butt,New Orleans,Orleans

1002,Josephine,Darakjy,Brighton,Livingston

1003,Art,Venere,Bridgeport,Gloucester

1004,Lenna,Paprocki,Anchorage,Anchorage

1005,Donette,Foller,Hamilton,Butler

1006,Simona,Morasca,Ashland,Ashland

1007,Mitsue,Tollner,Chicago,Cook

1008,Leota,Dilliard,San Jose,Santa

1009,Sage,Wieser,Sioux Falls,Minnehaha

We will load both data files from the local filesystem into HDFS “/pigexample/” using the below commands.

Command:

$hadoop fs -copyFromLocal /home/cloudduggu/pig/tutorial/employee.txt /pigexample/

$hadoop fs -copyFromLocal /home/cloudduggu/pig/tutorial/department.txt /pigexample/

Now we will create relations for both files and load data from HDFS to Pig.

Command:

grunt> empdata = LOAD '/pigexample/employee.txt' USING PigStorage(',') as (empid:int,firstname:chararray,lastname:chararray,city:chararray,county:chararray );

grunt> deptdata = LOAD '/pigexample/department.txt' USING PigStorage(',') as (empid:int,firstname:chararray,lastname:chararray,state:chararray,zip:int );

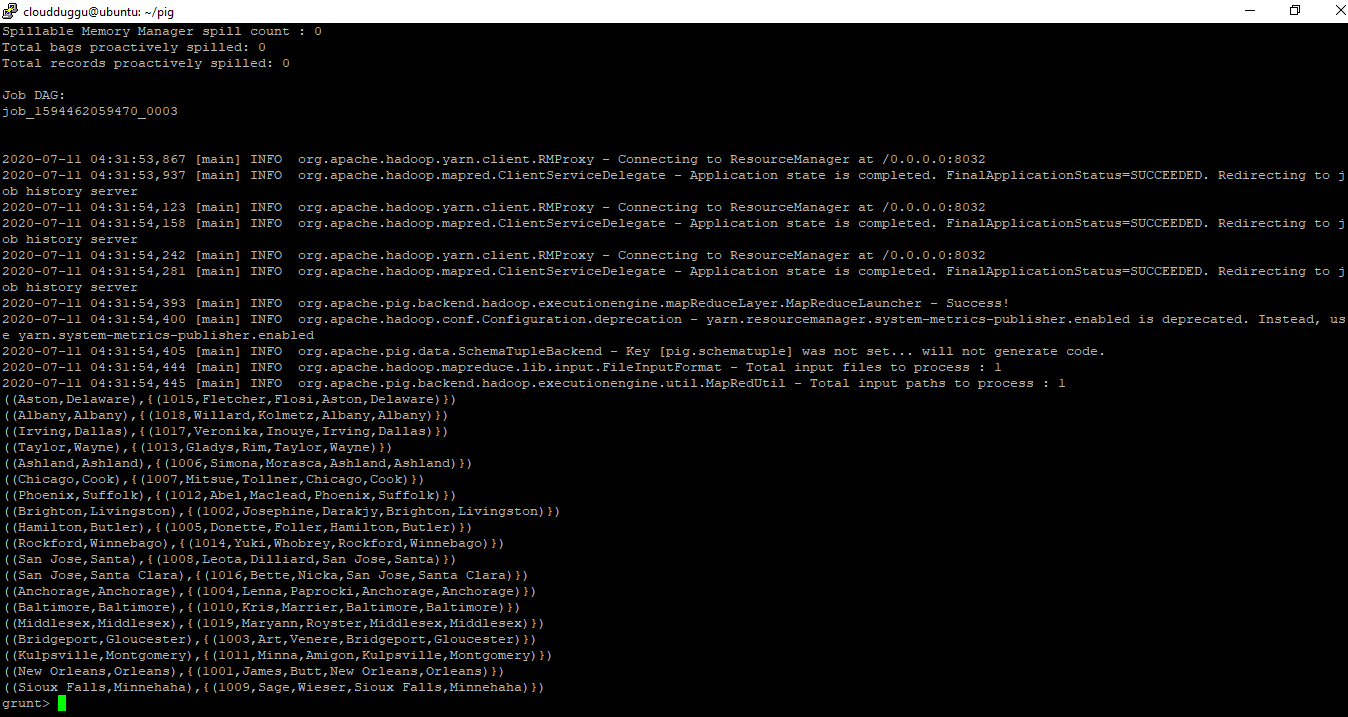

We will group the records/tuples of the relation “empdata” and “deptdata” using city and state and print the result on the terminal using the DUMP operator.

Command:

grunt> cogroupdata = COGROUP empdata by city, deptdata by state;

grunt> DUMP cogroupdata;

Output:

COGROUP operator will group the tuples from each relation according to city and state and print the result.

3. JOIN Operator

The JOIN operators are used to JOIN two or more relations to get a desirable result. To perform the JOIN operation we declare one or more tuples from each relation.

Pig supports the below list of JOIN operators.

Let us see each JOIN operator in detail.

To perform the JOIN operation we have taken two datasets “employee.txt” and “invoice.txt”. We will put both files in HDFS location “/pigexample/” from the local file system.

Content of “employee.txt”:

1001,James,Butt,New Orleans,Orleans

1002,Josephine,Darakjy,Brighton,Livingston

1003,Art,Venere,Bridgeport,Gloucester

1004,Lenna,Paprocki,Anchorage,Anchorage

1005,Donette,Foller,Hamilton,Butler

1006,Simona,Morasca,Ashland,Ashland

1007,Mitsue,Tollner,Chicago,Cook

1008,Leota,Dilliard,San Jose,Santa

1009,Sage,Wieser,Sioux Falls,Minnehaha

Content of “invoice.txt”:

1002,2019-10-08 00:00:00,23000

1001,2012-10-08 00:00:00,23500

1001,2012-11-20 00:00:00,13260

1002,2011-05-20 00:00:00,56030

1002,2011-10-08 00:00:00,34200

1001,2010-10-08 00:00:00,32300

1005,2001-11-20 00:00:00,12360

1006,2001-05-20 00:00:00,32450

We will load both data files from the local filesystem into HDFS “/pigexample/” using the below commands.

Command:

$hadoop fs -copyFromLocal /home/cloudduggu/pig/tutorial/employee.txt /pigexample/

$hadoop fs -copyFromLocal /home/cloudduggu/pig/tutorial/invoice.txt /pigexample/

Now we will create relations for both files and load data from HDFS to Pig.

Command:

grunt> empdata = LOAD '/pigexample/employee.txt' USING PigStorage(',') as (empid:int,firstname:chararray,lastname:chararray,city:chararray,county:chararray );

grunt> invdata = LOAD '/pigexample/invoice.txt' USING PigStorage(',') as (custid:int,data:chararray,amount:int );

Now we are good to perform JOIN operations on these two datasets.

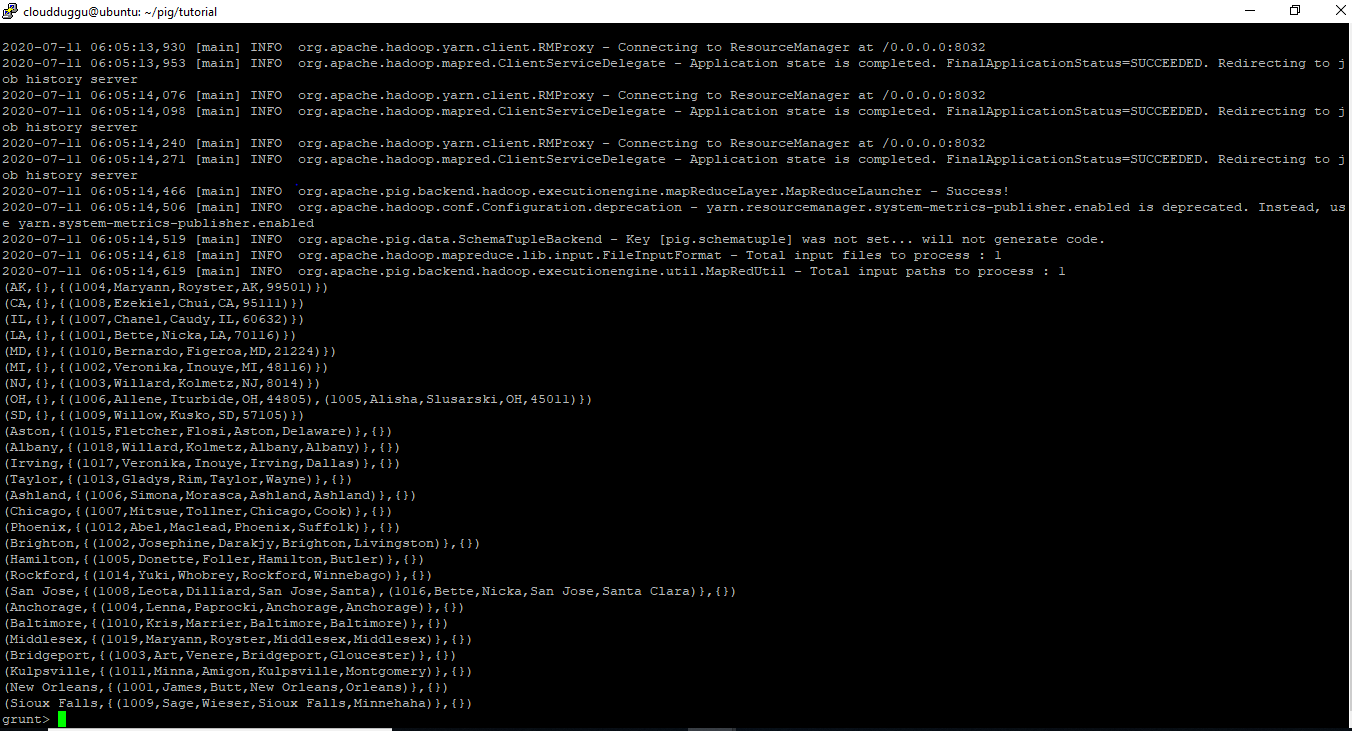

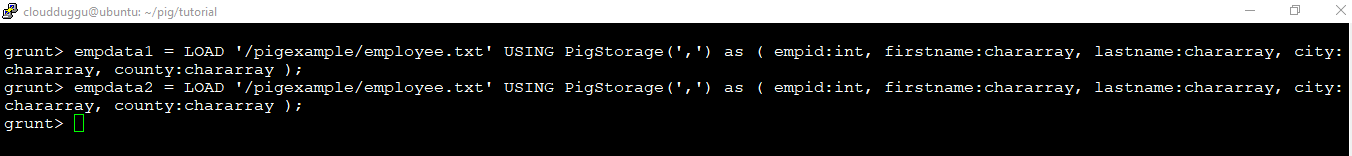

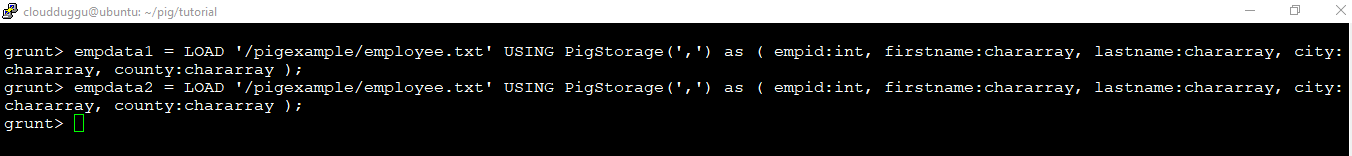

3.1 Self-Join

Self-JOIN performs the joining on a single table by creating multiple aliases. To perform Self-JOIN, we will load “employee.txt” data two times in different aliases.

Syntax:

grunt> alias = JOIN alias BY {expression|'('exp [, exp …]')'} (, alias BY {expression|'('exp [, exp …]')'} …) [USING 'replicated' | 'bloom' | 'skewed' | 'merge' | 'merge-sparse'] [PARTITION BY partit] [PARALLEL n];

Command:

grunt> empdata1 = LOAD '/pigexample/employee.txt' USING PigStorage(',') as (empid:int,firstname:chararray,lastname:chararray,city:chararray,county:chararray );

grunt> empdata2 = LOAD '/pigexample/employee.txt' USING PigStorage(',') as (empid:int,firstname:chararray,lastname:chararray,city:chararray,county:chararray );

Output:

grunt> empdata2 = LOAD '/pigexample/employee.txt' USING PigStorage(',') as (empid:int,firstname:chararray,lastname:chararray,city:chararray,county:chararray );

Output:

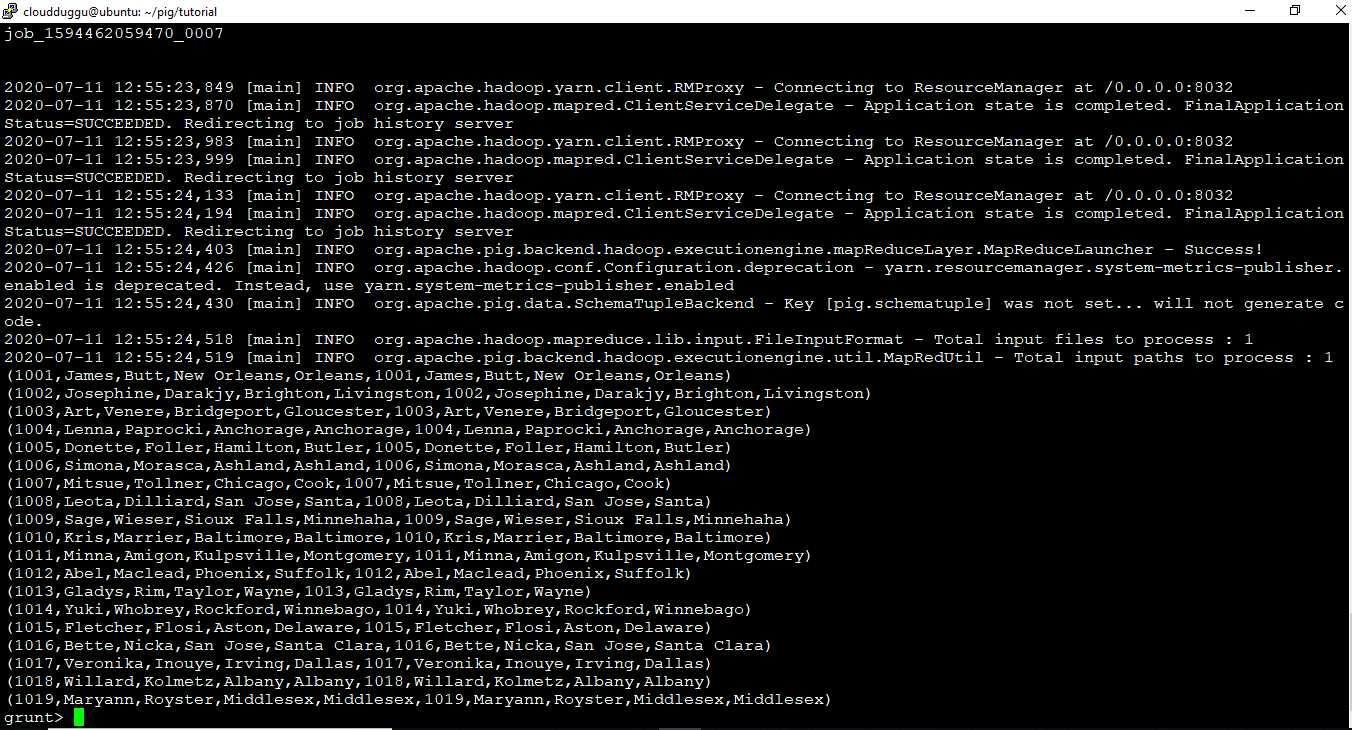

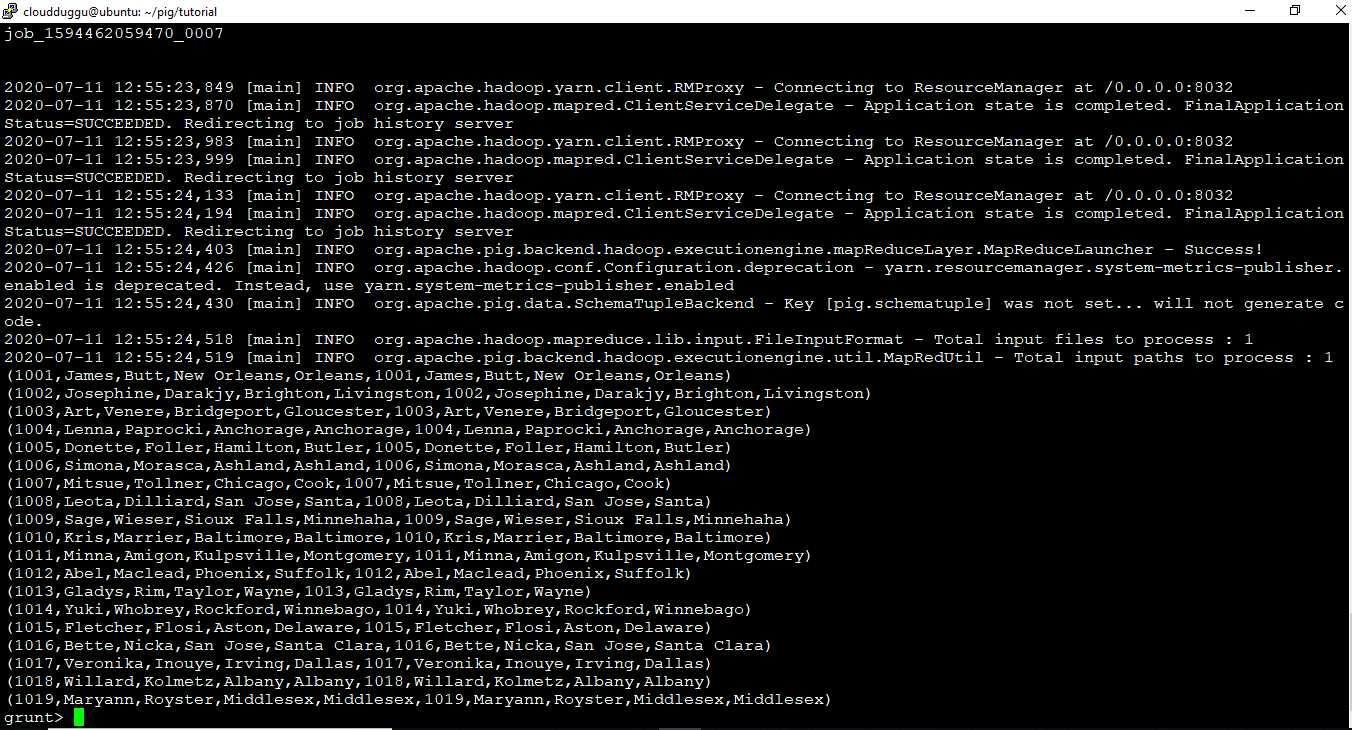

Now we will perform the Self-JOIN operation by joining two relations (empdata1 and empdata2) and print the output on the terminal using the DUMP operator.

Command:

grunt> empdata3 = JOIN empdata1 BY empid, empdata2 BY empid;

grunt> DUMP empdata3;

Output:

3.2 Inner-Join

3.2 Inner-Join

Inner-JOIN or Equi-JOIN is used to perform the joining of two or more relations based on common field values. Inner-JOIN ignores null keys.

Syntax:

grunt> alias = JOIN alias BY {expression|'('expression [, expression …]')'} (, alias BY {expression|'('expression [, expression …]')'} …) [USING 'replicated' | 'bloom' | 'skewed' | 'merge' | 'merge-sparse'] [PARTITION BY partitioner] [PARALLEL n];

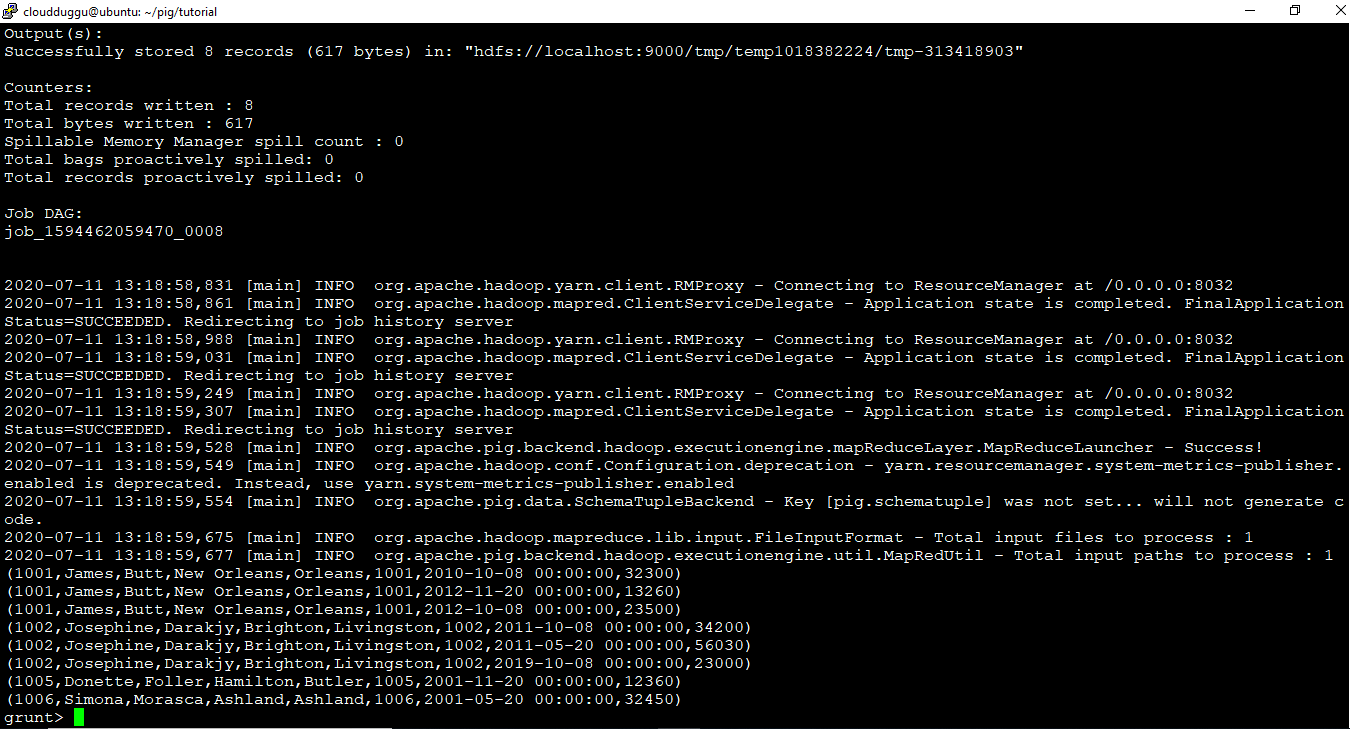

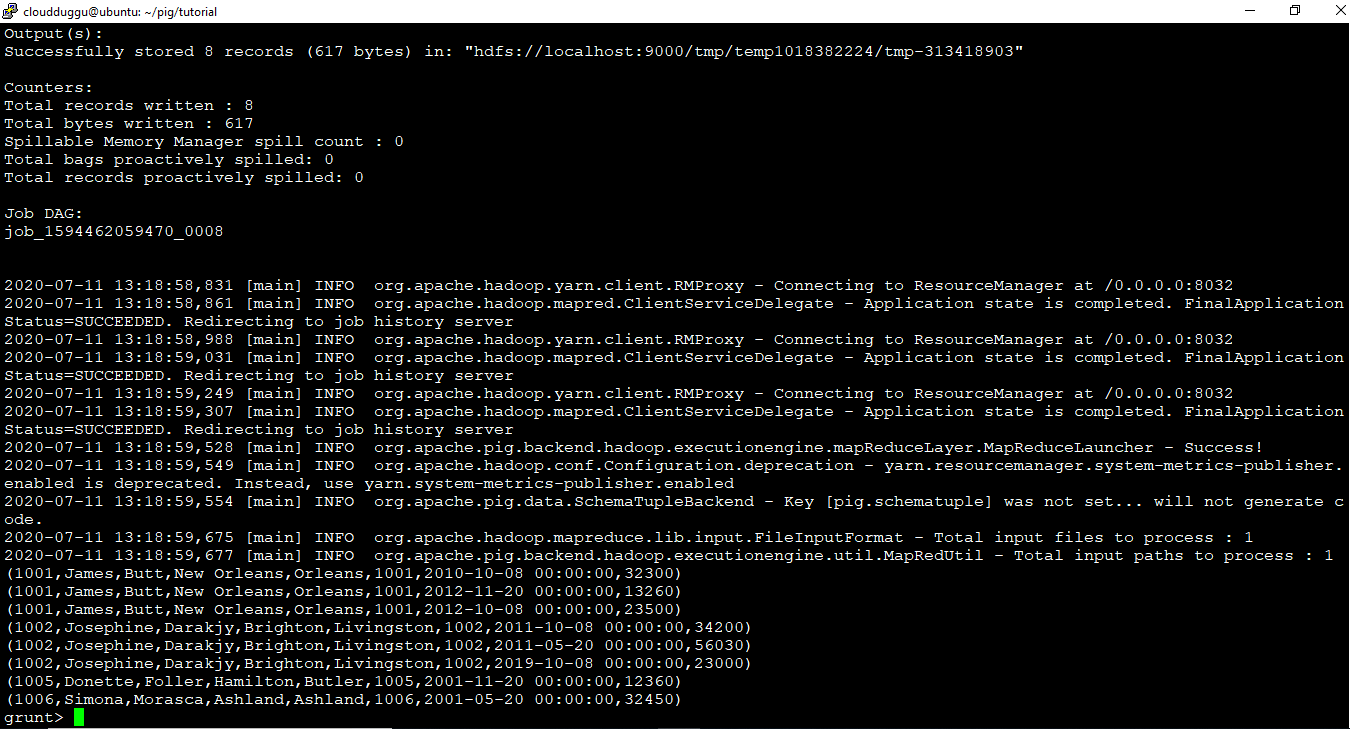

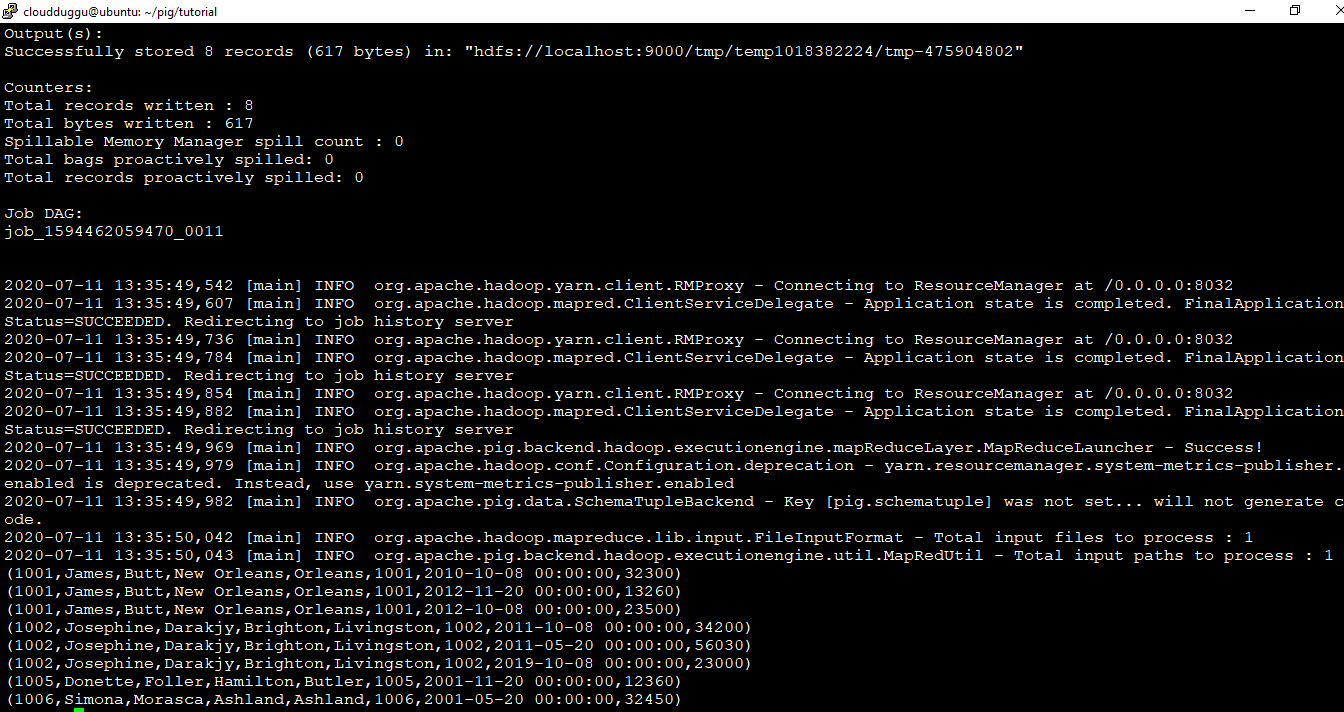

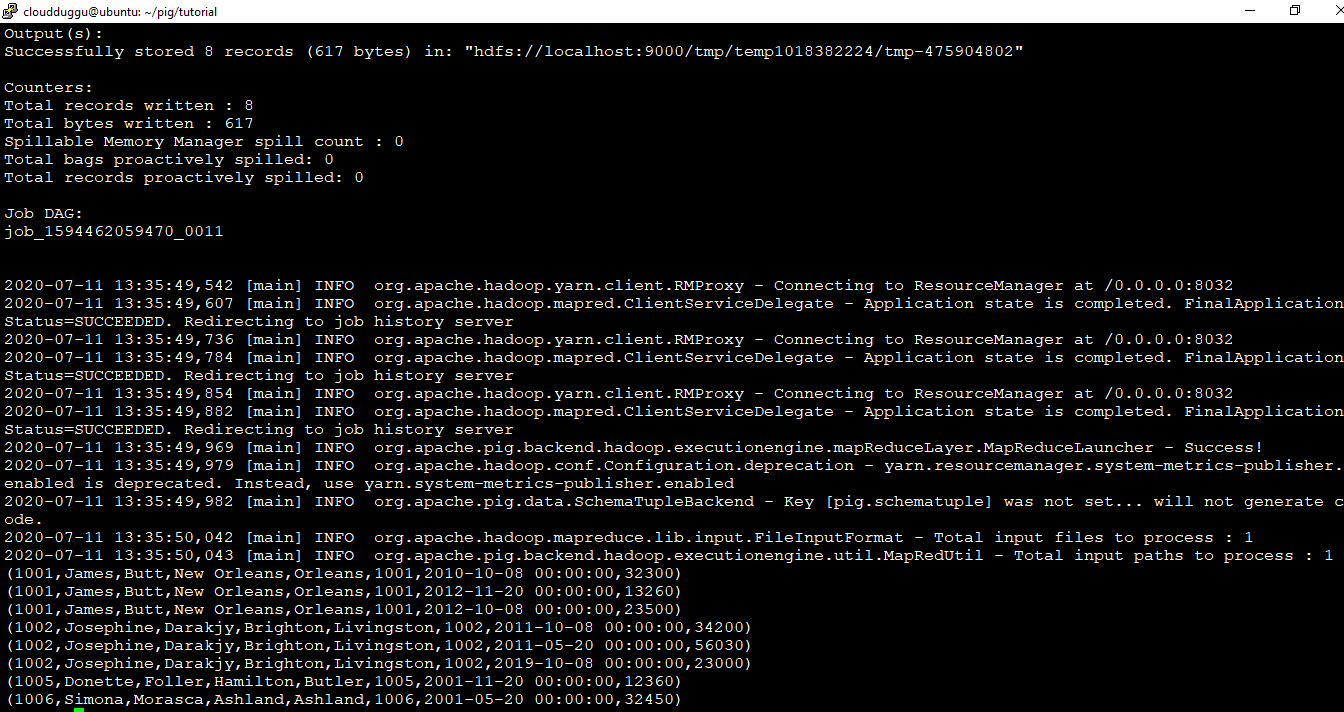

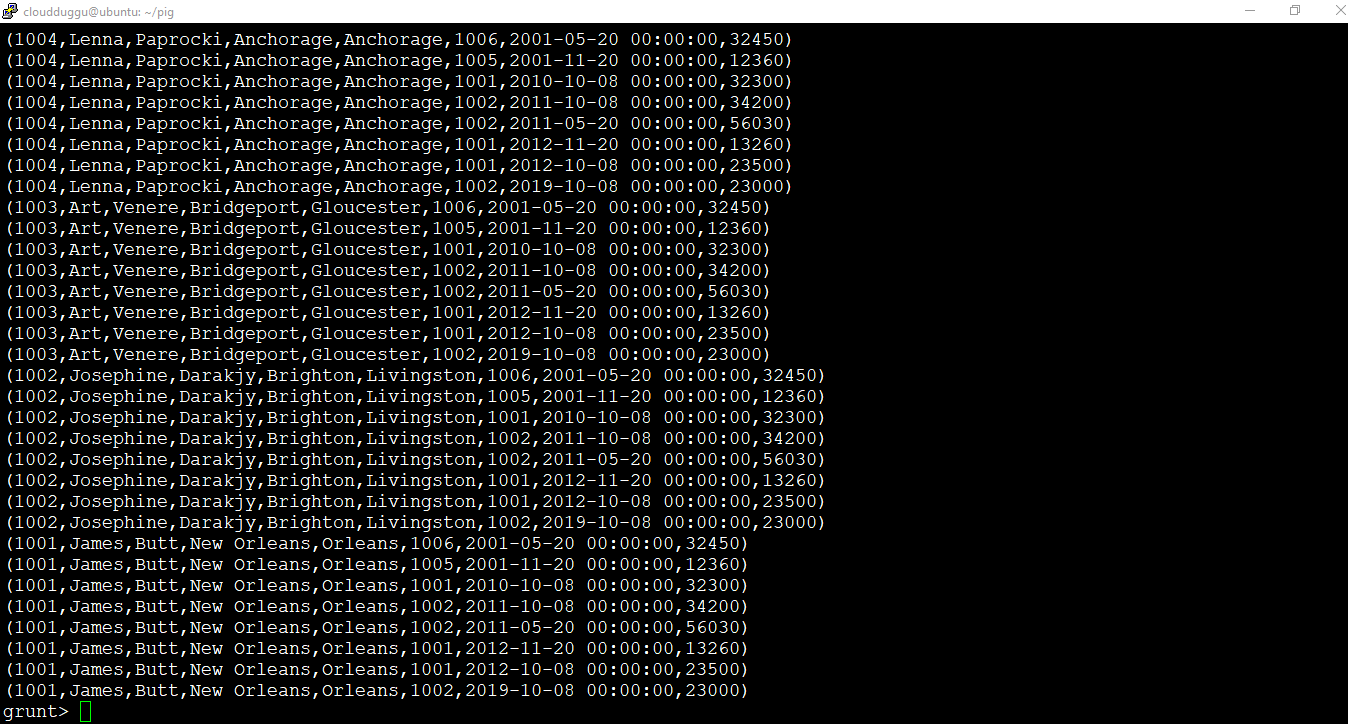

Now we will perform Inner-JOIN operation on two relations “empdata” and “invdata” and we will print output on-screen using the DUMP operator.

Command:

grunt> empdata = LOAD '/pigexample/employee.txt' USING PigStorage(',') as (empid:int,firstname:chararray,lastname:chararray,city:chararray,county:chararray );

grunt> invdata = LOAD '/pigexample/invoice.txt' USING PigStorage(',') as (custid:int,data:chararray,amount:int );

grunt> empinvoice = JOIN empdata BY empid, invdata BY custid;

grunt> DUMP empinvoice;

Output:

3.3 Left Outer-Join

3.3 Left Outer-Join

Left Outer-Join is used to fetch the records from the left relation even if there are no matches in the right relation.

Syntax:

grunt> alias = JOIN left-alias BY left-alias-column [LEFT|RIGHT|FULL] [OUTER], right-alias BY right-alias-column [USING 'replicated' | 'bloom' | 'skewed' | 'merge'] [PARTITION BY partitioner] [PARALLEL n];

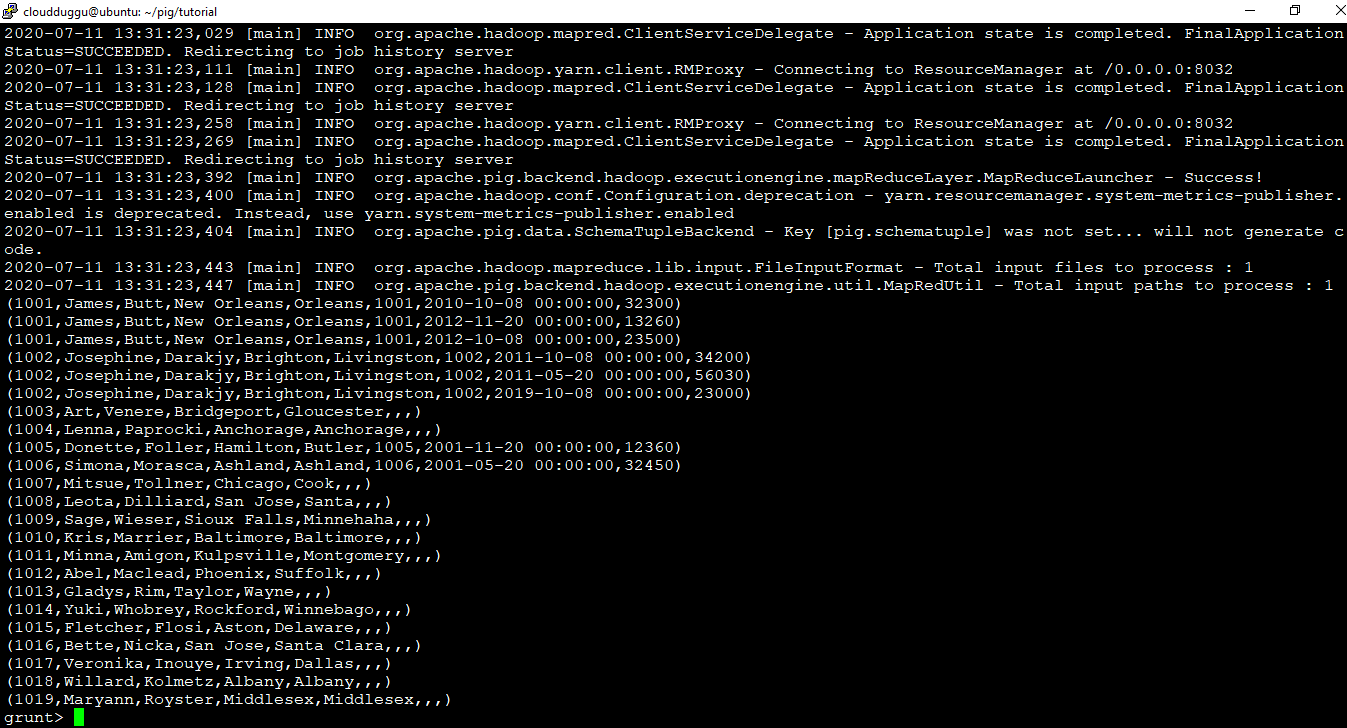

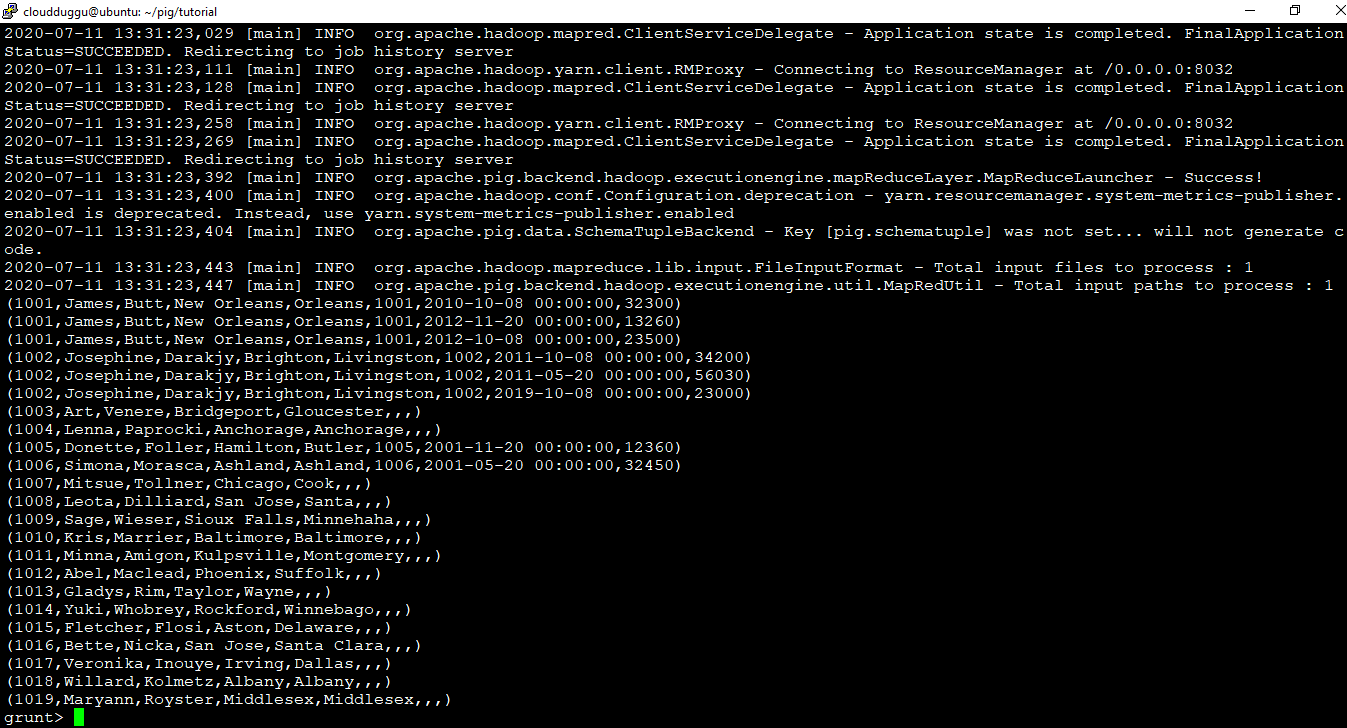

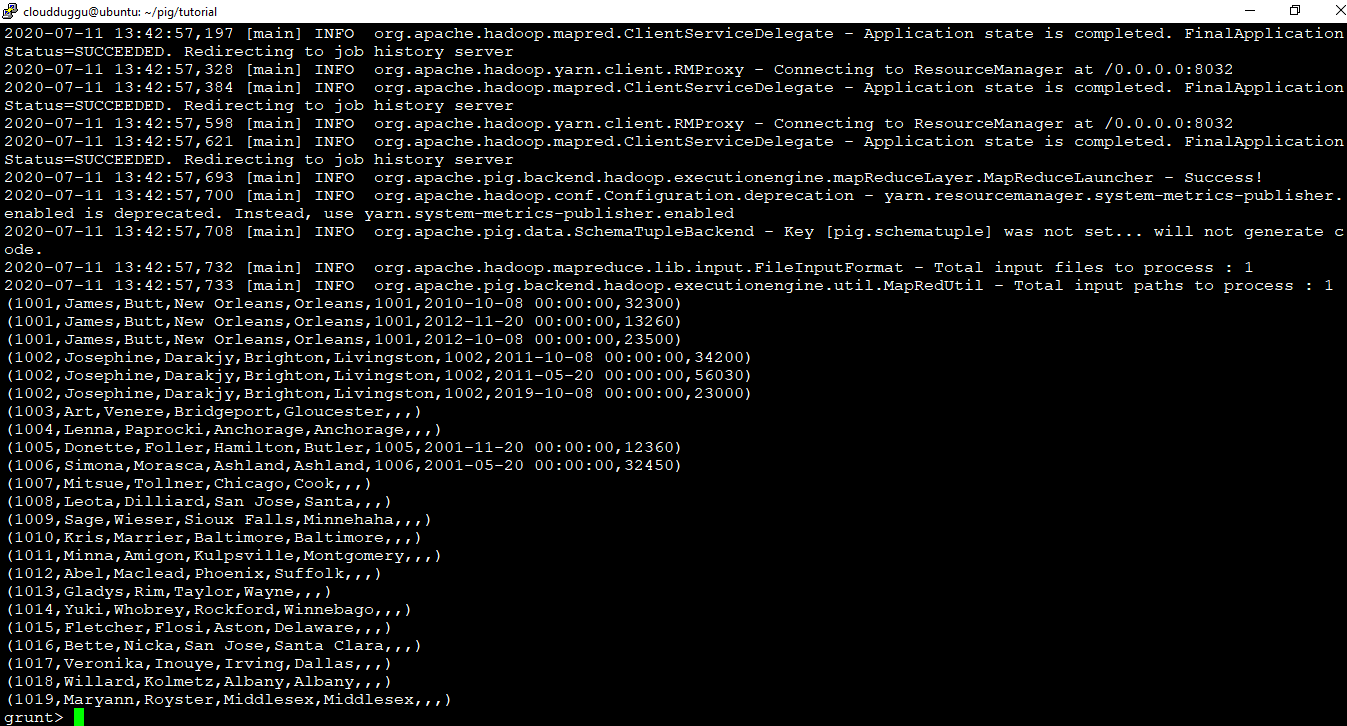

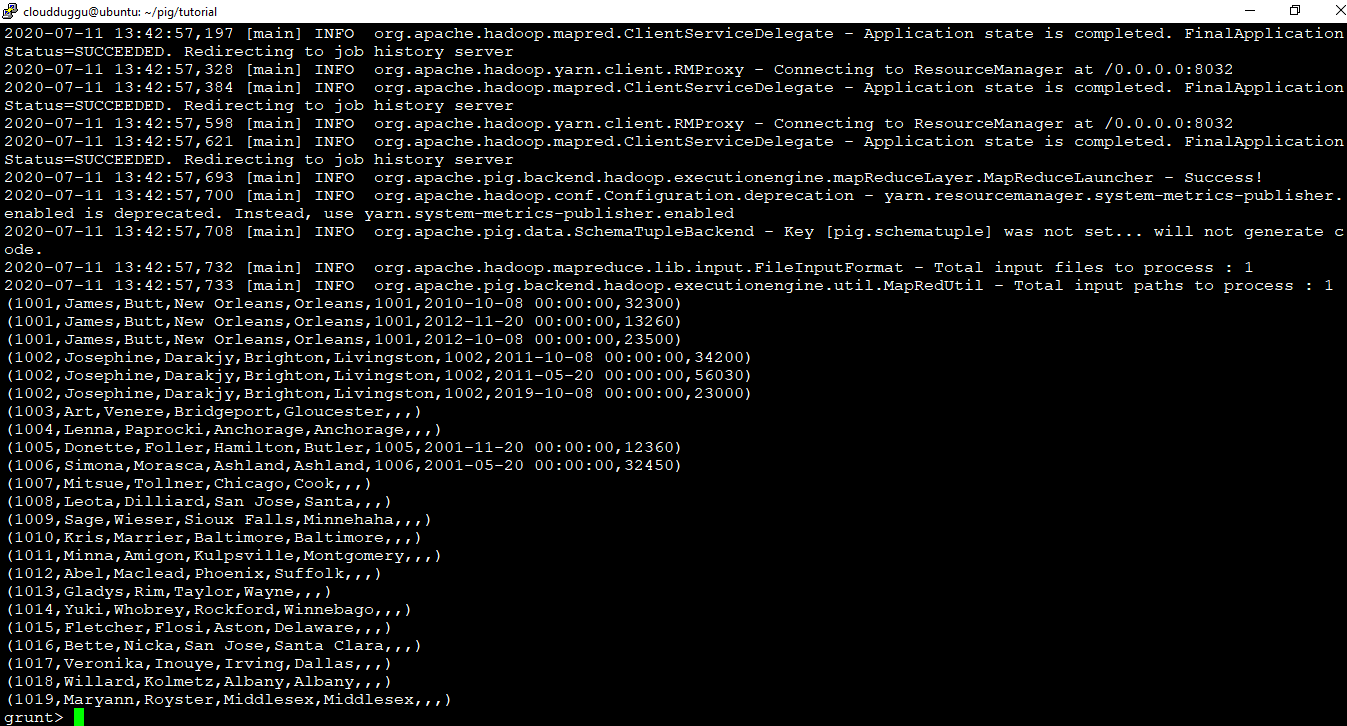

Now we will perform the Left Outer-Join operation on two relations “empdata” and “invdata” and we will print output on-screen using the DUMP operator.

Command:

grunt> leftouterexample = JOIN empdata BY empid LEFT OUTER, invdata BY custid;

grunt> DUMP leftouterexample;

Output:

3.4 Right Outer-Join

3.4 Right Outer-Join

Right Outer-Join is used to fetch the records from the right relation even if there are no matches in the left relation.

Syntax:

grunt> alias = JOIN left-alias BY left-alias-column [LEFT|RIGHT|FULL] [OUTER], right-alias BY right-alias-column [USING 'replicated' | 'bloom' | 'skewed' | 'merge'] [PARTITION BY partitioner] [PARALLEL n];

Now we will perform the Right Outer-Join operation on two relations “empdata” and “invdata” and we will print output on-screen using the DUMP operator.

Command:

grunt> rightouterexample = JOIN empdata BY empid RIGHT OUTER, invdata BY custid;

grunt> DUMP rightouterexample;

Output:

3.5 Full Outer-Join

3.5 Full Outer-Join

Left Outer-Join is used to fetch the records when there is a match in any relation.

Syntax:

grunt> alias = JOIN left-alias BY left-alias-column [LEFT|RIGHT|FULL] [OUTER], right-alias BY right-alias-column [USING 'replicated' | 'bloom' | 'skewed' | 'merge'] [PARTITION BY partitioner] [PARALLEL n];

Now we will perform Full Outer-Join operation on two relations “empdata” and “invdata” and we will print output on-screen using the DUMP operator.

Command:

grunt> fullouterexample = JOIN empdata BY empid FULL OUTER, invdata BY custid;

grunt> DUMP fullouterexample;

Output:

4. CROSS Operator

4. CROSS Operator

CROSS operator is used to calculating cross-product(Cartesian product) of the two or more relations.

Syntax:

grunt> alias = CROSS alias, alias [, alias …] [PARTITION BY partitioner] [PARALLEL n];

Command:

grunt> crossjoinexample = CROSS empdata , invdata;

grunt> DUMP crossjoinexample;

Output:

grunt> DUMP crossjoinexample;