Apache Flume Data Flow Model

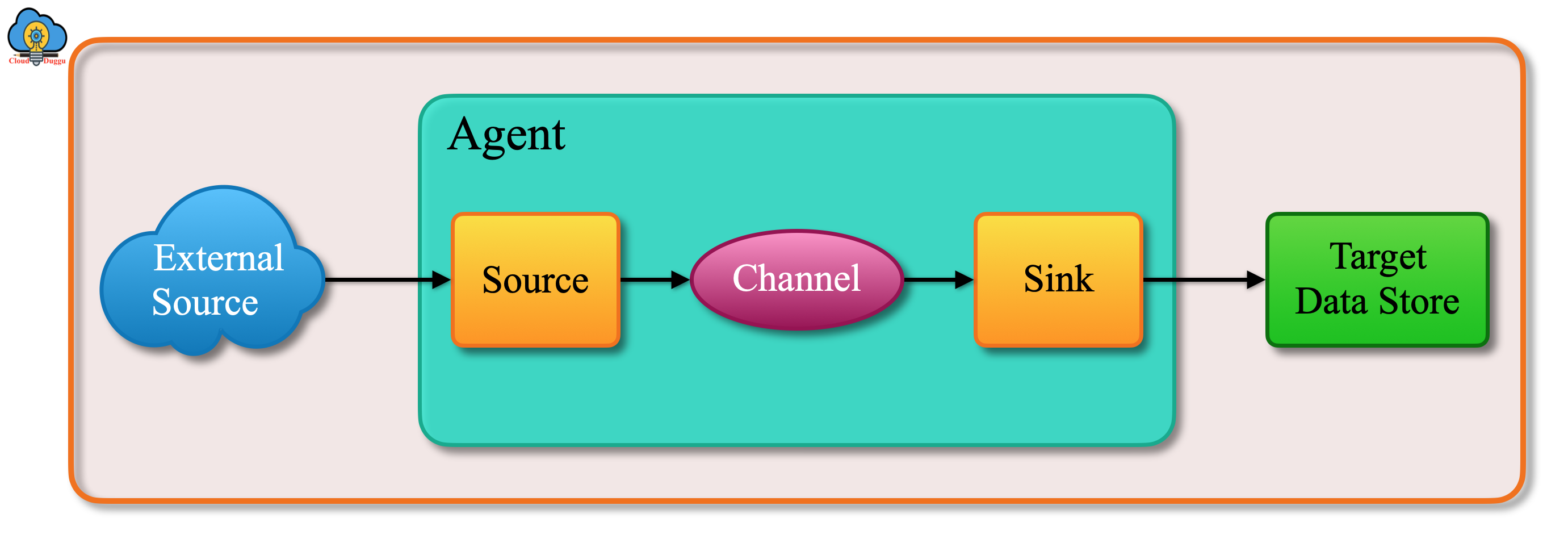

Data flow in Apache Flume starts with a client. The client sends an event toward its destination system, and a Flume source receives it. The source stores the event in one or more channels. The event stays in the channel until a Flume sink takes it. The sink removes the event from the channel and writes it to a target data store such as HDFS (via the Flume HDFS sink).
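As a rough illustration of the source, channel, and sink chain described above, a single-agent configuration might look like the sketch below. The agent name `a1`, the component names `r1`, `c1`, and `k1`, the netcat source, the port, and the HDFS path are all assumptions chosen for the example, not values from this article.

```properties
# Hypothetical agent "a1" with one source, one channel, and one sink
a1.sources = r1
a1.channels = c1
a1.sinks = k1

# Source: receives events from a client (netcat is used here only as an example)
a1.sources.r1.type = netcat
a1.sources.r1.bind = localhost
a1.sources.r1.port = 44444
a1.sources.r1.channels = c1

# Channel: holds events until the sink takes them
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Sink: removes events from the channel and writes them to HDFS
# (the path below is a placeholder)
a1.sinks.k1.type = hdfs
a1.sinks.k1.hdfs.path = hdfs://namenode.example.com/flume/events
a1.sinks.k1.channel = c1
```

Note that a source lists its channels with the plural `channels` property, while a sink reads from exactly one channel through the singular `channel` property.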

The following diagram shows the data flow of Apache Flume.

Apache Flume Data Flow Types

Let us look at the data flow types that Flume provides.

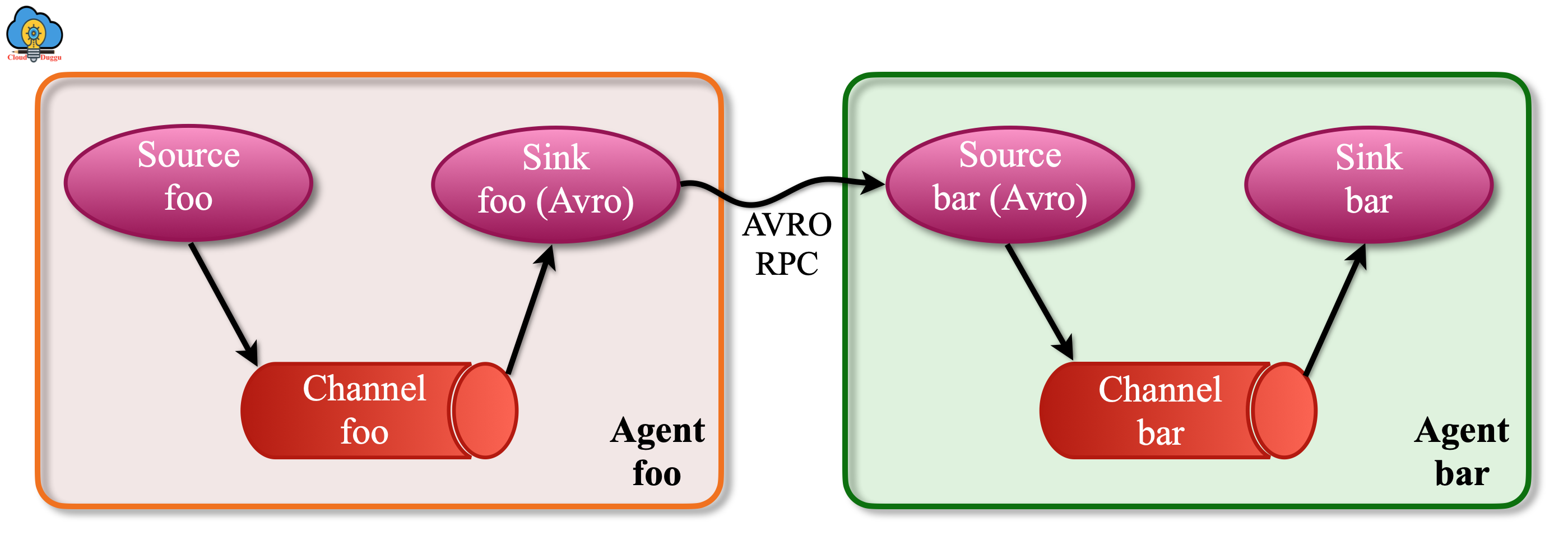

1. Multi-hop flow

In this type of data flow, a user can build multi-hop flows in which events travel through multiple agents before reaching the final destination. In a multi-hop flow, data passes from the sink of the previous hop to the source of the next hop and is stored in the next hop's channel.
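A two-hop flow could be sketched as follows, assuming the hops are linked with an Avro sink and an Avro source, which is the usual way to connect Flume agents. The agent names `agent1` and `agent2`, the host name, and the ports are hypothetical, and each agent would normally have its own configuration file on its own machine.

```properties
# --- First hop: hypothetical agent "agent1" ---
agent1.sources = src1
agent1.channels = ch1
agent1.sinks = avroOut

agent1.sources.src1.type = netcat
agent1.sources.src1.bind = localhost
agent1.sources.src1.port = 44444
agent1.sources.src1.channels = ch1

agent1.channels.ch1.type = memory

# The previous-hop sink sends events to the next-hop source
agent1.sinks.avroOut.type = avro
agent1.sinks.avroOut.hostname = hop2.example.com
agent1.sinks.avroOut.port = 4545
agent1.sinks.avroOut.channel = ch1

# --- Second hop: hypothetical agent "agent2" ---
agent2.sources = avroIn
agent2.channels = ch1
agent2.sinks = out

# The next-hop source listens on the port the first hop's sink targets
agent2.sources.avroIn.type = avro
agent2.sources.avroIn.bind = 0.0.0.0
agent2.sources.avroIn.port = 4545
agent2.sources.avroIn.channels = ch1

# Events received by the source are stored in the next-hop channel
agent2.channels.ch1.type = memory

agent2.sinks.out.type = logger
agent2.sinks.out.channel = ch1
```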

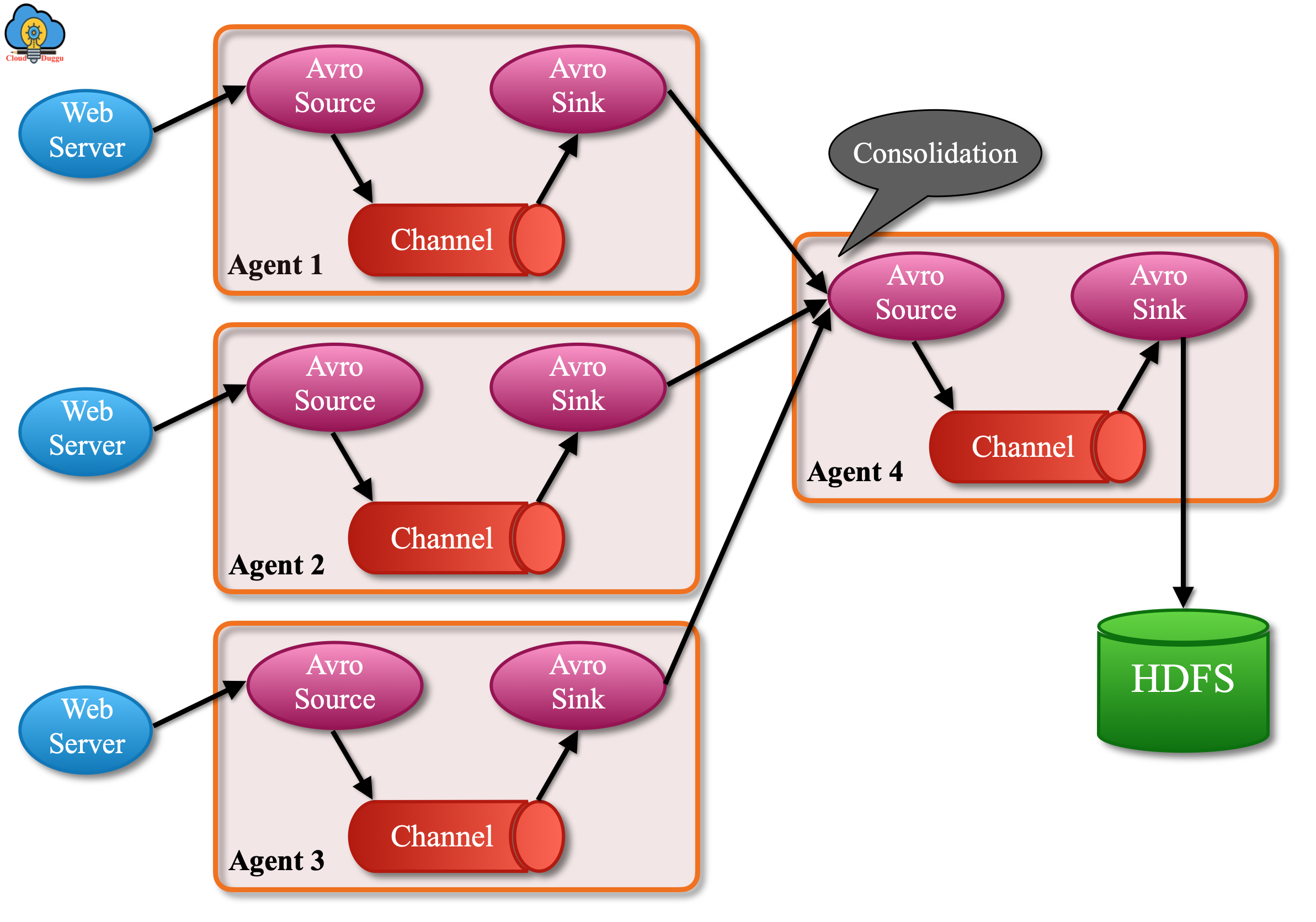

2. Fan-in flow

In this type of data flow, events from multiple sources can travel to their destination using a single channel.

Take the example of a log collection system in which logs are collected from hundreds of web servers and sent to a dozen or so agents that write to the HDFS cluster. Flume can achieve this by configuring several first-tier agents with an Avro sink, all pointing to the Avro source of a single agent. The source on the second-tier agent consolidates the events into a single channel, which the sink drains into the final storage system.
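The consolidation described above might be configured along the lines of the following sketch. The agent names `web1`, `web2`, and `collector`, the host name, the port, and the HDFS path are hypothetical, and the first-tier sources and channels are omitted to keep the focus on the fan-in wiring.

```properties
# --- First tier: each web server agent has an Avro sink aimed at the collector ---
# (sources and channels of web1 and web2 are omitted for brevity)
web1.sinks = toCollector
web1.sinks.toCollector.type = avro
web1.sinks.toCollector.hostname = collector.example.com
web1.sinks.toCollector.port = 4545
web1.sinks.toCollector.channel = ch1

web2.sinks = toCollector
web2.sinks.toCollector.type = avro
web2.sinks.toCollector.hostname = collector.example.com
web2.sinks.toCollector.port = 4545
web2.sinks.toCollector.channel = ch1

# --- Second tier: one Avro source consolidates events into a single channel ---
collector.sources = avroIn
collector.channels = ch1
collector.sinks = toHdfs

collector.sources.avroIn.type = avro
collector.sources.avroIn.bind = 0.0.0.0
collector.sources.avroIn.port = 4545
collector.sources.avroIn.channels = ch1

collector.channels.ch1.type = memory

# The sink drains the single channel into HDFS (placeholder path)
collector.sinks.toHdfs.type = hdfs
collector.sinks.toHdfs.hdfs.path = hdfs://namenode.example.com/flume/weblogs
collector.sinks.toHdfs.channel = ch1
```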

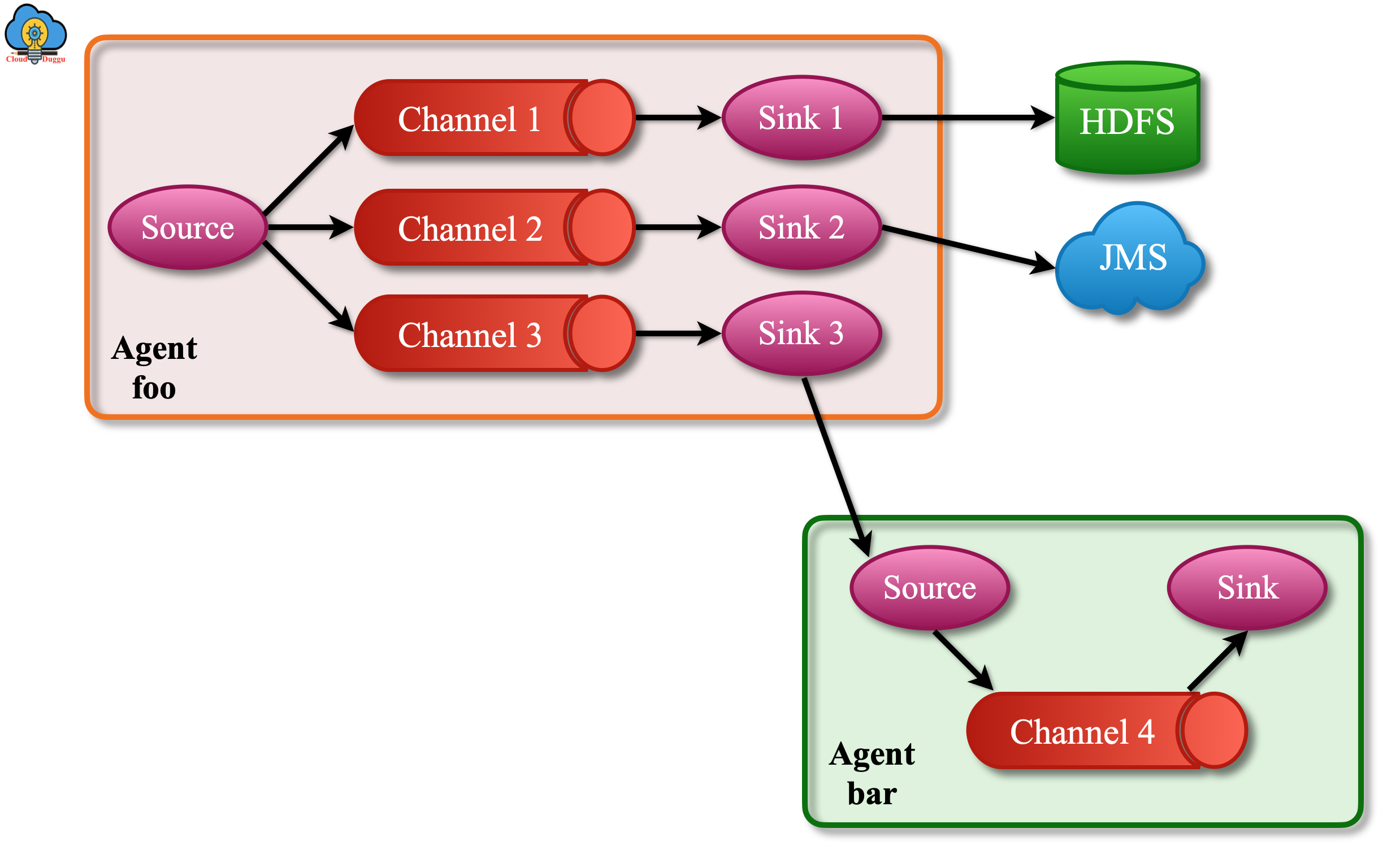

3. Fan-out flow

In this type of data flow, events flow from one source to multiple channels. This is achieved by defining a flow multiplexer that can replicate or selectively route an event to one or more channels.

Fan-out flow provides two modes of data flow.

- Replicating: In this mode, event data is replicated to all channels.

- Multiplexing: In this mode, event data will be sent to selected channels when an event’s attribute matches a preconfigured value. The mapping can be set in the agent’s configuration file.
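Both modes are selected through the source's channel selector. The sketch below shows a multiplexing setup and, in a comment, the replicating alternative. The agent name `a1`, the header name `datacenter`, and its values `east` and `west` are hypothetical; the example also assumes that the client or an interceptor sets that header on each event.

```properties
# Hypothetical agent "a1": one source fanning out to two channels
a1.sources = r1
a1.channels = c1 c2
a1.sinks = k1 k2

a1.sources.r1.type = avro
a1.sources.r1.bind = 0.0.0.0
a1.sources.r1.port = 4545
a1.sources.r1.channels = c1 c2

# Multiplexing: route each event by the value of its "datacenter" header
a1.sources.r1.selector.type = multiplexing
a1.sources.r1.selector.header = datacenter
a1.sources.r1.selector.mapping.east = c1
a1.sources.r1.selector.mapping.west = c2
# Events whose header matches no mapping go to the default channel
a1.sources.r1.selector.default = c1

# Replicating: to copy every event to all channels instead, replace the
# selector lines above with the single line below
# a1.sources.r1.selector.type = replicating

a1.channels.c1.type = memory
a1.channels.c2.type = memory

a1.sinks.k1.type = logger
a1.sinks.k1.channel = c1
a1.sinks.k2.type = logger
a1.sinks.k2.channel = c2
```

If no selector type is configured, Flume falls back to the replicating selector, so a source listing several channels copies each event to all of them by default.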