Apache Spark RDD Operations

Apache Spark RDD supports two types of operations: “Transformations” and “Actions”. A transformation creates a new RDD from an existing RDD by applying a transformation function. An action is called when we want to compute something from that RDD, and it returns the result.

In this tutorial, we will learn what RDD transformations and actions are and walk through their most common methods with examples.

Spark RDD provides two types of operations: Transformations and Actions.

Let us understand each operation in detail.

1. Transformations

RDD transformations are methods applied to an existing RDD to create a new RDD. RDDs are immutable, so a transformation never changes its input; it returns a new RDD instead. All transformations in Spark are lazy: when a transformation such as map(), filter(), or flatMap() is applied, Spark only records it and computes nothing. The recorded transformations run only when an action such as collect(), take(), or foreach() is invoked.
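The lazy behaviour described above can be sketched in spark-shell as follows. This is a minimal illustration, assuming `sc` is the SparkContext that spark-shell creates; the sample data and variable names are made up for the example.

```scala
// `sc` is the SparkContext provided by spark-shell (assumption).
val numbers = sc.parallelize(1 to 5)

// Transformations: Spark only records them, no job runs yet.
val doubled = numbers.map(_ * 2)
val large = doubled.filter(_ > 4)

// Action: this call triggers the actual computation.
val lazyResult = large.collect()   // Array(6, 8, 10)
```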

There are two types of Transformations.

A. Narrow Transformations

B. Wide Transformations

Let us understand each type in detail.

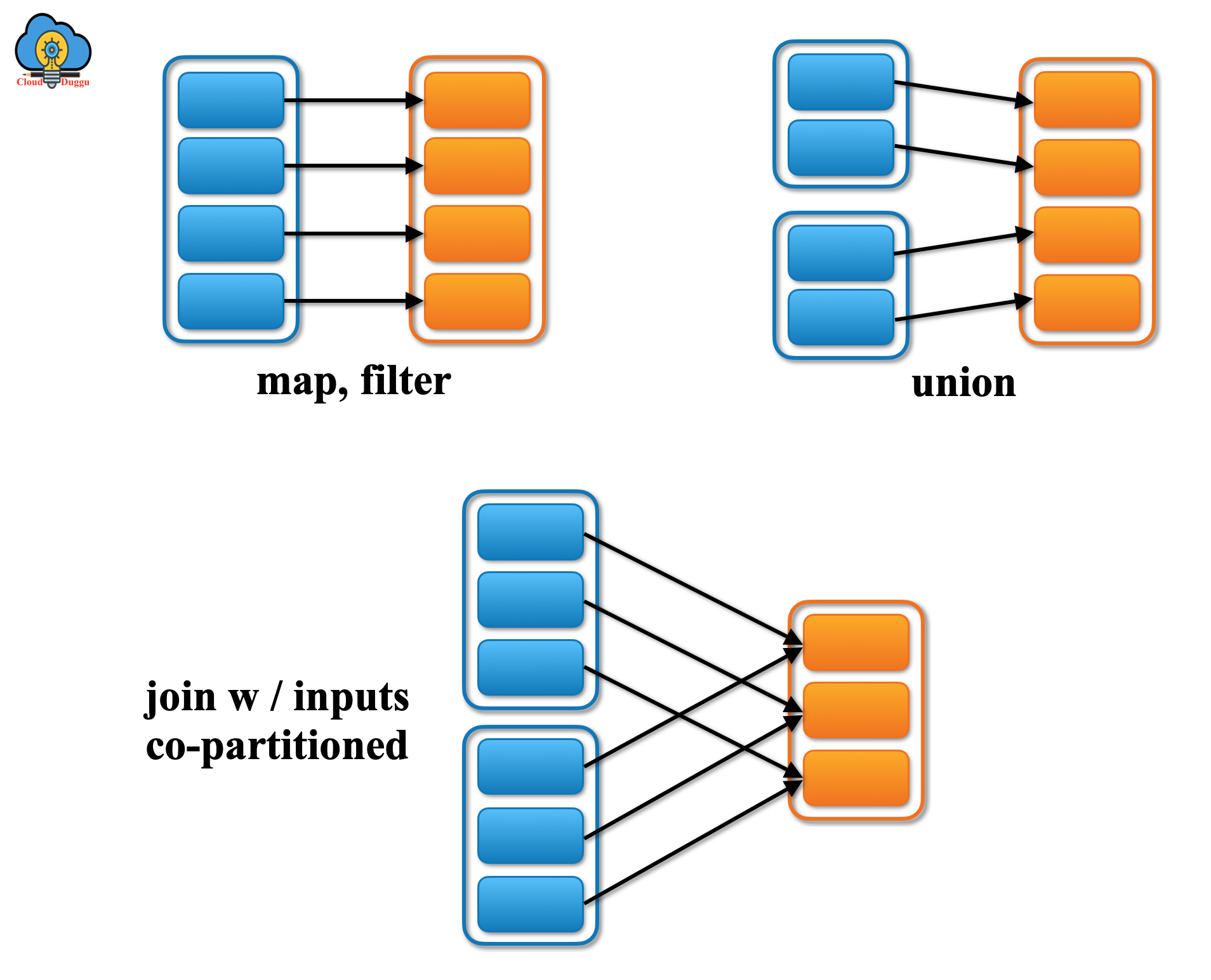

A. Narrow Transformations

In narrow transformations, each partition of the parent RDD is used by at most one partition of the child RDD, so no data needs to be shuffled between partitions. Methods such as map(), filter(), and union() produce narrow transformations.

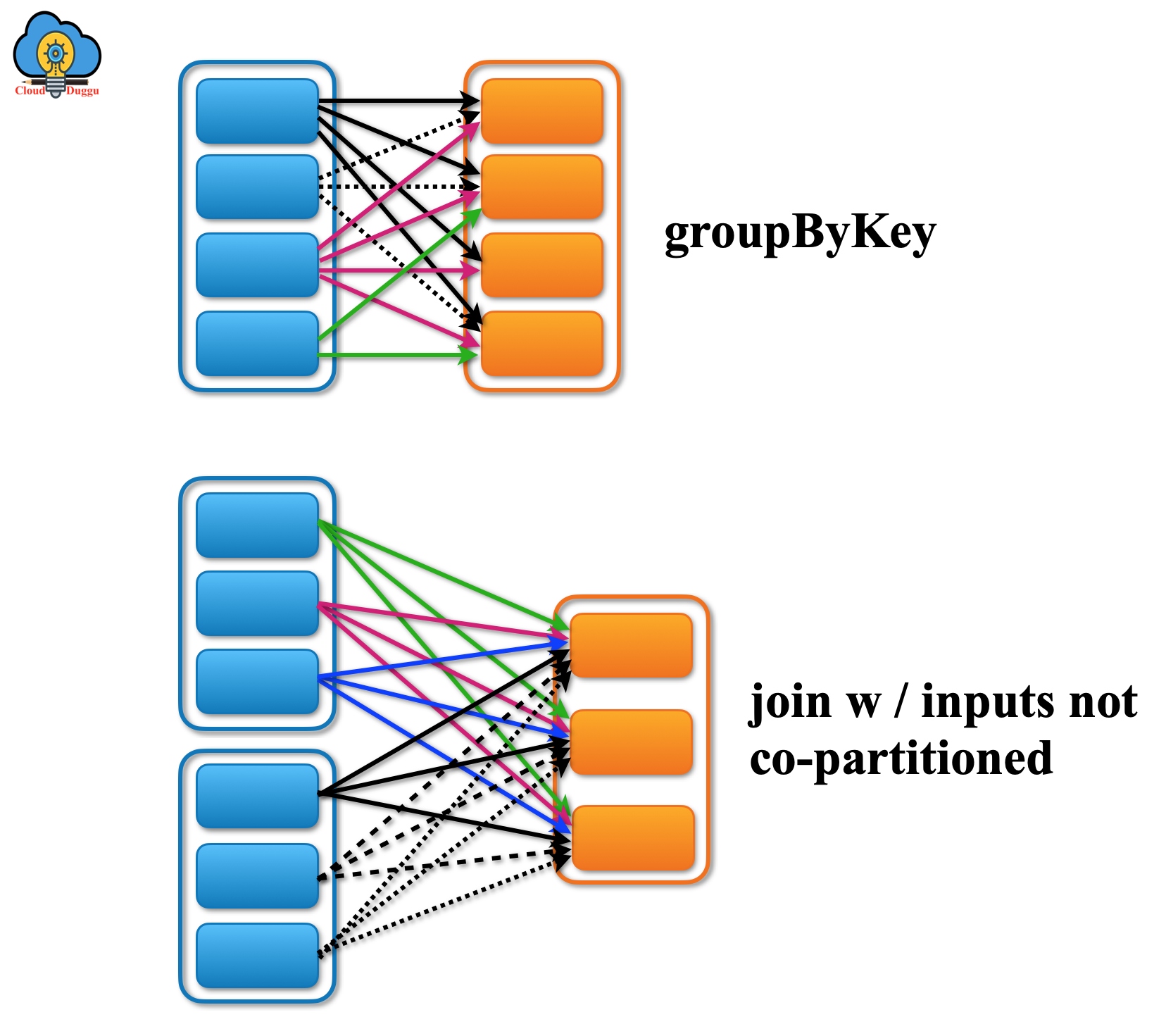

B. Wide Transformations

In wide transformations, multiple child RDD partitions may depend on a single parent RDD partition, so data usually has to be shuffled across partitions. Methods such as groupByKey(), reduceByKey(), and join() produce wide transformations.

There are many transformation methods present for Spark RDD.

Let us see each with an example.

Start the Spark shell using the command below (check the path of your Spark directory).

/home/cloudduggu/spark$ spark-shell

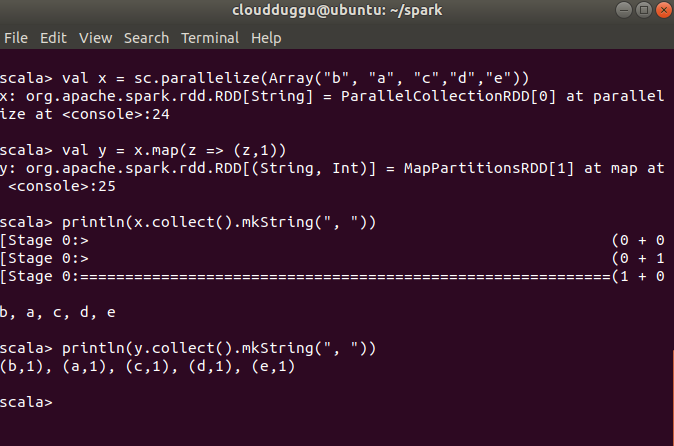

map()

It will return a new distributed dataset formed by passing each element of the source through a function.

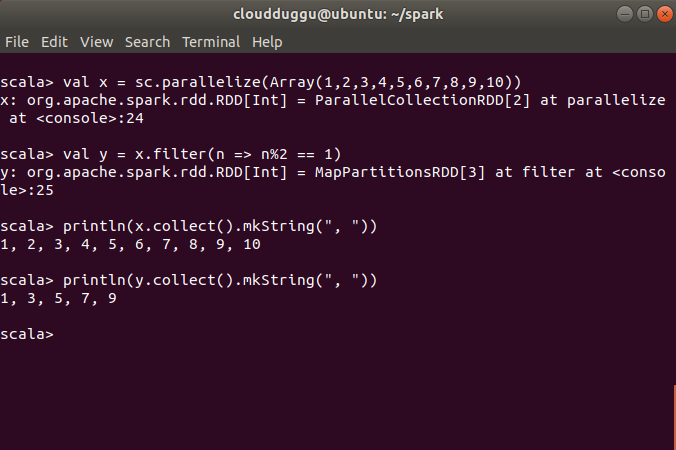
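As a text version of a map() call, here is a small sketch for spark-shell. It assumes `sc` is the shell's SparkContext; the word list is illustrative only.

```scala
// Map each word to its length.
val words = sc.parallelize(Seq("spark", "rdd", "map"))
val lengths = words.map(_.length)

val mapResult = lengths.collect()   // Array(5, 3, 3)
```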

filter()

It will return a new dataset formed by selecting those elements of the source for which the function returns true.

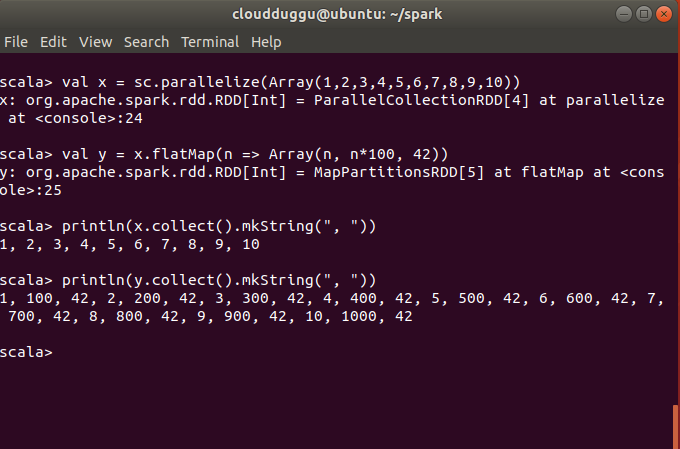
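A minimal filter() sketch for spark-shell, assuming `sc` is the shell's SparkContext and using made-up input data:

```scala
// Keep only the even numbers.
val oneToTen = sc.parallelize(1 to 10)
val evens = oneToTen.filter(_ % 2 == 0)

val filterResult = evens.collect()   // Array(2, 4, 6, 8, 10)
```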

flatMap()

It is similar to map(), but each input item can be mapped to 0 or more output items (so the function should return a Seq rather than a single item).

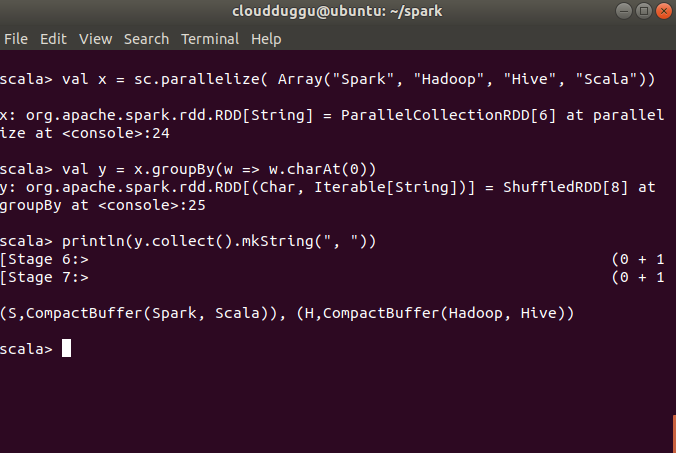
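The difference from map() is easiest to see when splitting lines into words. The following is a sketch for spark-shell, assuming `sc` is the shell's SparkContext; the input lines are illustrative.

```scala
// Each line yields several words; flatMap flattens them into one RDD.
val lines = sc.parallelize(Seq("hello spark", "hello rdd"))
val tokens = lines.flatMap(_.split(" "))

val flatMapResult = tokens.collect()   // Array(hello, spark, hello, rdd)
```

With map() instead of flatMap(), the result would be an RDD of two arrays rather than an RDD of four strings.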

groupByKey()

It groups the values of a pair RDD by key. When called on an RDD of (K, V) pairs, it returns an RDD of (K, Iterable<V>) pairs, where each key is paired with all the values that share it.

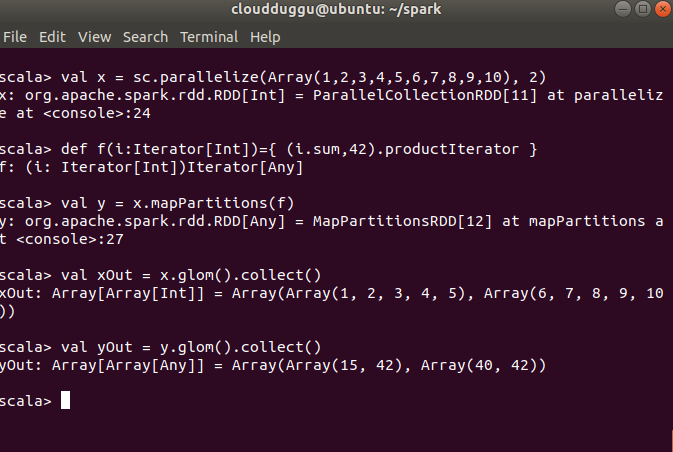
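A small groupByKey() sketch for spark-shell, assuming `sc` is the shell's SparkContext and using made-up pairs. The order of keys and of values within a group is not guaranteed, so the example sorts the values before inspecting them.

```scala
val pairs = sc.parallelize(Seq(("a", 1), ("b", 2), ("a", 3)))
val grouped = pairs.groupByKey()   // RDD[(String, Iterable[Int])]

// Sort each group so the result is deterministic, then bring it to the driver.
val groupedMap = grouped.mapValues(_.toList.sorted).collectAsMap()
// groupedMap("a") is List(1, 3); groupedMap("b") is List(2)
```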

mapPartitions()

It is similar to map(), but the function runs once per partition (block) of the RDD: it receives an iterator over the partition's elements and returns an iterator of results.

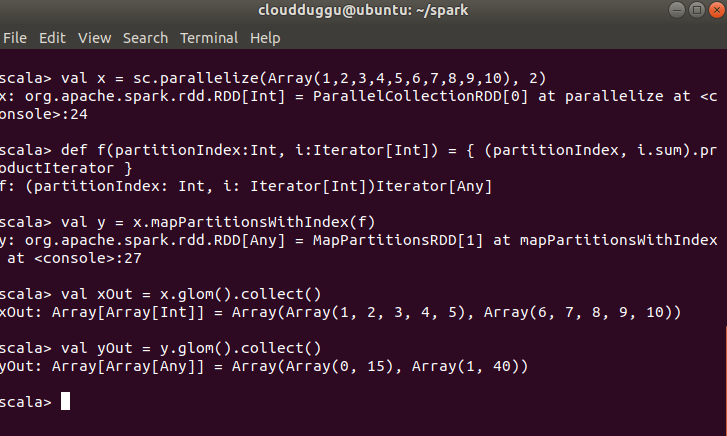
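A sketch of mapPartitions() for spark-shell, assuming `sc` is the shell's SparkContext. The data and the choice of two partitions are illustrative.

```scala
// Two partitions: (1, 2, 3) and (4, 5, 6).
val sixNumbers = sc.parallelize(1 to 6, 2)

// The function sees a whole partition as an iterator and emits one sum per partition.
val partitionSums = sixNumbers.mapPartitions(iter => Iterator(iter.sum))

val mapPartitionsResult = partitionSums.collect()   // Array(6, 15)
```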

mapPartitionsWithIndex()

It will return a new RDD by applying a function to each partition of this RDD while tracking the index of the original partition.

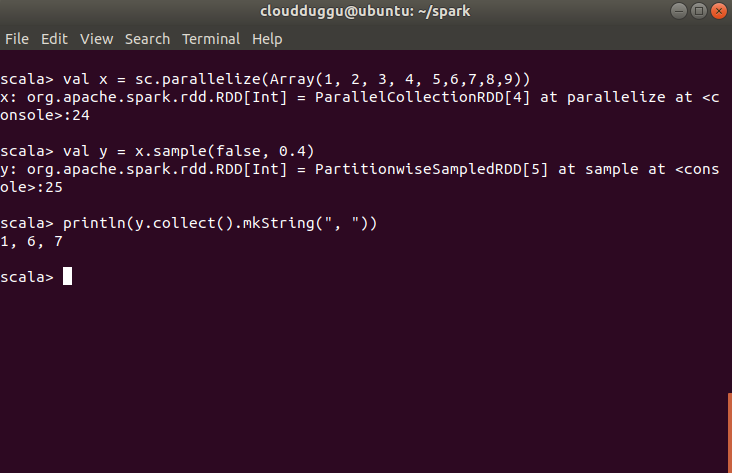
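A sketch of mapPartitionsWithIndex() for spark-shell, assuming `sc` is the shell's SparkContext; the letters and the partition count are made up for the example.

```scala
// Two partitions: (a, b) and (c, d).
val letters = sc.parallelize(Seq("a", "b", "c", "d"), 2)

// Tag every element with the index of the partition it lives in.
val tagged = letters.mapPartitionsWithIndex((idx, iter) => iter.map(x => (idx, x)))

val taggedResult = tagged.collect()   // Array((0,a), (0,b), (1,c), (1,d))
```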

sample()

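It will return a new RDD containing a random sample of the original RDD. You choose the fraction of the data to sample, whether to sample with or without replacement, and optionally a random seed.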

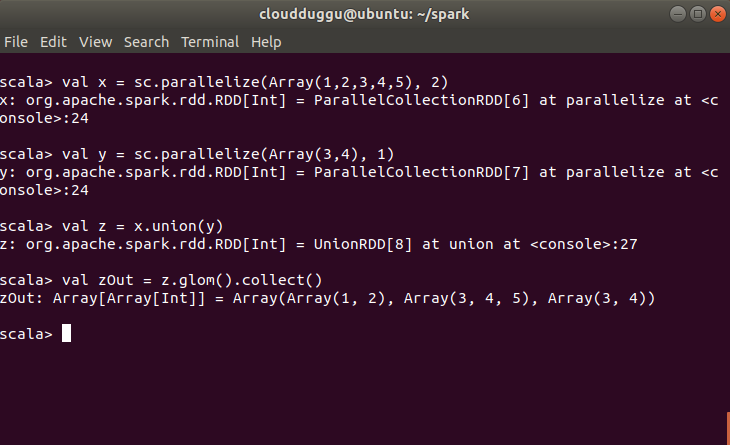
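A sample() sketch for spark-shell, assuming `sc` is the shell's SparkContext. The fraction and seed are arbitrary illustrative values. The fraction is an expected proportion, not an exact count, so no fixed output is shown.

```scala
val population = sc.parallelize(1 to 100)

// Sample roughly 10% of the elements without replacement.
val sampled = population.sample(withReplacement = false, fraction = 0.1, seed = 42L)

val sampleSize = sampled.count()   // around 10, but not guaranteed to be exactly 10
```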

union()

It returns a new dataset that contains the union of the elements in the source dataset and the argument. Duplicate elements are kept.

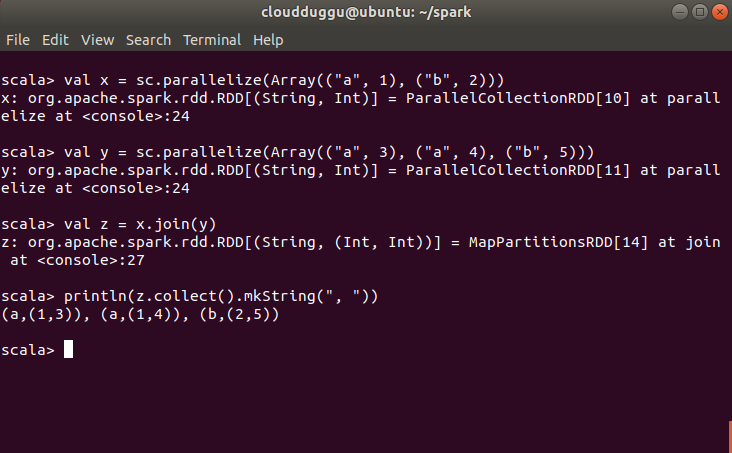
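A union() sketch for spark-shell, assuming `sc` is the shell's SparkContext and using made-up data:

```scala
val left = sc.parallelize(Seq(1, 2, 3))
val right = sc.parallelize(Seq(3, 4))

// The shared element 3 appears twice in the result.
val unionResult = left.union(right).collect()   // Array(1, 2, 3, 3, 4)
```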

join()

When called on RDDs of (K, V) and (K, W) pairs, it returns a new RDD of (K, (V, W)) pairs containing all pairs of elements that have the same key in both RDDs.

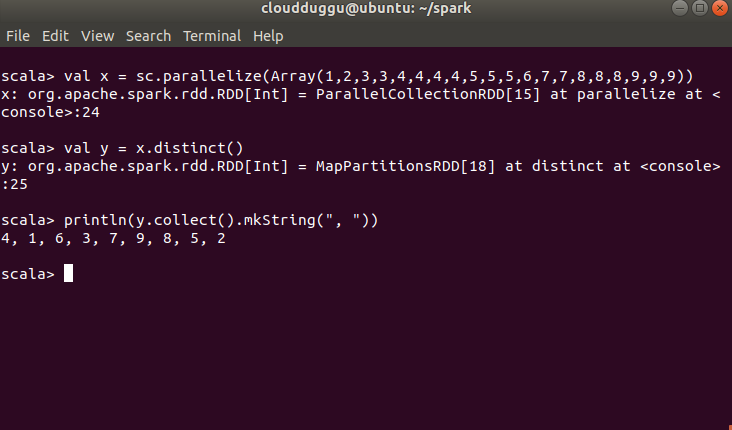
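A join() sketch for spark-shell, assuming `sc` is the shell's SparkContext. The names and values are invented for the example.

```scala
val ages = sc.parallelize(Seq(("alice", 30), ("bob", 25)))
val cities = sc.parallelize(Seq(("alice", "Paris"), ("carol", "Rome")))

// Only keys present in both RDDs survive an inner join.
val joined = ages.join(cities)

val joinResult = joined.collect()   // Array((alice,(30,Paris)))
```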

distinct()

It returns a new RDD containing distinct items from the original RDD (omitting all duplicates).

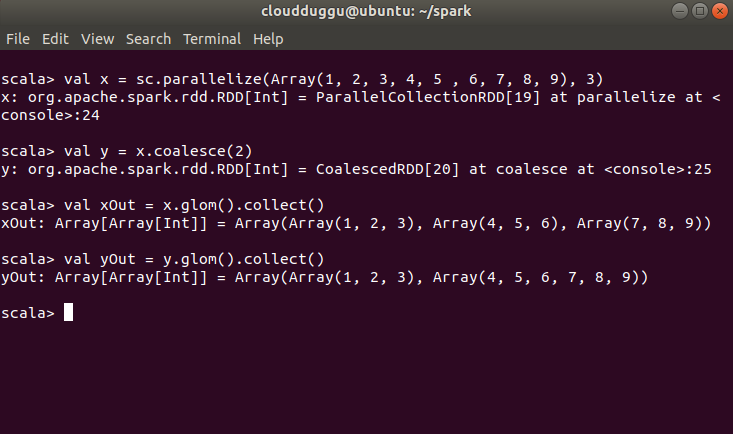
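A distinct() sketch for spark-shell, assuming `sc` is the shell's SparkContext. The output order of distinct() is not guaranteed, so the example sorts the collected array.

```scala
val withDuplicates = sc.parallelize(Seq(1, 2, 2, 3, 3, 3))

val distinctResult = withDuplicates.distinct().collect().sorted   // Array(1, 2, 3)
```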

coalesce()

It returns a new RDD which is reduced to a smaller number of partitions.

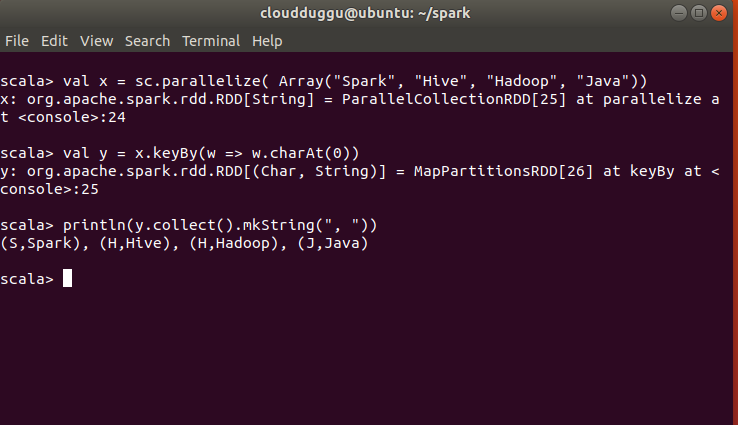
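A coalesce() sketch for spark-shell, assuming `sc` is the shell's SparkContext; the partition counts are illustrative.

```scala
// Start with 8 partitions and reduce to 2.
val manyPartitions = sc.parallelize(1 to 100, 8)
val fewPartitions = manyPartitions.coalesce(2)

val coalescedCount = fewPartitions.getNumPartitions   // 2
```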

keyBy()

It creates a Pair RDD, forming one pair for each item in the original RDD. The pair’s key is calculated from the value via a user-supplied function.
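A keyBy() sketch for spark-shell, assuming `sc` is the shell's SparkContext and using made-up data:

```scala
val animals = sc.parallelize(Seq("cat", "horse", "dog"))

// The key of each pair is the length of the original string.
val byLength = animals.keyBy(_.length)

val keyByResult = byLength.collect()   // Array((3,cat), (5,horse), (3,dog))
```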

2. Actions

Actions operate on an RDD, typically one produced by earlier transformations, and trigger the actual computation. Most actions return their final result to the driver program. For example, map() transforms the dataset and returns a new RDD, while reduce() aggregates the elements of that RDD and returns the result to the driver.

There are many action methods present in Spark RDD.

Let us see each with an example.

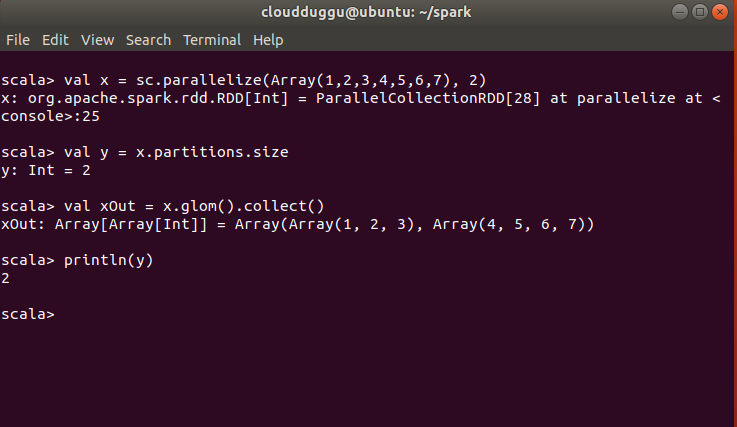

getNumPartitions()

It returns the number of partitions in the RDD. Strictly speaking, it only reads RDD metadata and does not launch a job.

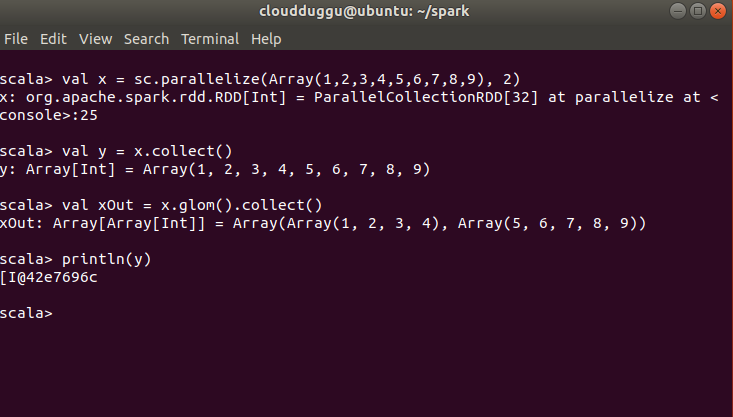
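A getNumPartitions sketch for spark-shell, assuming `sc` is the shell's SparkContext. In the Scala API the method is declared without a parameter list, so it is called without parentheses.

```scala
// Ask for 4 partitions explicitly when creating the RDD.
val fourParts = sc.parallelize(1 to 100, 4)

val numPartitions = fourParts.getNumPartitions   // 4
```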

collect()

It returns all items in the RDD to the driver as a single array (a list in PySpark). Use it only when the result is small enough to fit in the driver's memory.

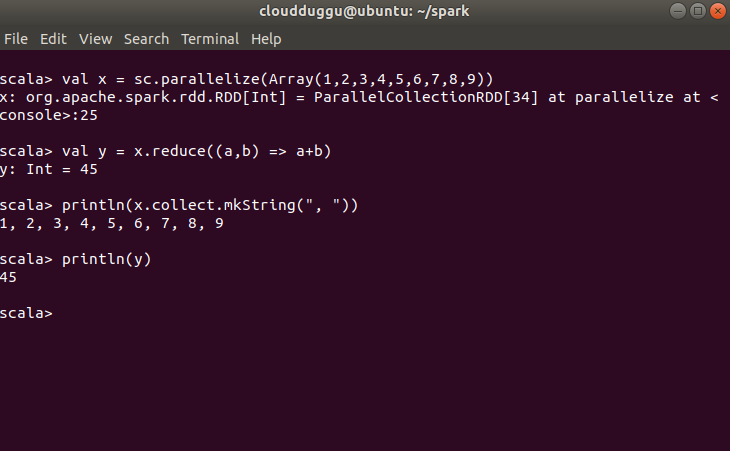

reduce()

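It aggregates all the elements of the RDD by applying a user function pairwise to elements and partial results, and returns the result to the driver. The function should be commutative and associative so that it can be computed correctly in parallel.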

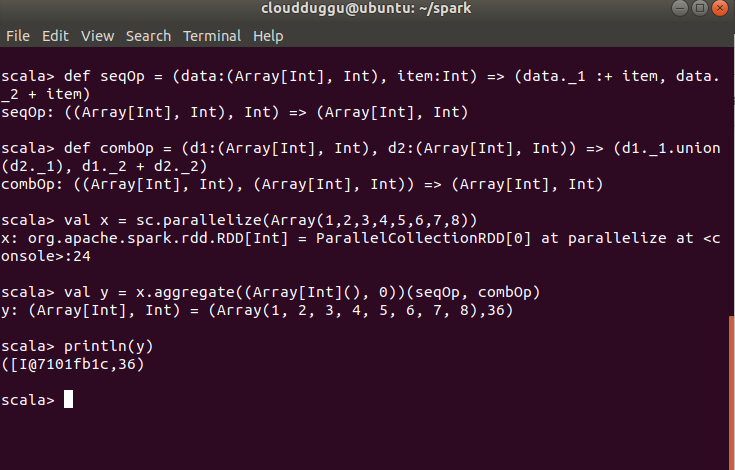
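A reduce() sketch for spark-shell, assuming `sc` is the shell's SparkContext and using made-up data:

```scala
val oneToFive = sc.parallelize(1 to 5)

// Addition is commutative and associative, so it is safe to use with reduce().
val total = oneToFive.reduce(_ + _)   // 15
```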

aggregate()

It aggregates all the elements of the RDD in two steps. First, within each partition, a user function combines the elements with a user-supplied initial value (the "zero value"). Then a second user function combines the per-partition results, and the final result is returned to the driver.

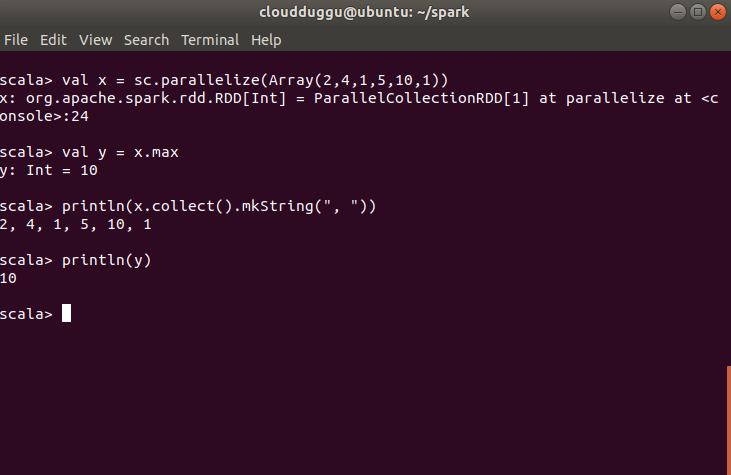
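The two-step aggregation can be sketched in spark-shell by computing a sum and a count in one pass. This assumes `sc` is the shell's SparkContext; the scores are illustrative data.

```scala
val scores = sc.parallelize(Seq(10, 20, 30, 40))

// Zero value (0, 0) holds (running sum, running count).
val (scoreSum, scoreCount) = scores.aggregate((0, 0))(
  (acc, x) => (acc._1 + x, acc._2 + 1),   // fold one element into a partition's accumulator
  (a, b) => (a._1 + b._1, a._2 + b._2)    // merge accumulators from two partitions
)
// scoreSum is 100, scoreCount is 4
```

Unlike reduce(), the result type here (a pair of Ints) differs from the element type (Int), which is the main reason to reach for aggregate().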

max()

It will return the maximum item in the RDD.

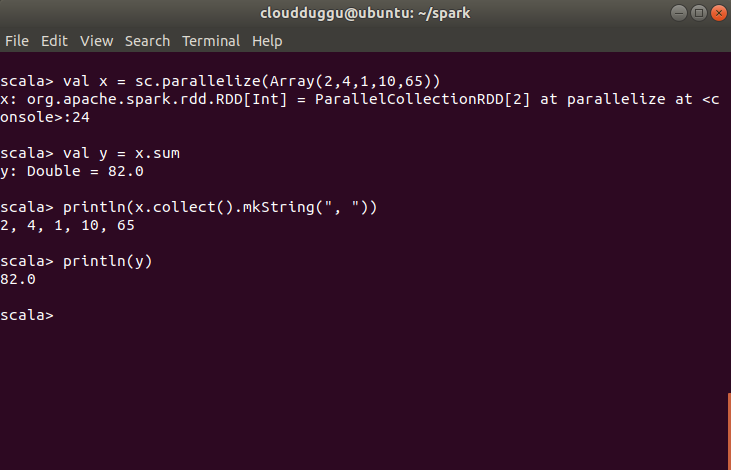

sum()

It will return the sum of the items in the RDD.

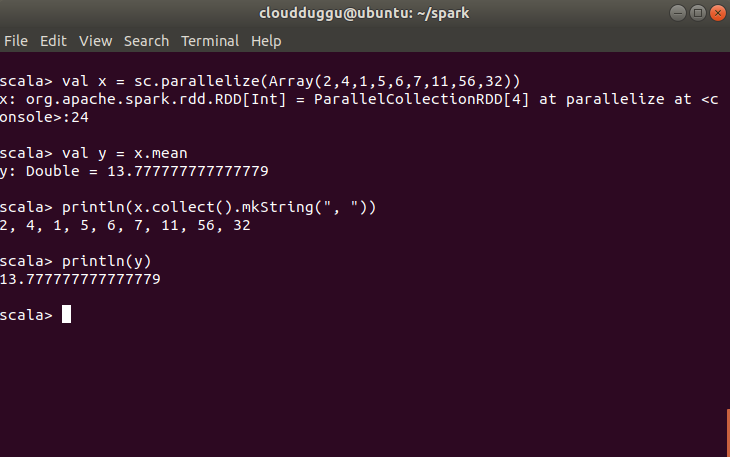

mean()

It will return the mean of the items in the RDD.

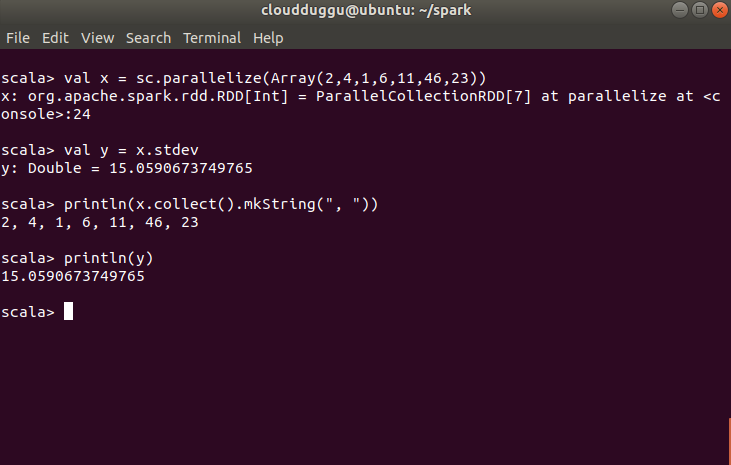

stdev()

It returns the population standard deviation of the items in the RDD.

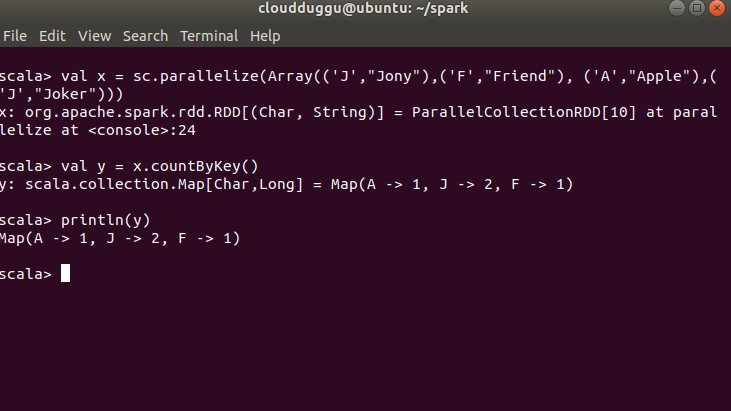
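The four numeric actions max(), sum(), mean(), and stdev() can be tried together in spark-shell. This sketch assumes `sc` is the shell's SparkContext and uses a small made-up data set whose mean is 5 and whose population standard deviation is 2. Floating-point results may differ in the last digits.

```scala
val values = sc.parallelize(Seq(2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0))

val maxValue = values.max()       // 9.0
val sumValue = values.sum()       // 40.0
val meanValue = values.mean()     // approximately 5.0
val stdevValue = values.stdev()   // approximately 2.0
```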

countByKey()

It counts the number of elements for each key in a pair RDD and returns the counts to the driver as a map of (key, count) entries.
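A countByKey() sketch for spark-shell, assuming `sc` is the shell's SparkContext; the event names are invented for the example.

```scala
val events = sc.parallelize(Seq(("click", 1), ("view", 1), ("click", 1)))

// The values are ignored; only the number of elements per key is counted.
val keyCounts = events.countByKey()
// keyCounts("click") is 2, keyCounts("view") is 1
```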