1. Project Idea

1. This Spark Streaming project uses the NSE stock exchange LTP (Last Traded Price) data flow as its example.

2. The streaming analysis runs on stock exchange LTP data files in CSV format.

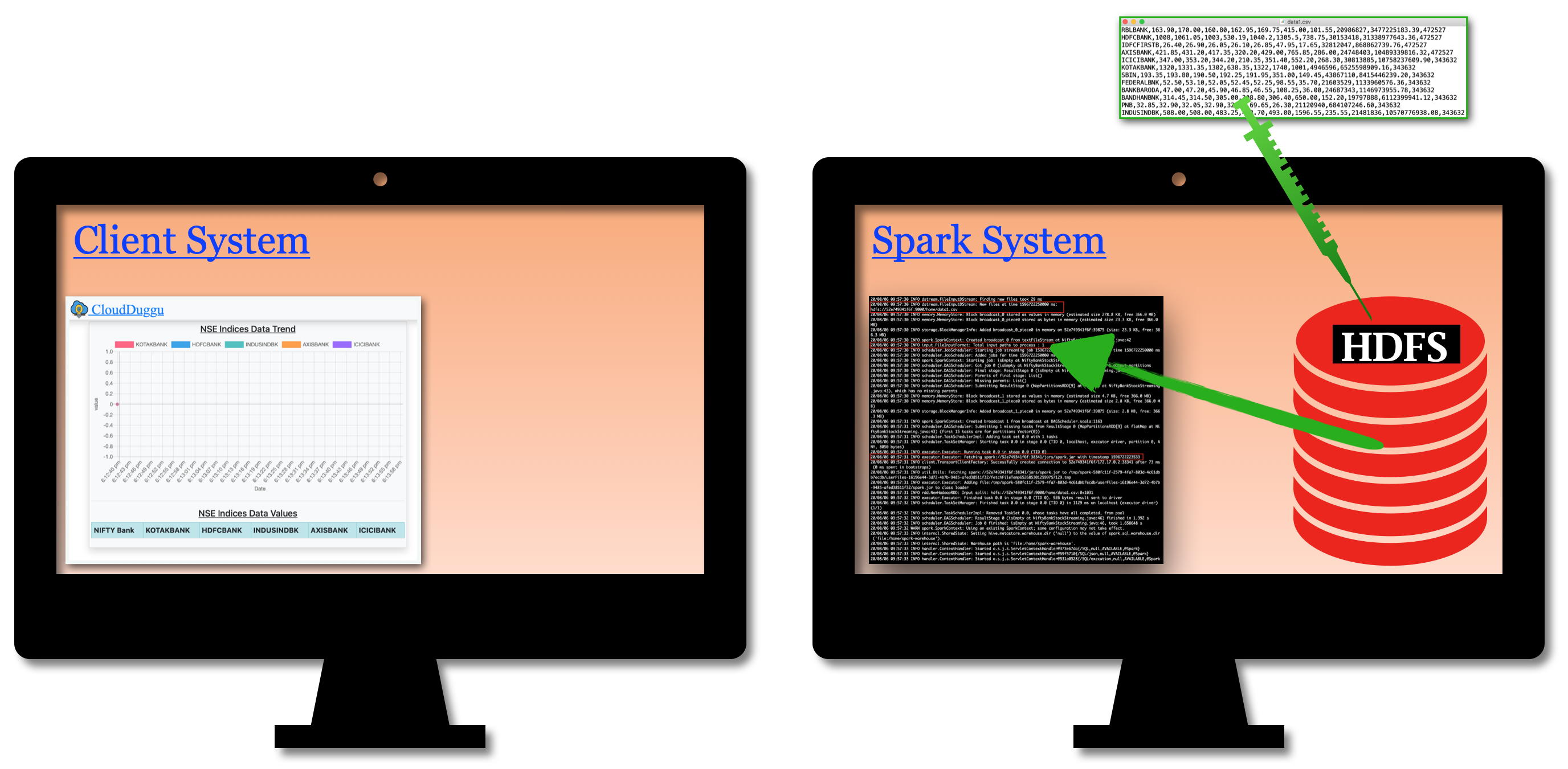

3. A shell script continuously copies the CSV data from the local system to Hadoop HDFS.

4. The Spark Streaming Java project runs on the Spark system and creates a data stream connection with HDFS.

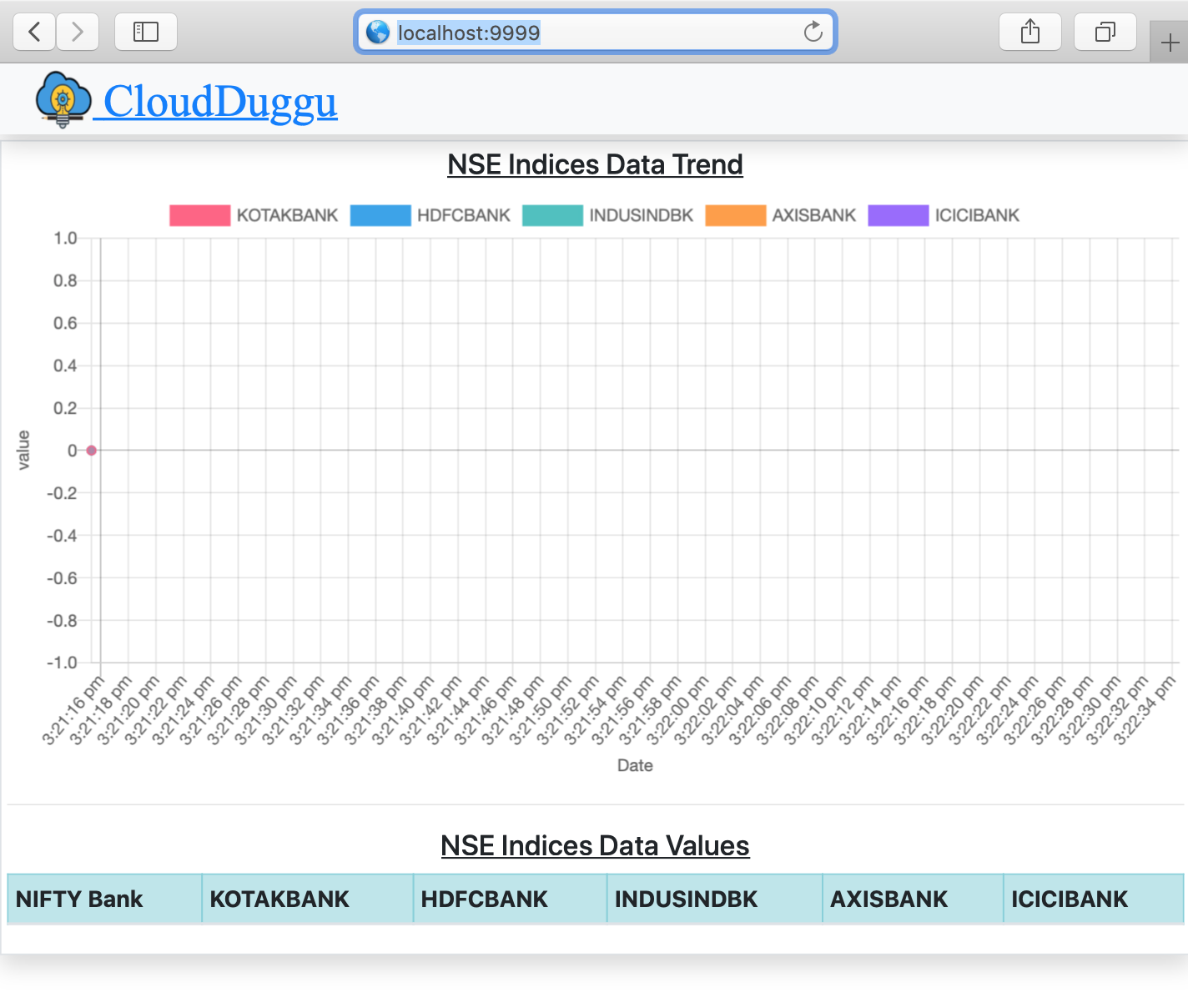

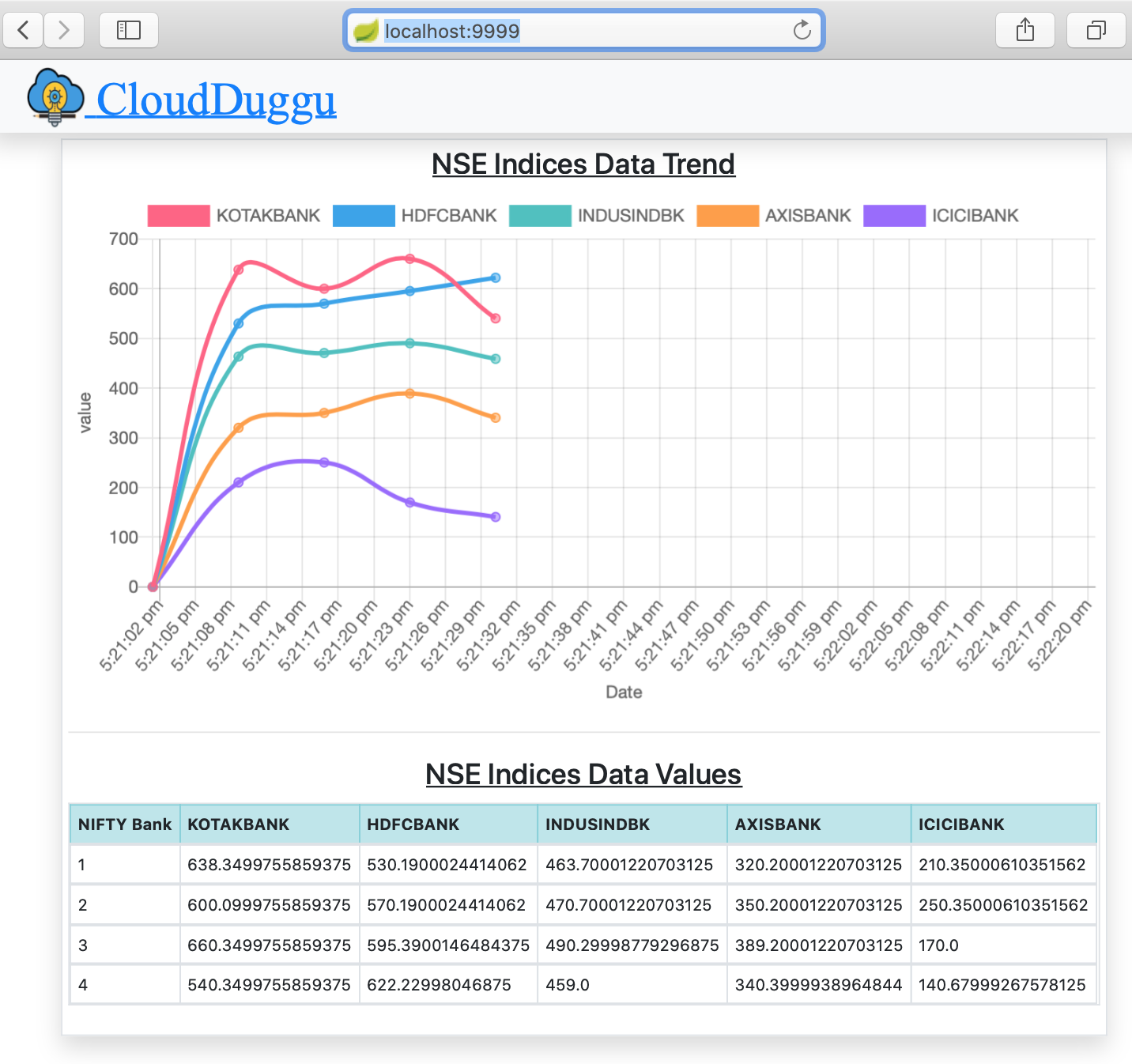

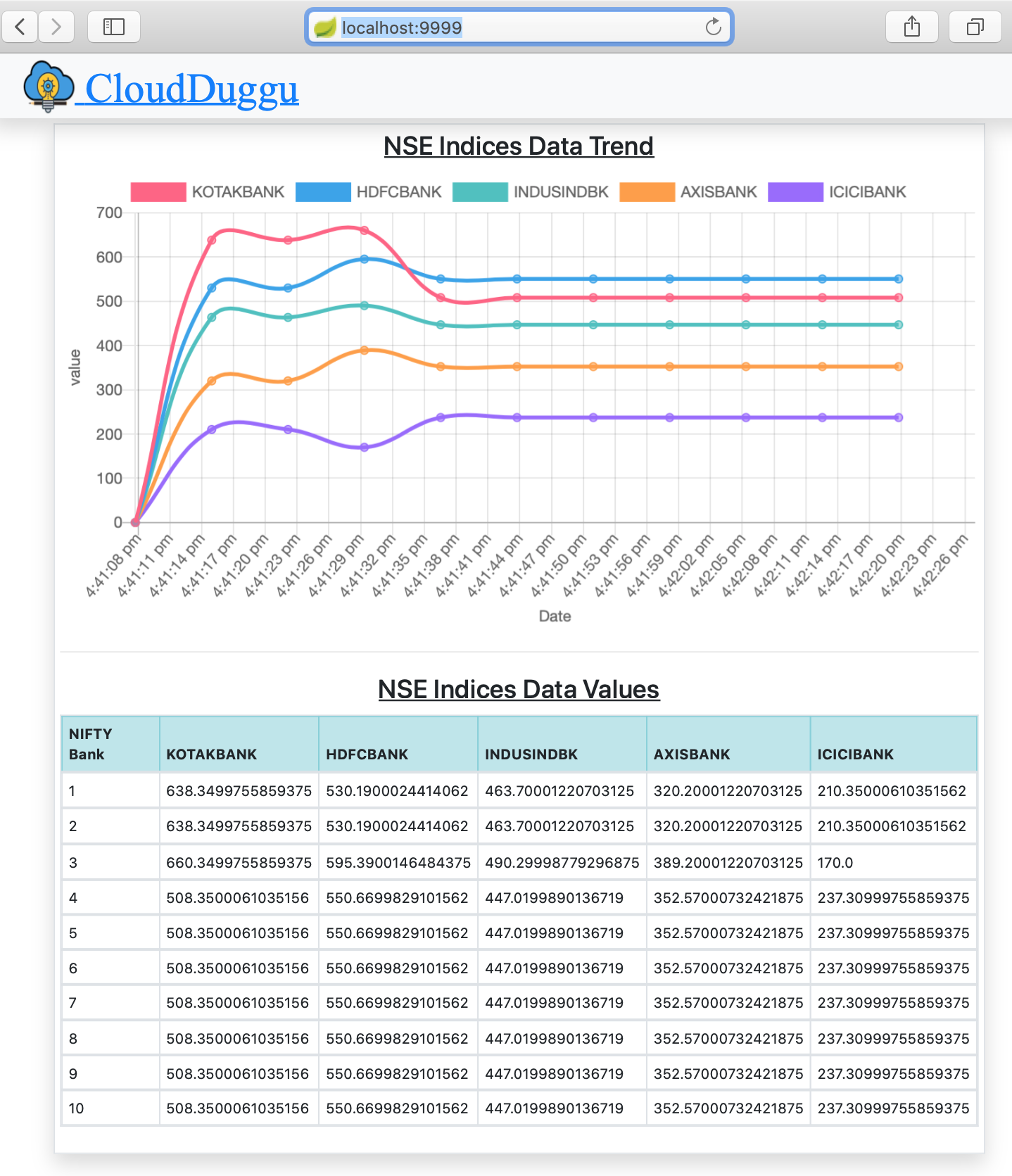

5. The Spring Java client project runs on the Client system and shows the result data as a line chart and in tabular format.

2. Spark Project Streaming Workflow

a. Start the Client system and wait for the resulting output from the Spark system.

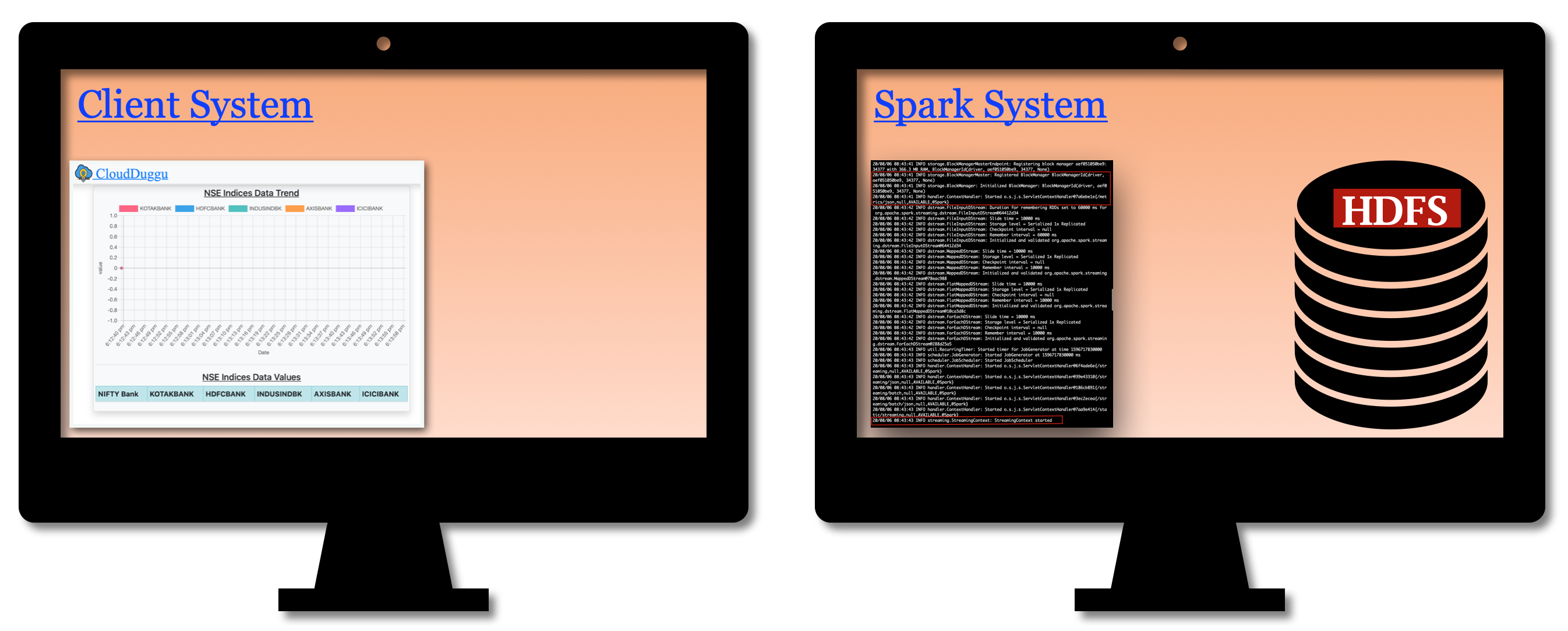

b. Start the Spark system and execute file streaming from HDFS.

c. As soon as a data file is pushed to HDFS, Spark fetches it and starts executing the logic.

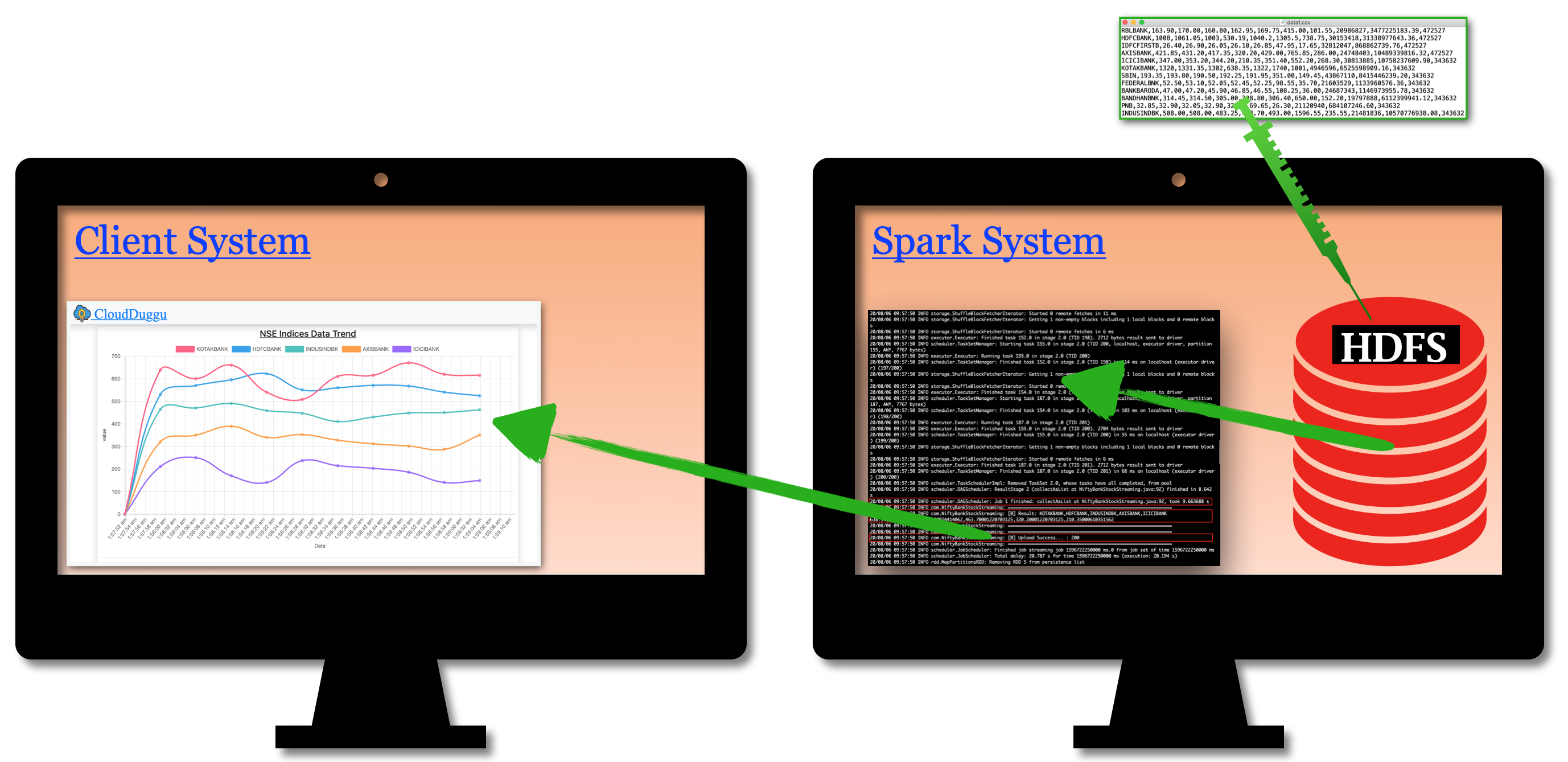

d. After the program executes successfully, the Spark system uploads the result to the Client system. The Client system then shows the result in a chart.

3. Building Of Project

To run this project, you can install a VM (Virtual Machine) on your local system and configure Spark on it. With this setup, your local system works as the Client system and the Spark VM works as the Spark system. Alternatively, you can use two systems that can communicate with each other, with Spark configured on one of them.

Let us see this project in detail...

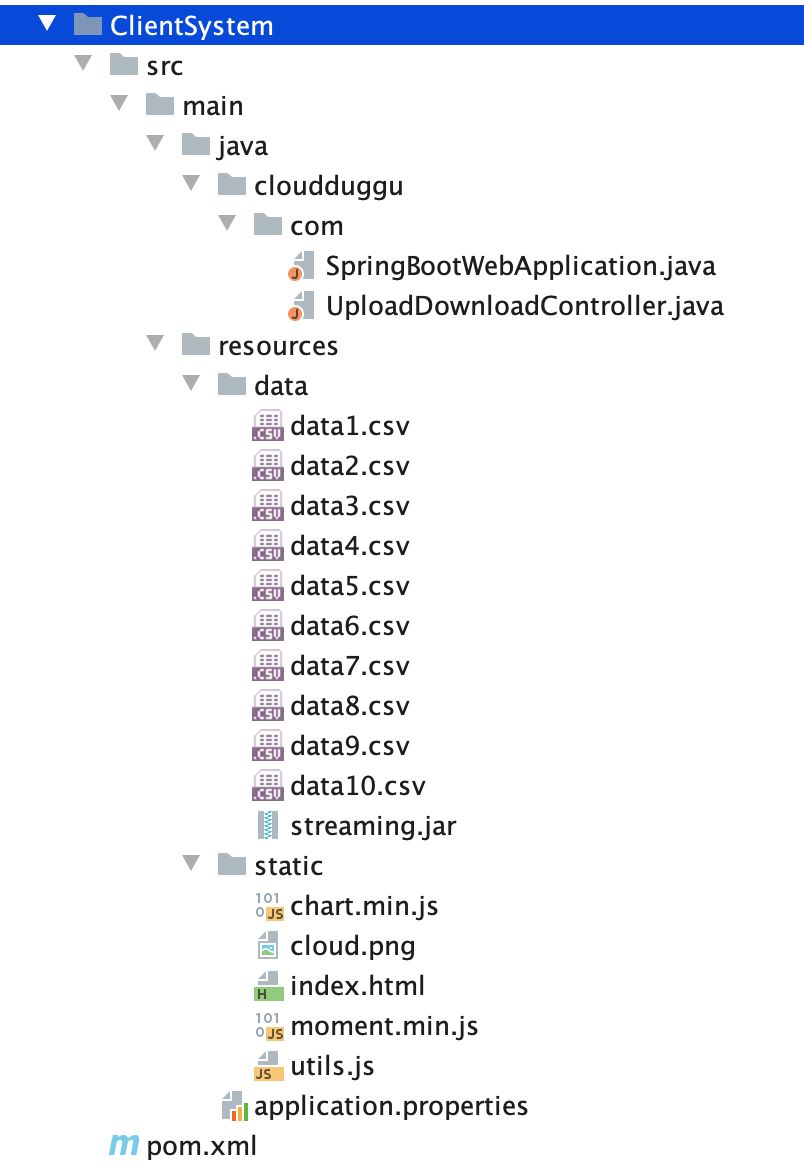

a. Client System

It is a Spring Boot Java project. Building this project creates the "client.jar" executable file.

It contains Java code, the Spark executable jar, data files, and static pages (HTML, JavaScript).

The Java code has 2 files: SpringBootWebApplication.java and UploadDownloadController.java.

SpringBootWebApplication.java is the main project file, which is responsible for starting the application on an embedded server.

UploadDownloadController.java provides the download and upload HTTP URL services for the Spark system.

The data folder has the unprocessed Nifty Bank stock CSV data files and the executable Spark project file (streaming.jar).

The static folder has the HTML pages and the dependent JS and image files. This is the main client view page, which shows the Spark processing result.

pom.xml is the Maven build file. It holds the Java dependencies and build configuration details.

To create the "client.jar" file, use the command "mvn clean package".

Click Here To Download "ClientSystem" project zip file.

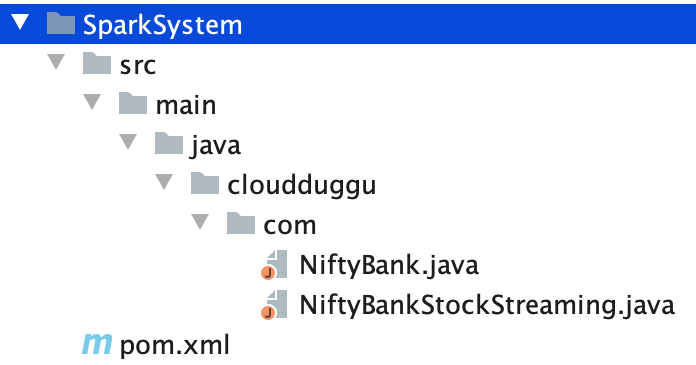

b. Spark System

It is a Java project. Building this project creates the "streaming.jar" executable file.

The Spark project has 2 Java files (NiftyBank.java, NiftyBankStockStreaming.java) and 1 build tool file (pom.xml).

NiftyBank.java is used to create a "JavaRDD" from the CSV data file.

NiftyBankStockStreaming.java is the main Spark Streaming logic file.

pom.xml contains the external code dependencies and the main class details.

To create the "streaming.jar" file, use the command "mvn clean package".

Click Here To Download "SparkSystem" project zip file.
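Both projects are built with the same Maven command, so the build can be wrapped in one small helper. This is a minimal sketch, not part of the project: `build_jar` and the `MVN` override are hypothetical names, and it assumes Maven's default output directory (target/) and that the jar names match the ones given above.

```shell
#!/bin/sh
# Hypothetical helper: build a Maven project and report the jar it produced.
# MVN can be overridden, for example to point at a Maven wrapper script.
MVN=${MVN:-mvn}

build_jar() {
    project_dir=$1    # directory that contains pom.xml
    jar_name=$2       # expected artifact, e.g. client.jar or streaming.jar
    (cd "$project_dir" && $MVN clean package) || return 1
    # Maven writes build output to target/ unless the pom overrides it.
    if [ -f "$project_dir/target/$jar_name" ]; then
        echo "$project_dir/target/$jar_name"
    else
        echo "build finished but $jar_name was not found in target/" >&2
        return 1
    fi
}
```

For example, `build_jar ./ClientSystem client.jar` and `build_jar ./SparkSystem streaming.jar`, where the directory names are assumed to match the unzipped project folders.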

4. Run The Project

Each step below lists the action for a. Client System and b. Spark System.

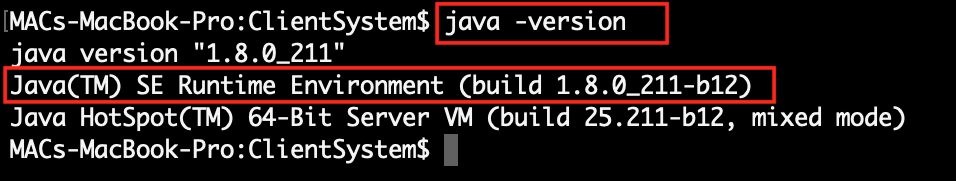

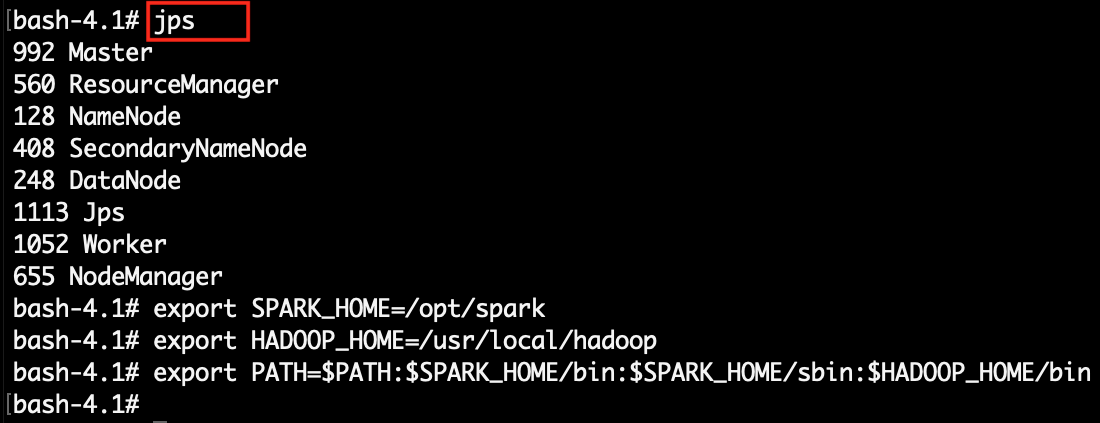

1. a. Client System: Verify that Java is installed on the Client system.

b. Spark System: Verify that all Hadoop and Spark services are running on the Spark system. Also check that the exported PATH variable includes the Hadoop and Spark commands.
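The checks in step 1 can be scripted. The sketch below is a minimal example for a POSIX shell; `missing_commands` is a hypothetical helper, and `java`, `hdfs` and `spark-submit` are the usual launcher names for Java, Hadoop and Spark, which your installation may expose differently.

```shell
#!/bin/sh
# Hypothetical helper: print every command from the argument list that
# cannot be found on PATH, one per line. No output means all were found.
missing_commands() {
    for cmd in "$@"; do
        command -v "$cmd" >/dev/null 2>&1 || echo "$cmd"
    done
}
```

On the Client system, `missing_commands java` should print nothing; on the Spark system, the same holds for `missing_commands java hdfs spark-submit`. To confirm that the Hadoop services are actually running, the JDK tool `jps` lists the running Java processes, among which daemons such as NameNode and DataNode should appear.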

2. |

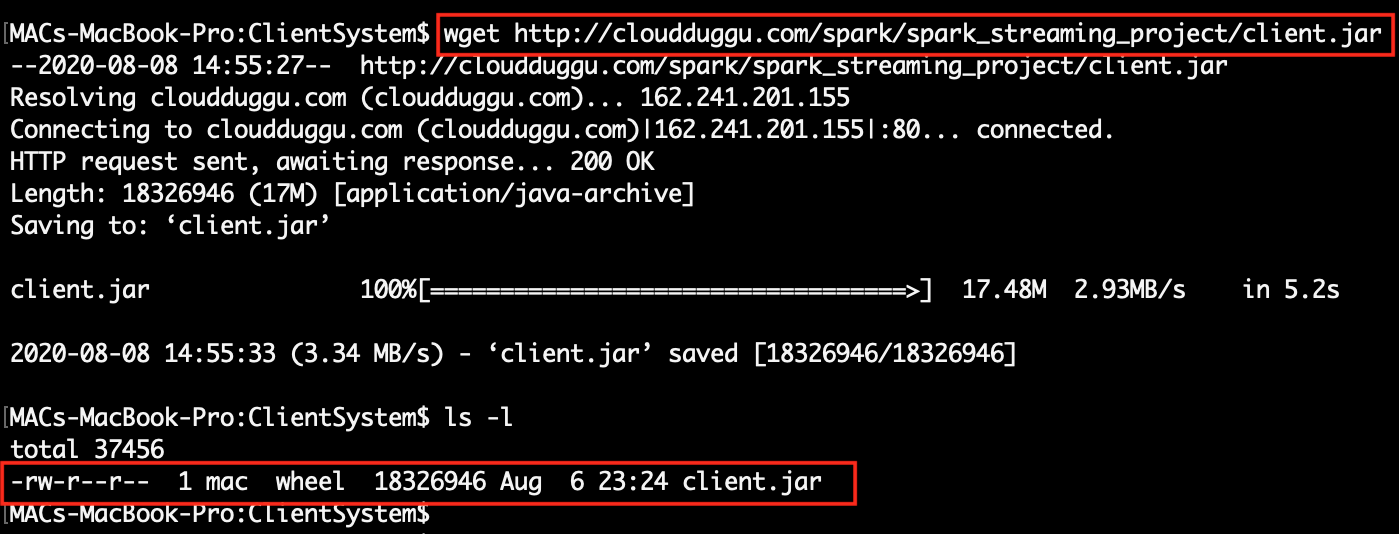

Download client.jar in the Client system. Click Here To Download "client.jar" executable jar file. |

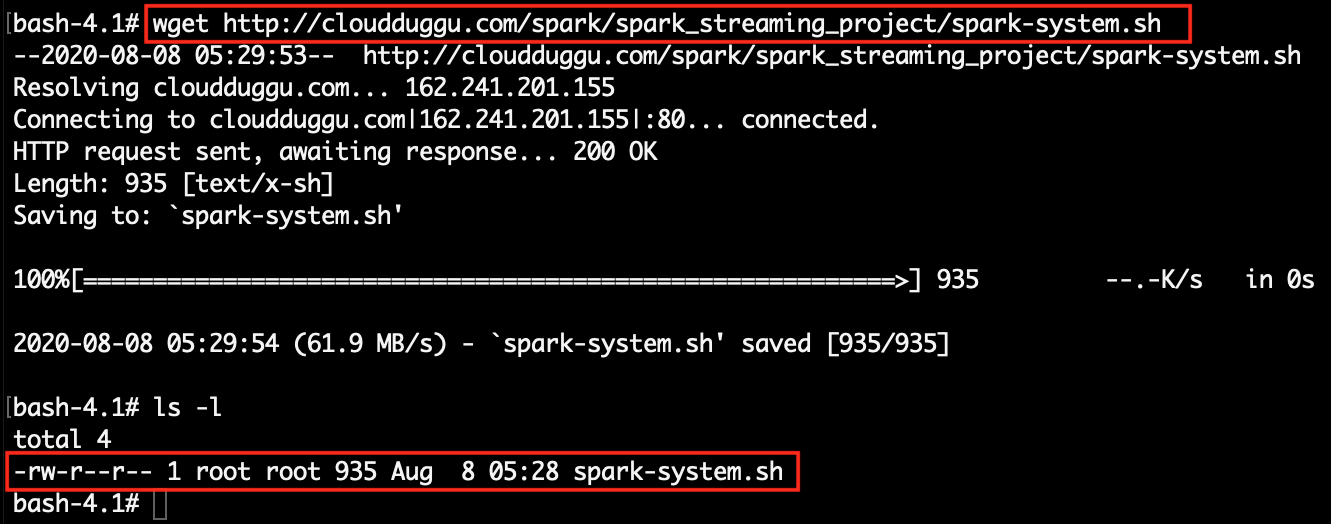

Download spark-system. sh shell script file in Spark system. Click Here To Download "spark-system.sh" shell script file. |

|

|

|

3. |

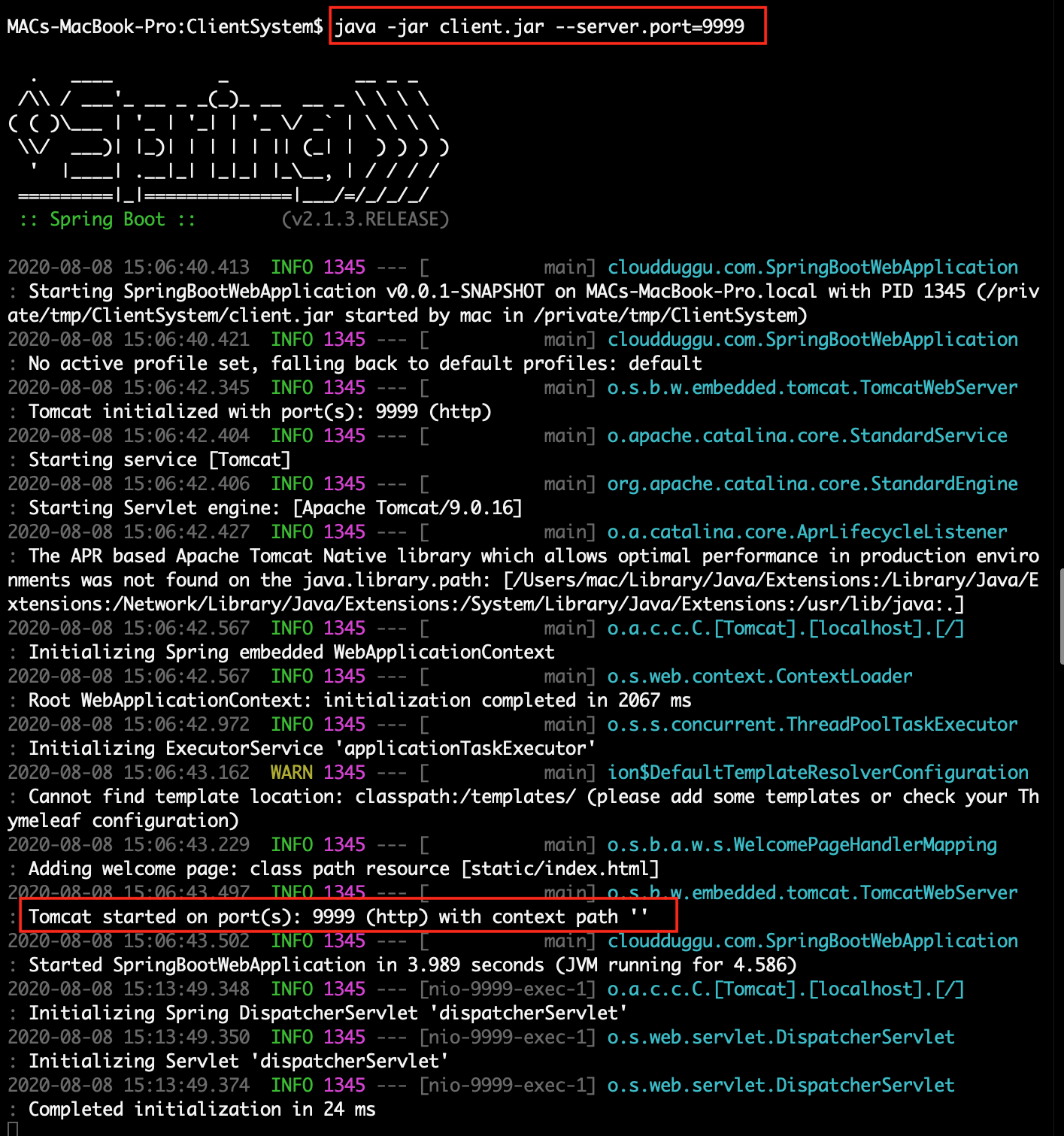

Run client.jar in the client system. At the execution time pass server port 9999. Here we can use a different port if the port already uses in the client system.

|

|

|

||

|

||
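The exact launch command is only visible in the screenshot, so the following is a sketch under one assumption: that the port is passed as the standard Spring Boot `--server.port` command-line property. `client_command` is a hypothetical helper that validates the port and prints the command to run.

```shell
#!/bin/sh
# Hypothetical helper: print the launch command for the client jar.
# Assumes the port is passed as Spring Boot's --server.port property.
client_command() {
    port=${1:-9999}    # default to the port used in this article
    case $port in
        *[!0-9]*) echo "invalid port: $port" >&2; return 1 ;;
    esac
    if [ "$port" -lt 1 ] || [ "$port" -gt 65535 ]; then
        echo "port out of range: $port" >&2
        return 1
    fi
    echo "java -jar client.jar --server.port=$port"
}
```

Calling `client_command` prints the command for port 9999, and `client_command 8081` prints the same command for a different port if 9999 is taken.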

4. |

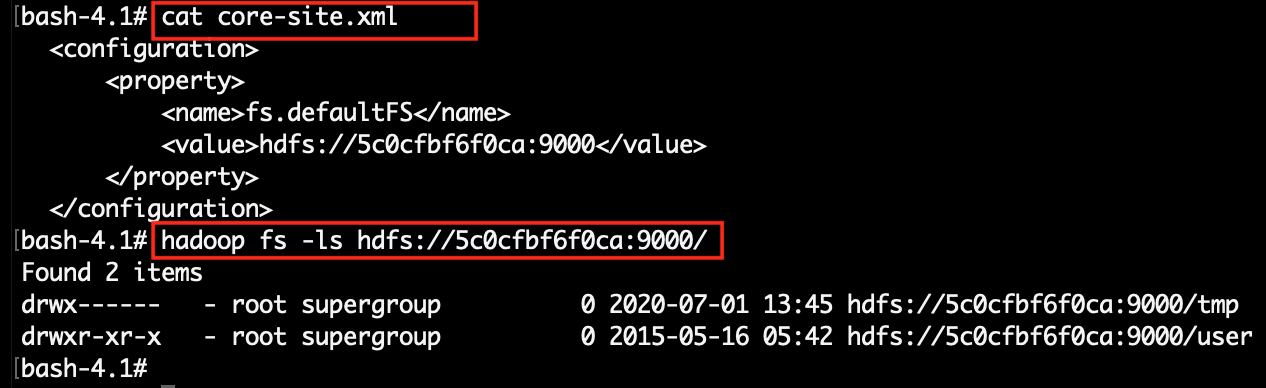

Read the Hadoop config core-site.xml file and find the Hadoop access port URL link.

|

|

|

||
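In a typical Hadoop setup the access URL is stored in core-site.xml under the `fs.defaultFS` property. The sketch below extracts it with awk; `hdfs_url_from_core_site` is a hypothetical helper, and it assumes the `<name>` and `<value>` elements sit on separate lines with the name first. It is not a general XML parser.

```shell
#!/bin/sh
# Hypothetical helper: print the value of fs.defaultFS from a core-site.xml.
# Assumes <name> and <value> are on separate lines, name first.
hdfs_url_from_core_site() {
    awk '
        /<name>fs\.defaultFS<\/name>/ { found = 1; next }
        found && /<value>/ {
            sub(/.*<value>/, "")      # drop everything up to the opening tag
            sub(/<\/value>.*/, "")    # drop the closing tag and what follows
            print
            exit
        }
    ' "$1"
}
```

The file is commonly found at `$HADOOP_HOME/etc/hadoop/core-site.xml`, though the location depends on the installation. On a configured node, `hdfs getconf -confKey fs.defaultFS` returns the same value without parsing the file. Note that the script argument used in this article appends a directory (/home) to that URL.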

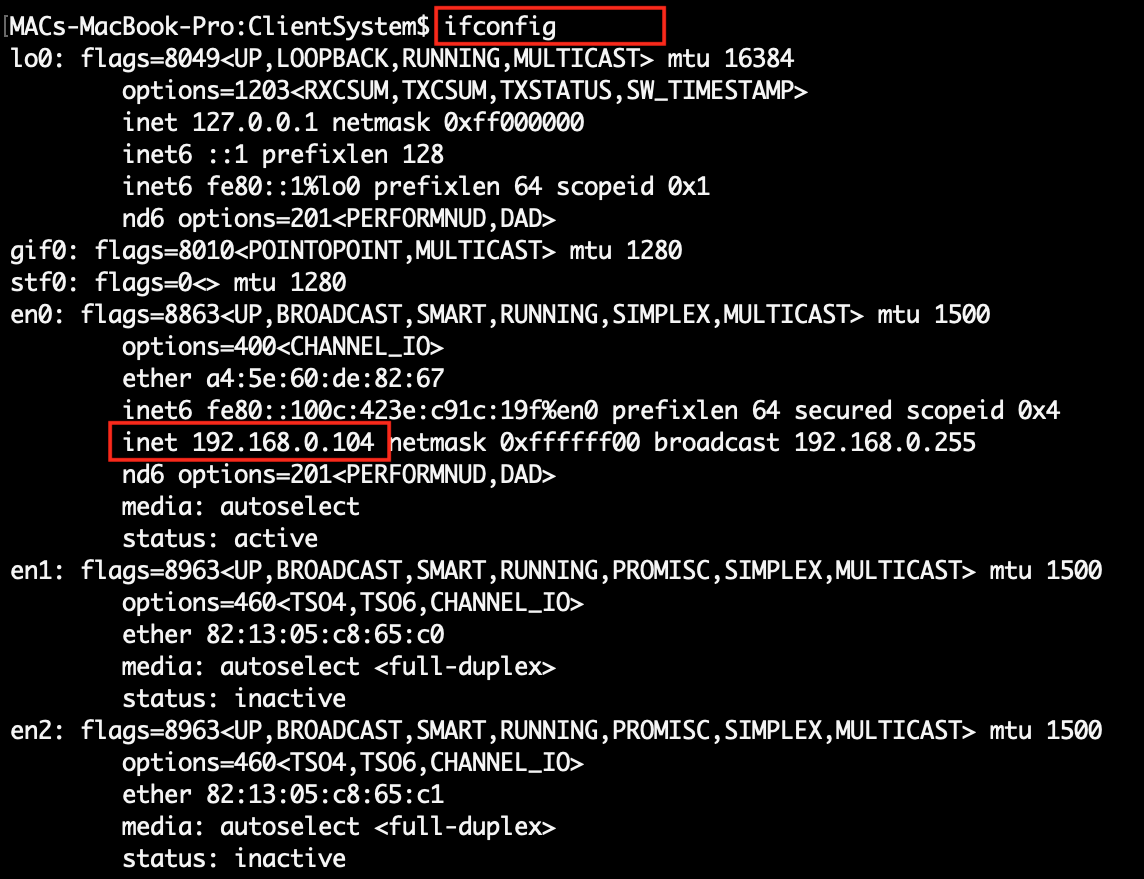

5. a. Client System: Find the client IP address that is reachable from the Spark system.

b. Spark System: Run the spark-system.sh shell script on the Spark system, passing the HDFS link, client IP, and client port at execution time.
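Before passing the client IP to the script, it can help to sanity-check the value. This is a minimal sketch that assumes an IPv4 address; `is_ipv4` is a hypothetical helper and not part of the project.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the argument looks like a dotted
# IPv4 address (four numeric fields, each no larger than 255).
is_ipv4() {
    printf '%s\n' "$1" | awk -F. '
        NF != 4 { exit 1 }
        {
            for (i = 1; i <= 4; i++)
                if ($i !~ /^[0-9]+$/ || $i > 255) exit 1
        }
    '
}
```

On most Linux systems, `hostname -I` on the Client system lists its addresses; pick the one on the network the Spark system can reach (192.168.0.104 in this article's example) and check it with `is_ipv4 192.168.0.104`.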

6. a. Client System: The Client system shows the result automatically as soon as the Spark system uploads it.

b. Spark System: The Spark system uploads the result after it has successfully processed the data files.

5. Project Files Description In Detail

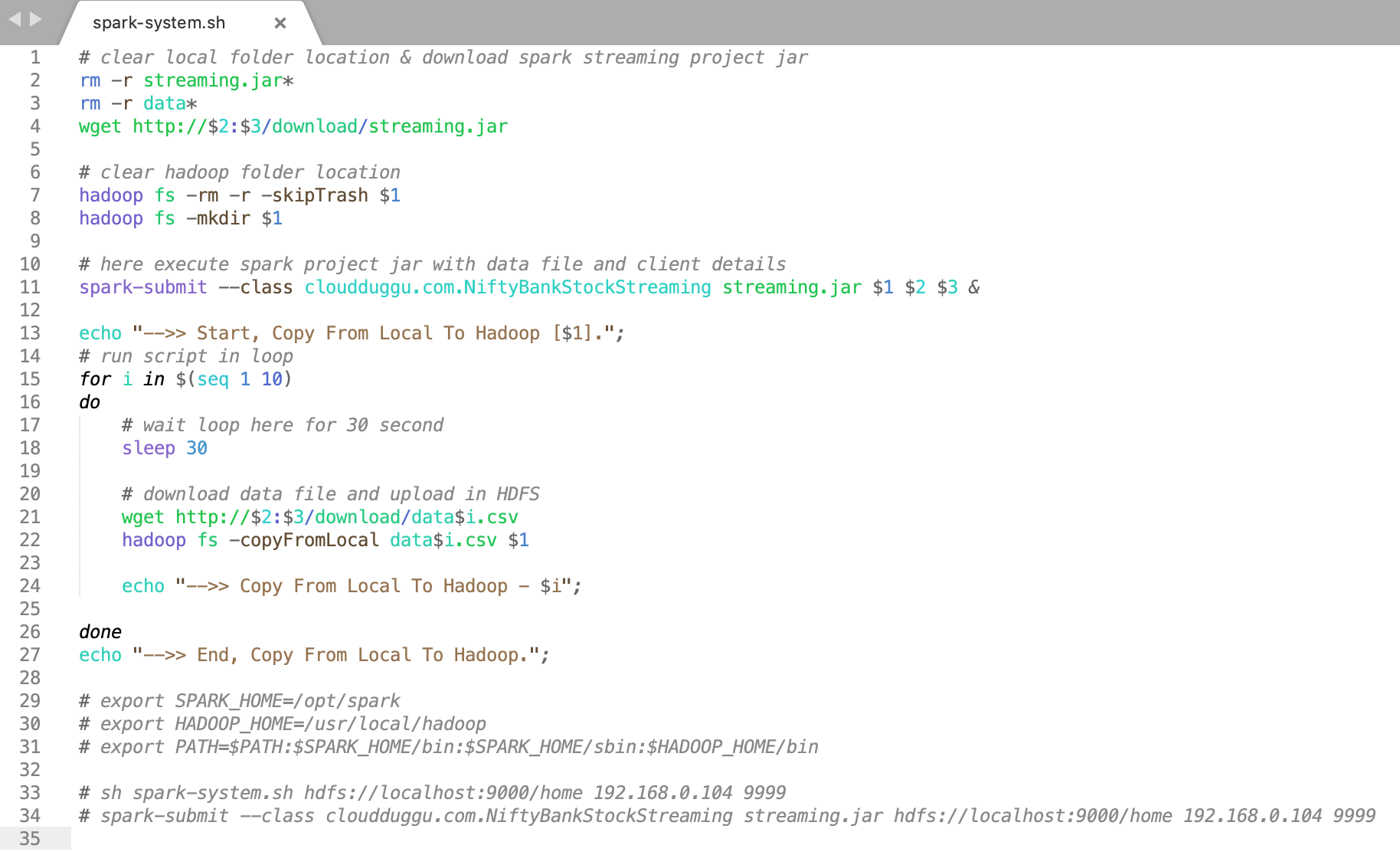

(i). spark-system.sh

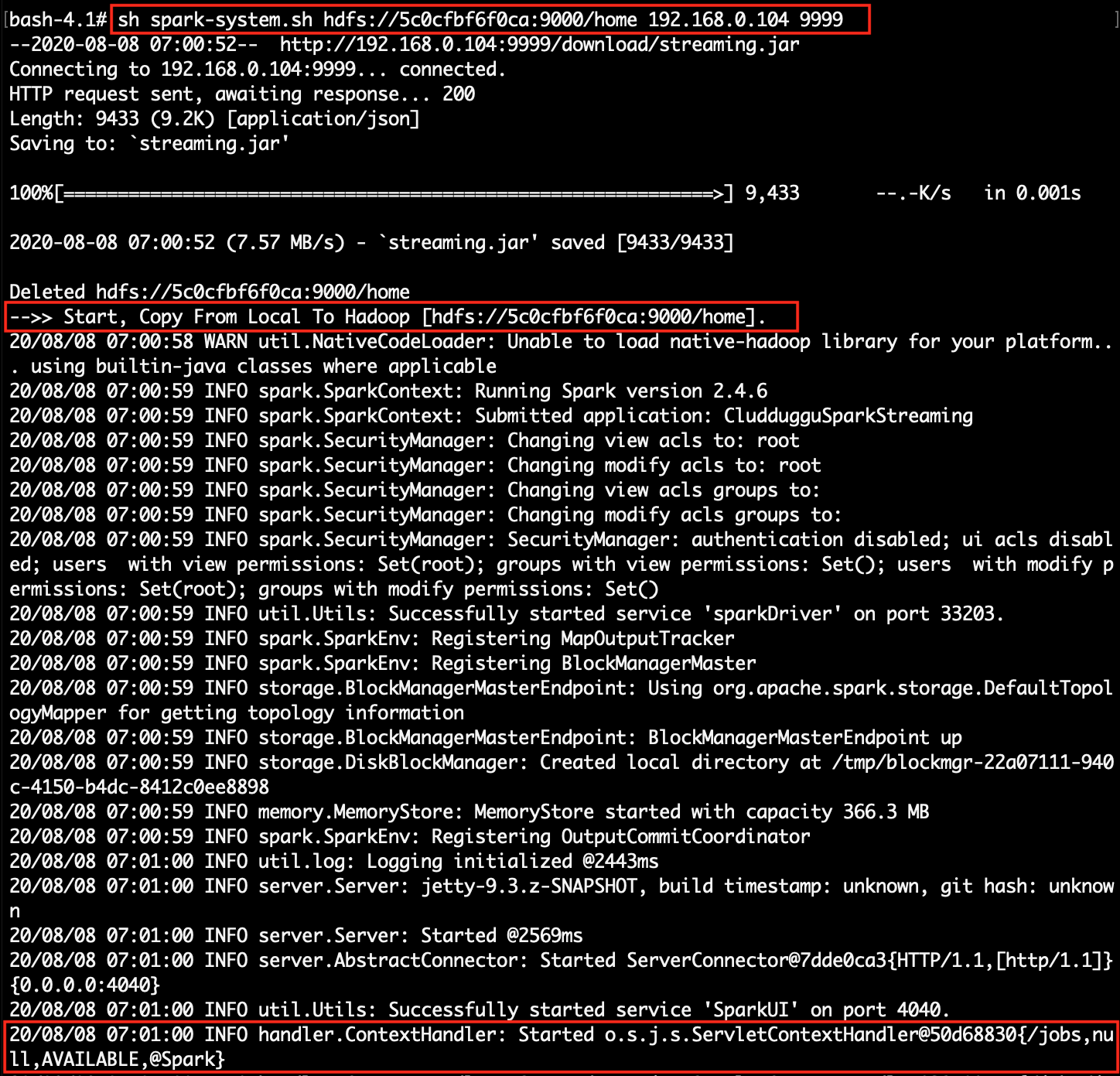

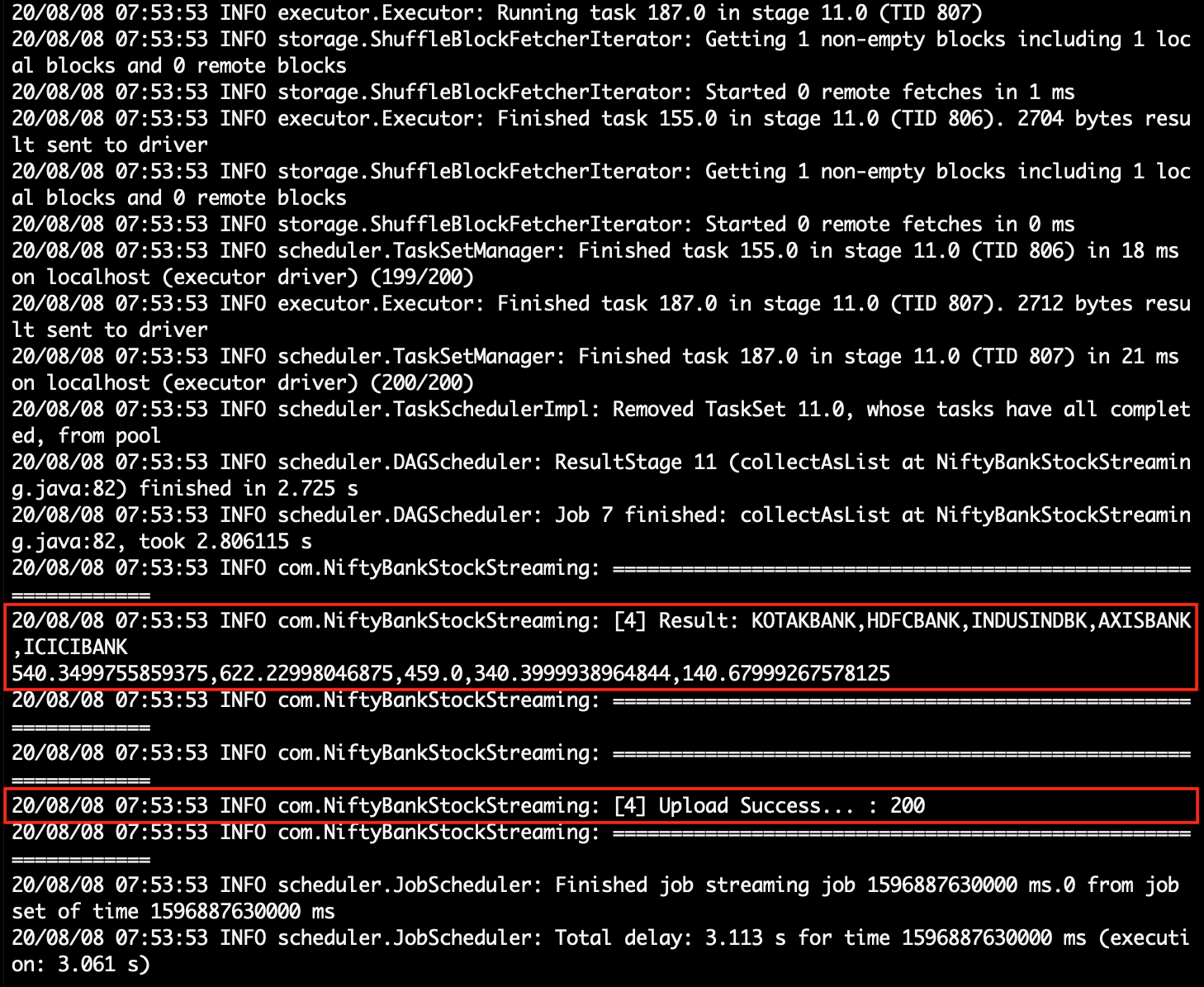

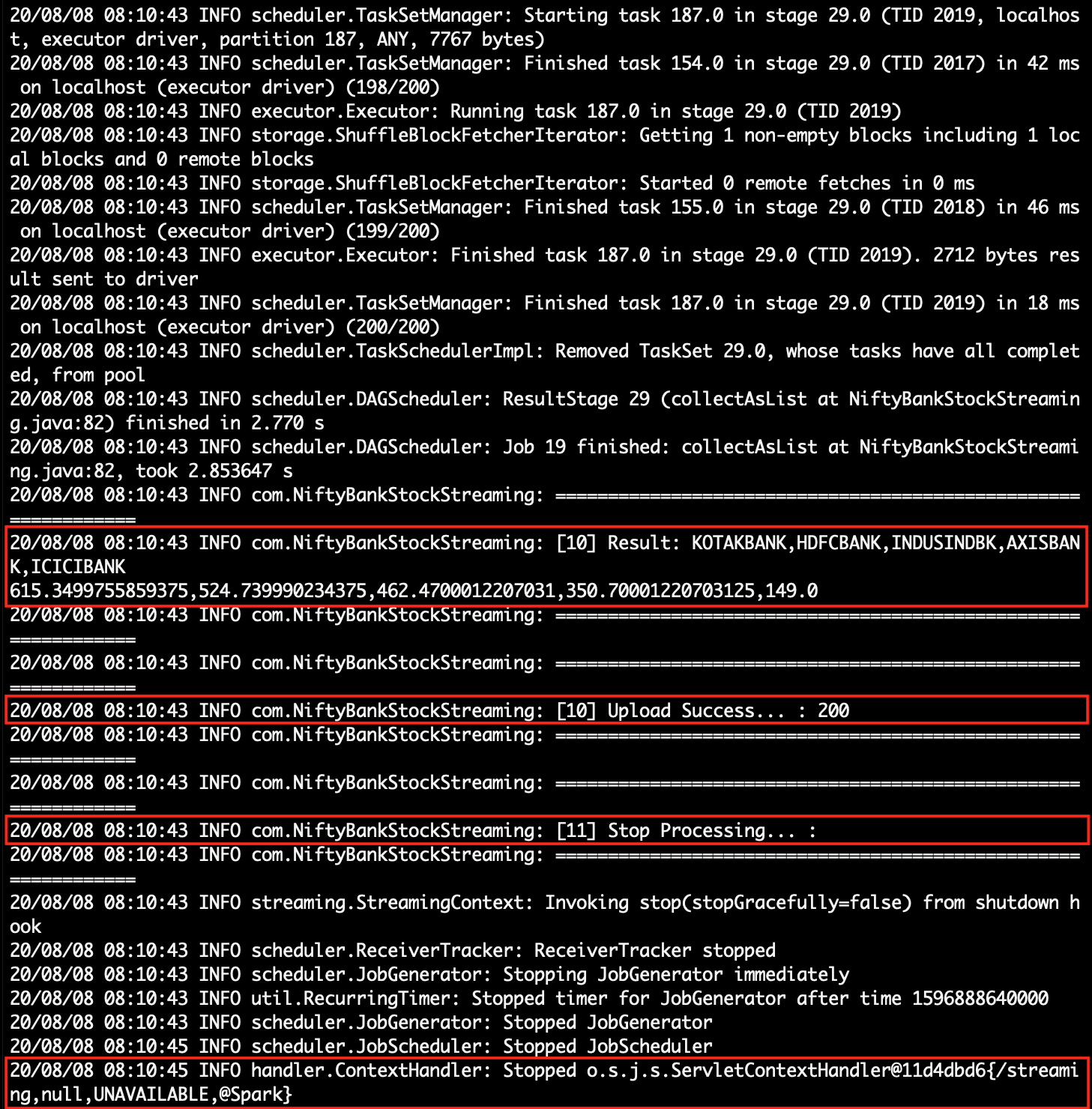

Using the shell script (spark-system.sh), we can easily run the Spark project jar on the Spark system.

The spark-system.sh file is also used to upload data files from the local system to HDFS in a loop. The loop runs 10 times; once it finishes, the Spark system stops uploading data to HDFS.

The spark-system.sh file has 3 input variables, all of which are required at runtime.

The first variable is $1, used to obtain the HDFS access link from the command line.

The second variable is $2, used to obtain the client IP address from the command line.

The third variable is $3, used to obtain the client port number from the command line.
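The behaviour described above (three positional arguments and ten uploads to HDFS) can be sketched as follows. This is not the author's actual script: the function names, the `RUN` dry-run switch, the `ltp_N.csv` target names and the 5-second pause are all hypothetical. It also assumes that the data file is already on the local disk, that streaming.jar names its main class in the manifest, and that the jar reads the three values as program arguments.

```shell
#!/bin/sh
# Sketch of a spark-system.sh-style driver, NOT the author's actual script.
# Set RUN=echo to print the commands instead of executing them (dry run).
RUN=${RUN:-}

# Fail early unless all three documented arguments are present.
check_args() {
    if [ "$#" -ne 3 ]; then
        echo "usage: sh spark-system.sh <hdfs-link> <client-ip> <client-port>" >&2
        return 1
    fi
}

# Copy one local CSV file to HDFS ten times under distinct names, so the
# streaming job sees a new file on every pass.
upload_loop() {
    hdfs_url=$1       # first script argument ($1)
    data_file=$2      # hypothetical local CSV file
    i=1
    while [ "$i" -le 10 ]; do
        $RUN hdfs dfs -put "$data_file" "$hdfs_url/ltp_$i.csv"
        $RUN sleep 5  # hypothetical pause between uploads
        i=$((i + 1))
    done
}

# Launch the streaming job with the HDFS link, client IP and client port.
start_streaming() {
    $RUN spark-submit streaming.jar "$1" "$2" "$3"
}
```

A driver built from these pieces would call `check_args "$@"`, start the streaming job in the background, and then run `upload_loop "$1" data.csv`. Running with `RUN=echo` shows the commands without touching HDFS or Spark.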

In the command (sh spark-system.sh hdfs://5c0cfbf6f0ca:9000/home 192.168.0.104 9999), "sh" is the Linux shell command, "spark-system.sh" is the shell script file name, "hdfs://5c0cfbf6f0ca:9000/home" is the first variable, "192.168.0.104" is the second variable, and "9999" is the third variable.

:) ...enjoy the spark streaming project.